ChrF Score

Module Interface

- class torchmetrics.text.CHRFScore(n_char_order=6, n_word_order=2, beta=2.0, lowercase=False, whitespace=False, return_sentence_level_score=False, **kwargs)

Calculate the chrF score of machine-translated text with one or more references.

This implementation supports both the chrF score introduced in [1] and the chrF++ score introduced in [2]. It follows the implementations from https://github.com/m-popovic/chrF and https://github.com/mjpost/sacrebleu/blob/master/sacrebleu/metrics/chrf.py.

As input to forward and update, the metric accepts the following input:

- preds (Sequence): An iterable of hypothesis corpus
- target (Sequence): An iterable of iterables of reference corpus

As output of forward and compute, the metric returns the following output:

- chrf (Tensor): If return_sentence_level_score=True, return a list of sentence-level chrF/chrF++ scores; otherwise return a corpus-level chrF/chrF++ score

- Parameters:

  - n_char_order (int) – A character n-gram order. If n_char_order=6, the metric refers to the official chrF/chrF++.
  - n_word_order (int) – A word n-gram order. If n_word_order=2, the metric refers to the official chrF++. If n_word_order=0, the metric is equivalent to the original chrF.
  - beta (float) – A parameter determining the importance of recall with respect to precision. If beta=1, their importance is equal.
  - lowercase (bool) – Whether to enable case-insensitivity.
  - whitespace (bool) – Whether to keep whitespace during n-gram extraction.
  - return_sentence_level_score (bool) – Whether to return sentence-level chrF/chrF++ scores.
  - kwargs (Any) – Additional keyword arguments, see Advanced metric settings for more info.

- Raises:

  - ValueError – If n_char_order is not an integer greater than or equal to 1.
  - ValueError – If n_word_order is not an integer greater than or equal to 0.
  - ValueError – If beta is smaller than 0.
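The effect of beta can be illustrated with a short, self-contained sketch. It assumes the standard F-beta formula, F = (1 + beta^2) * P * R / (beta^2 * P + R), where P is precision and R is recall. The helper name f_beta is hypothetical and is not part of torchmetrics; this is not the library's code.

```python
def f_beta(precision: float, recall: float, beta: float = 2.0) -> float:
    """Combine precision and recall into an F-beta score (illustrative helper)."""
    if beta < 0:
        raise ValueError("beta must be greater than or equal to 0")
    denominator = beta**2 * precision + recall
    if denominator == 0.0:
        # No matches at all: define the score as 0 to avoid dividing by zero.
        return 0.0
    return (1 + beta**2) * precision * recall / denominator


# With beta=1, precision and recall carry equal weight (harmonic mean).
balanced = f_beta(0.8, 0.4, beta=1.0)

# With beta=2, recall weighs more, so a low recall pulls the score down further.
recall_weighted = f_beta(0.8, 0.4, beta=2.0)

print(balanced, recall_weighted)
```

Because recall is the lower of the two values here, the beta=2 score comes out below the beta=1 score.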

Example

    >>> from torchmetrics.text import CHRFScore
    >>> preds = ['the cat is on the mat']
    >>> target = [['there is a cat on the mat', 'a cat is on the mat']]
    >>> chrf = CHRFScore()
    >>> chrf(preds, target)
    tensor(0.8640)

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

  - val (Union[Tensor, Sequence[Tensor], None]) – Either a single result from calling metric.forward or metric.compute, or a list of these results. If no value is provided, metric.compute is called automatically and its result is plotted.
  - ax (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Returns:

  Figure and Axes object

- Raises:

  - ModuleNotFoundError – If matplotlib is not installed

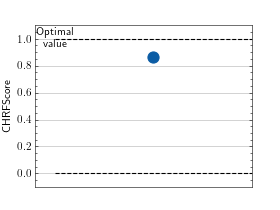

    >>> # Example plotting a single value
    >>> from torchmetrics.text import CHRFScore
    >>> metric = CHRFScore()
    >>> preds = ['the cat is on the mat']
    >>> target = [['there is a cat on the mat', 'a cat is on the mat']]
    >>> metric.update(preds, target)
    >>> fig_, ax_ = metric.plot()

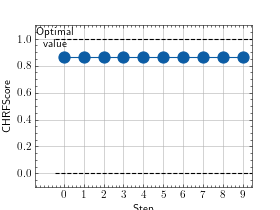

    >>> # Example plotting multiple values
    >>> from torchmetrics.text import CHRFScore
    >>> metric = CHRFScore()
    >>> preds = ['the cat is on the mat']
    >>> target = [['there is a cat on the mat', 'a cat is on the mat']]
    >>> values = []
    >>> for _ in range(10):
    ...     values.append(metric(preds, target))
    >>> fig_, ax_ = metric.plot(values)

Functional Interface

- torchmetrics.functional.text.chrf_score(preds, target, n_char_order=6, n_word_order=2, beta=2.0, lowercase=False, whitespace=False, return_sentence_level_score=False)

Calculate the chrF score of machine-translated text with one or more references.

This implementation supports both the chrF score introduced in [1] and the chrF++ score introduced in [2]. It follows the implementations from https://github.com/m-popovic/chrF and https://github.com/mjpost/sacrebleu/blob/master/sacrebleu/metrics/chrf.py.

- Parameters:

  - preds (Union[str, Sequence[str]]) – An iterable of hypothesis corpus.
  - target (Sequence[Union[str, Sequence[str]]]) – An iterable of iterables of reference corpus.
  - n_char_order (int) – A character n-gram order. If n_char_order=6, the metric refers to the official chrF/chrF++.
  - n_word_order (int) – A word n-gram order. If n_word_order=2, the metric refers to the official chrF++. If n_word_order=0, the metric is equivalent to the original chrF.
  - beta (float) – A parameter determining the importance of recall with respect to precision. If beta=1, their importance is equal.
  - lowercase (bool) – Whether to enable case-insensitivity.
  - whitespace (bool) – Whether to keep whitespace during character n-gram extraction.
  - return_sentence_level_score (bool) – Whether to return sentence-level chrF/chrF++ scores.
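To make the n_char_order, lowercase, and whitespace parameters more concrete, the following is a minimal sketch of character n-gram extraction. It assumes that whitespace=False means all whitespace is stripped before n-grams are taken, as in sacreBLEU-style implementations. The function name char_ngrams is hypothetical and does not exist in torchmetrics.

```python
from collections import Counter


def char_ngrams(sentence: str, n: int, lowercase: bool = False, whitespace: bool = False) -> Counter:
    """Count character n-grams of order n (illustrative helper, not library code)."""
    if n < 1:
        raise ValueError("n must be an integer greater than or equal to 1")
    if lowercase:
        sentence = sentence.lower()
    if not whitespace:
        # Assumption: dropping whitespace means removing it entirely before extraction.
        sentence = "".join(sentence.split())
    # Slide a window of width n over the (possibly normalized) sentence.
    return Counter(sentence[i:i + n] for i in range(len(sentence) - n + 1))


without_spaces = char_ngrams("The cat", 3)
with_spaces = char_ngrams("The cat", 3, whitespace=True)
case_insensitive = char_ngrams("The cat", 3, lowercase=True)

print(sorted(without_spaces))
print(sorted(with_spaces))
print(sorted(case_insensitive))
```

Keeping whitespace produces extra n-grams that span word boundaries, while lowercasing makes "The" and "the" contribute the same n-grams.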

- Returns:

  A corpus-level chrF/chrF++ score and, if return_sentence_level_score=True, a list of sentence-level chrF/chrF++ scores.
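The difference between sentence-level and corpus-level scores can be sketched in plain Python. This simplified example assumes that a corpus-level score pools n-gram match counts over all sentences before computing the F-score, as sacreBLEU-style implementations typically do. It uses a single character n-gram order and a single reference per sentence, and the helper names ngram_stats and f_score are hypothetical, so its numbers will not match chrf_score.

```python
from collections import Counter
from typing import Tuple


def ngram_stats(hyp: str, ref: str, n: int = 2) -> Tuple[int, int, int]:
    """Return (matching, hypothesis_total, reference_total) character n-gram counts."""
    hyp_counts = Counter(hyp[i:i + n] for i in range(len(hyp) - n + 1))
    ref_counts = Counter(ref[i:i + n] for i in range(len(ref) - n + 1))
    # Counter intersection keeps the minimum count of each shared n-gram.
    matching = sum((hyp_counts & ref_counts).values())
    return matching, sum(hyp_counts.values()), sum(ref_counts.values())


def f_score(matching: int, hyp_total: int, ref_total: int, beta: float = 2.0) -> float:
    """F-beta score from pooled counts; 0.0 when nothing matches."""
    if matching == 0:
        return 0.0
    precision = matching / hyp_total
    recall = matching / ref_total
    return (1 + beta**2) * precision * recall / (beta**2 * precision + recall)


hyps = ["abcd", "xy"]
refs = ["abcd", "xz"]

stats = [ngram_stats(h, r) for h, r in zip(hyps, refs)]

# Sentence level: one score per hypothesis/reference pair.
sentence_scores = [f_score(*s) for s in stats]

# Corpus level: sum the counts column-wise first, then compute one score.
corpus_score = f_score(*(sum(column) for column in zip(*stats)))

print(sentence_scores, corpus_score)
```

Under these assumptions the corpus-level score is generally not the mean of the sentence-level scores, because longer sentences contribute more n-grams to the pooled counts.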

- Raises:

  - ValueError – If n_char_order is not an integer greater than or equal to 1.
  - ValueError – If n_word_order is not an integer greater than or equal to 0.
  - ValueError – If beta is smaller than 0.

Example

    >>> from torchmetrics.functional.text import chrf_score
    >>> preds = ['the cat is on the mat']
    >>> target = [['there is a cat on the mat', 'a cat is on the mat']]
    >>> chrf_score(preds, target)
    tensor(0.8640)

References

[1] chrF: character n-gram F-score for automatic MT evaluation by Maja Popović

[2] chrF++: words helping character n-grams by Maja Popović