InfoLM

Module Interface

- class torchmetrics.text.infolm.InfoLM(model_name_or_path='bert-base-uncased', temperature=0.25, information_measure='kl_divergence', idf=True, alpha=None, beta=None, device=None, max_length=None, batch_size=64, num_threads=0, verbose=True, return_sentence_level_score=False, **kwargs)

Calculate InfoLM.

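InfoLM measures a distance/divergence between the discrete distributions of the predicted and reference sentences, using one of the following information measures:

KL divergence

alpha divergence

beta divergence

AB divergence

Rényi divergence

L1 distance

L2 distance

L-infinity distance

Fisher-Rao distance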
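For intuition, the L1, L2 and L-infinity distances listed above can be computed by hand on two toy discrete distributions. This is a minimal sketch in plain Python with made-up numbers and hypothetical helper names; it is not the library's implementation, which operates on distributions produced by a masked language model and may apply different conventions.

```python
import math

# Hypothetical helpers for illustration only; not part of torchmetrics.

def l1_distance(p, q):
    """Sum of absolute differences between two discrete distributions."""
    return sum(abs(a - b) for a, b in zip(p, q))

def l2_distance(p, q):
    """Euclidean distance between two discrete distributions."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def l_infinity_distance(p, q):
    """Largest absolute difference over the vocabulary."""
    return max(abs(a - b) for a, b in zip(p, q))

# Two toy distributions over a four-token vocabulary (made-up numbers).
p = [0.5, 0.25, 0.125, 0.125]
q = [0.25, 0.25, 0.25, 0.25]

for name, fn in [("L1", l1_distance), ("L2", l2_distance), ("L-inf", l_infinity_distance)]:
    print(name, fn(p, q))
```

All three are zero when the two distributions are identical and grow as they diverge.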

InfoLM is a family of untrained embedding-based metrics that address some well-known flaws of standard string-based metrics by using pre-trained masked language models. This family of metrics is mainly designed for summarization and data-to-text tasks.

The implementation of this metric is based entirely on the HuggingFace transformers package.

As input to forward and update the metric accepts the following input:

- preds (Sequence): An iterable of hypothesis corpus
- target (Sequence): An iterable of reference corpus

As output of forward and compute the metric returns the following output:

- infolm (Tensor): If return_sentence_level_score=True, a tuple containing a tensor with the corpus-level InfoLM score and a list of sentence-level InfoLM scores; otherwise, the corpus-level InfoLM score alone.
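Because the shape of the output depends on return_sentence_level_score, calling code may want to normalize both cases. The sketch below uses a hypothetical helper, unpack_infolm_output, which is not part of torchmetrics, and plain floats stand in for tensors so the snippet runs without downloading a model.

```python
def unpack_infolm_output(result):
    """Return (corpus_score, sentence_scores) for either output shape.

    Hypothetical helper: sentence_scores is None when only the
    corpus-level score was returned.
    """
    if isinstance(result, tuple):
        corpus_score, sentence_scores = result
        return corpus_score, sentence_scores
    return result, None

# Stand-in values (made up); a real call would pass the metric's output.
corpus_only = unpack_infolm_output(-0.5)
with_sentences = unpack_infolm_output((-0.5, [-0.4, -0.6]))
print(corpus_only)
print(with_sentences)
```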

- Parameters:

model_name_or_path (Union[str, PathLike]) – A name or a model path used to load a transformers pretrained model. By default the "bert-base-uncased" model is used.

temperature (float) – A temperature for calibrating language modelling. For more information, please refer to the InfoLM paper.

information_measure (Literal['kl_divergence', 'alpha_divergence', 'beta_divergence', 'ab_divergence', 'renyi_divergence', 'l1_distance', 'l2_distance', 'l_infinity_distance', 'fisher_rao_distance']) – The name of the information measure to be used. Must be one of the listed values.

idf (bool) – An indication of whether normalization using inverse document frequencies should be used.

alpha (Optional[float]) – Alpha parameter of the divergence used for alpha, AB and Rényi divergence measures.

beta (Optional[float]) – Beta parameter of the divergence used for beta and AB divergence measures.

device (Union[device, str, None]) – A device to be used for calculation.

max_length (Optional[int]) – A maximum length of input sequences. Sequences longer than max_length are trimmed.

num_threads (int) – The number of threads to use for a dataloader.

verbose (bool) – An indication of whether a progress bar is to be displayed during the embeddings calculation.

return_sentence_level_score (bool) – An indication of whether a sentence-level InfoLM score is to be returned.
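To give a feel for what a temperature does, the sketch below applies a softmax with temperature to a few made-up logits. It assumes the common formulation in which logits are divided by the temperature before the softmax; the exact role of temperature in InfoLM is defined in the InfoLM paper and may differ in detail. The function name is hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Softmax over logits / temperature (assumed formulation, for illustration)."""
    scaled = [x / temperature for x in logits]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # made-up scores for three tokens
sharp = softmax_with_temperature(logits, 0.25)  # low temperature: peaked
flat = softmax_with_temperature(logits, 4.0)    # high temperature: closer to uniform
print(sharp)
print(flat)
```

Under this assumption, a small temperature such as the default 0.25 concentrates probability mass on the highest-scoring tokens, while a large one spreads it out.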

Example

    >>> from torchmetrics.text.infolm import InfoLM
    >>> preds = ['he read the book because he was interested in world history']
    >>> target = ['he was interested in world history because he read the book']
    >>> infolm = InfoLM('google/bert_uncased_L-2_H-128_A-2', idf=False)
    >>> infolm(preds, target)
    tensor(-0.1784)

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

val (Union[Tensor, Sequence[Tensor], None]) – Either a single result from calling metric.forward or metric.compute, or a list of these results. If no value is provided, metric.compute is called automatically and its result is plotted.

ax (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

    >>> # Example plotting a single value
    >>> from torchmetrics.text.infolm import InfoLM
    >>> metric = InfoLM('google/bert_uncased_L-2_H-128_A-2', idf=False)
    >>> preds = ['he read the book because he was interested in world history']
    >>> target = ['he was interested in world history because he read the book']
    >>> metric.update(preds, target)
    >>> fig_, ax_ = metric.plot()

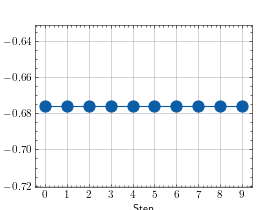

    >>> # Example plotting multiple values
    >>> from torchmetrics.text.infolm import InfoLM
    >>> metric = InfoLM('google/bert_uncased_L-2_H-128_A-2', idf=False)
    >>> preds = ["this is the prediction", "there is an other sample"]
    >>> target = ["this is the reference", "there is another one"]
    >>> values = []
    >>> for _ in range(10):
    ...     values.append(metric(preds, target))
    >>> fig_, ax_ = metric.plot(values)

Functional Interface

- torchmetrics.functional.text.infolm.infolm(preds, target, model_name_or_path='bert-base-uncased', temperature=0.25, information_measure='kl_divergence', idf=True, alpha=None, beta=None, device=None, max_length=None, batch_size=64, num_threads=0, verbose=True, return_sentence_level_score=False)

Calculate InfoLM [1].

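InfoLM corresponds to a distance/divergence between the discrete distributions of the predicted and reference sentences, using one of the following information measures:

KL divergence

alpha divergence

beta divergence

AB divergence

Rényi divergence

L1 distance

L2 distance

L-infinity distance

Fisher-Rao distance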

InfoLM is a family of untrained embedding-based metrics that address some well-known flaws of standard string-based metrics by using pre-trained masked language models. This family of metrics is mainly designed for summarization and data-to-text tasks.

If you want to use IDF scaling over the whole dataset, please use the class metric.
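IDF weights depend on which sentences are counted, so weights derived from a single batch can differ from weights derived from a whole dataset. The sketch below illustrates this with a hypothetical idf_weights helper, whitespace tokenization, and one common smoothed IDF formula; all three are assumptions made for illustration, and the library's own tokenization and weighting may differ.

```python
import math
from collections import Counter

def idf_weights(corpus):
    """Smoothed IDF per token: log((N + 1) / (df + 1)), with N sentences.

    Hypothetical helper using whitespace tokenization (an assumption).
    """
    n_sentences = len(corpus)
    doc_freq = Counter()
    for sentence in corpus:
        doc_freq.update(set(sentence.split()))  # count each token once per sentence
    return {tok: math.log((n_sentences + 1) / (df + 1)) for tok, df in doc_freq.items()}

# Made-up dataset; the first two sentences play the role of one batch.
dataset = ["the cat sat", "the dog ran", "a bird flew", "the fish swam"]
full_idf = idf_weights(dataset)
batch_idf = idf_weights(dataset[:2])

print(full_idf["the"], batch_idf["the"])
print(full_idf["cat"], batch_idf["cat"])
```

In this toy case the token "the" gets a weight of zero within the two-sentence batch but a positive weight over the full dataset, which is the kind of discrepancy that dataset-wide IDF scaling avoids.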

The implementation of this metric is based entirely on the HuggingFace transformers package.

- Parameters:

preds (Union[str, Sequence[str]]) – An iterable of hypothesis corpus.

target (Union[str, Sequence[str]]) – An iterable of reference corpus.

model_name_or_path (Union[str, PathLike]) – A name or a model path used to load a transformers pretrained model.

temperature (float) – A temperature for calibrating language modelling. For more information, please refer to the InfoLM paper.

information_measure (Literal['kl_divergence', 'alpha_divergence', 'beta_divergence', 'ab_divergence', 'renyi_divergence', 'l1_distance', 'l2_distance', 'l_infinity_distance', 'fisher_rao_distance']) – The name of the information measure to be used. Must be one of the listed values.

idf (bool) – An indication of whether normalization using inverse document frequencies should be used.

alpha (Optional[float]) – Alpha parameter of the divergence used for alpha, AB and Rényi divergence measures.

beta (Optional[float]) – Beta parameter of the divergence used for beta and AB divergence measures.

device (Union[device, str, None]) – A device to be used for calculation.

max_length (Optional[int]) – A maximum length of input sequences. Sequences longer than max_length are trimmed.

num_threads (int) – The number of threads to use for a dataloader.

verbose (bool) – An indication of whether a progress bar is to be displayed during the embeddings calculation.

return_sentence_level_score (bool) – An indication of whether a sentence-level InfoLM score is to be returned.

- Returns:

A corpus-level InfoLM score. (Optionally) A list of sentence-level InfoLM scores if return_sentence_level_score=True.
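The information_measure and alpha parameters select among several divergences. As a rough illustration, the sketch below implements textbook definitions of the KL divergence, the Rényi divergence and the Fisher-Rao distance for two toy discrete distributions. The helper names and numbers are made up, and the library's sign, argument order and normalization may differ from these textbook forms.

```python
import math

# Textbook definitions for illustration; not the torchmetrics implementation.

def kl_divergence(p, q):
    """KL(p || q) = sum_i p_i * log(p_i / q_i)."""
    return sum(a * math.log(a / b) for a, b in zip(p, q) if a > 0)

def renyi_divergence(p, q, alpha):
    """Renyi divergence of order alpha: log(sum_i p_i^alpha * q_i^(1 - alpha)) / (alpha - 1)."""
    if alpha == 1:
        raise ValueError("alpha must differ from 1; the limit alpha -> 1 is the KL divergence")
    total = sum(a ** alpha * b ** (1 - alpha) for a, b in zip(p, q))
    return math.log(total) / (alpha - 1)

def fisher_rao_distance(p, q):
    """Fisher-Rao distance on the simplex: 2 * arccos(sum_i sqrt(p_i * q_i))."""
    coefficient = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return 2 * math.acos(min(1.0, coefficient))  # clamp against rounding error

p = [0.5, 0.25, 0.125, 0.125]  # made-up distributions
q = [0.25, 0.25, 0.25, 0.25]

print(kl_divergence(p, q))
print(renyi_divergence(p, q, alpha=0.999))  # close to the KL value
print(fisher_rao_distance(p, q))
```

The Rényi divergence approaches the KL divergence as alpha tends to 1, which is why alpha only matters for the measures that take it.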

Example

    >>> from torchmetrics.functional.text.infolm import infolm
    >>> preds = ['he read the book because he was interested in world history']
    >>> target = ['he was interested in world history because he read the book']
    >>> infolm(preds, target, model_name_or_path='google/bert_uncased_L-2_H-128_A-2', idf=False)
    tensor(-0.1784)

References

[1] InfoLM: A New Metric to Evaluate Summarization & Data2Text Generation by Pierre Colombo, Chloé Clavel and Pablo Piantanida