Non-Intrusive Speech Quality Assessment (NISQA v2.0)¶

Module Interface¶

- class torchmetrics.audio.nisqa.NonIntrusiveSpeechQualityAssessment(fs, **kwargs)[source]¶

Non-Intrusive Speech Quality Assessment (NISQA v2.0) [1], [2].

As input to

forwardandupdatethe metric accepts the following inputpreds(Tensor): float tensor with shape(...,time)

As output of

forwardandcomputethe metric returns the following outputnisqa(Tensor): float tensor reduced across the batch with shape(5,)corresponding to overall MOS, noisiness, discontinuity, coloration and loudness in that order

Hint

Using this metric requires you to have

librosaandrequestsinstalled. Install aspip install librosa requests.Caution

The

forwardandcomputemethods in this class return values reduced across the batch. To obtain values for each sample, you may use the functional counterpartnon_intrusive_speech_quality_assessment().- Parameters:

- Raises:

ModuleNotFoundError – If

librosaorrequestsare not installed

Example

>>> import torch >>> from torchmetrics.audio import NonIntrusiveSpeechQualityAssessment >>> _ = torch.manual_seed(42) >>> preds = torch.randn(16000) >>> nisqa = NonIntrusiveSpeechQualityAssessment(16000) >>> nisqa(preds) tensor([1.0433, 1.9545, 2.6087, 1.3460, 1.7117])

References

[1] G. Mittag and S. Möller, “Non-intrusive speech quality assessment for super-wideband speech communication networks”, in Proc. ICASSP, 2019.

[2] G. Mittag, B. Naderi, A. Chehadi and S. Möller, “NISQA: A deep CNN-self-attention model for multidimensional speech quality prediction with crowdsourced datasets”, in Proc. INTERSPEECH, 2021.

- plot(val=None, ax=None)[source]¶

Plot a single or multiple values from the metric.

- Parameters:

val¶ (

Union[Tensor,Sequence[Tensor],None]) – Either a single result from callingmetric.forwardormetric.computeor a list of these results. If no value is provided, will automatically callmetric.computeand plot that result.ax¶ (

Optional[Axes]) – A matplotlib axis object. If provided will add plot to that axis

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If

matplotlibis not installed

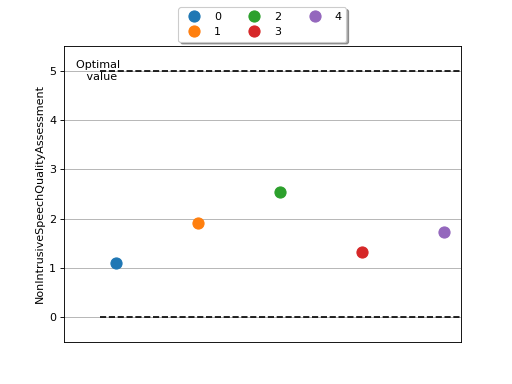

>>> # Example plotting a single value >>> import torch >>> from torchmetrics.audio import NonIntrusiveSpeechQualityAssessment >>> metric = NonIntrusiveSpeechQualityAssessment(16000) >>> metric.update(torch.randn(16000)) >>> fig_, ax_ = metric.plot()

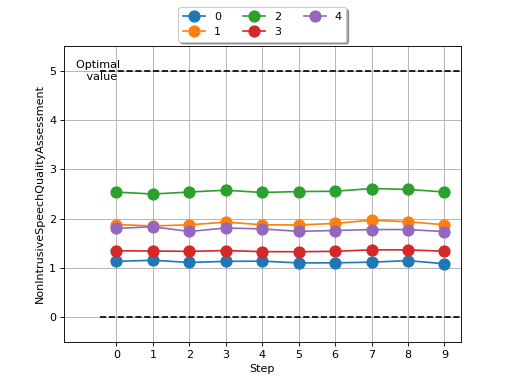

>>> # Example plotting multiple values >>> import torch >>> from torchmetrics.audio import NonIntrusiveSpeechQualityAssessment >>> metric = NonIntrusiveSpeechQualityAssessment(16000) >>> values = [] >>> for _ in range(10): ... values.append(metric(torch.randn(16000))) >>> fig_, ax_ = metric.plot(values)

Functional Interface¶

- torchmetrics.functional.audio.nisqa.non_intrusive_speech_quality_assessment(preds, fs)[source]¶

Non-Intrusive Speech Quality Assessment (NISQA v2.0) [1], [2].

Hint

Usingsing this metric requires you to have

librosaandrequestsinstalled. Install aspip install librosa requests.- Parameters:

- Return type:

- Returns:

Float tensor with shape

(...,5)corresponding to overall MOS, noisiness, discontinuity, coloration and loudness in that order- Raises:

ModuleNotFoundError – If

librosaorrequestsare not installedRuntimeError – If the input is too short, causing the number of mel spectrogram windows to be zero

RuntimeError – If the input is too long, causing the number of mel spectrogram windows to exceed the maximum allowed

Example

>>> import torch >>> from torchmetrics.functional.audio.nisqa import non_intrusive_speech_quality_assessment >>> _ = torch.manual_seed(42) >>> preds = torch.randn(16000) >>> non_intrusive_speech_quality_assessment(preds, 16000) tensor([1.0433, 1.9545, 2.6087, 1.3460, 1.7117])

References

[1] G. Mittag and S. Möller, “Non-intrusive speech quality assessment for super-wideband speech communication networks”, in Proc. ICASSP, 2019.

[2] G. Mittag, B. Naderi, A. Chehadi and S. Möller, “NISQA: A deep CNN-self-attention model for multidimensional speech quality prediction with crowdsourced datasets”, in Proc. INTERSPEECH, 2021.