Deep Noise Suppression Mean Opinion Score (DNSMOS)¶

Module Interface¶

- class torchmetrics.audio.dnsmos.DeepNoiseSuppressionMeanOpinionScore(fs, personalized, device=None, num_threads=None, cache_sessions=True, **kwargs)[source]¶

Calculate Deep Noise Suppression performance evaluation based on Mean Opinion Score (DNSMOS).

Human subjective evaluation is the ”gold standard” to evaluate speech quality optimized for human perception. Perceptual objective metrics serve as a proxy for subjective scores. The conventional and widely used metrics require a reference clean speech signal, which is unavailable in real recordings. The no-reference approaches correlate poorly with human ratings and are not widely adopted in the research community. One of the biggest use cases of these perceptual objective metrics is to evaluate noise suppression algorithms. DNSMOS generalizes well in challenging test conditions with a high correlation to human ratings in stack ranking noise suppression methods. More details can be found in DNSMOS paper and DNSMOS P.835 paper.

As input to

forwardandupdatethe metric accepts the following inputpreds(Tensor): float tensor with shape(...,time)

As output of

forwardandcomputethe metric returns the following outputdnsmos(Tensor): float tensor of DNSMOS values reduced across the batchwith shape

(...,4)indicating [p808_mos, mos_sig, mos_bak, mos_ovr] in the last dim.

Hint

Using this metric requires you to have

librosa,onnxruntimeandrequestsinstalled. Install aspip install torchmetrics['audio']or alternatively pip install librosa onnxruntime-gpu requests (if you do not have GPU enabled machine install onnxruntime instead of onnxruntime-gpu)Caution

The

forwardandcomputemethods in this class return a reduced DNSMOS value for a batch. To obtain the DNSMOS value for each sample, you may use the functional counterpart indeep_noise_suppression_mean_opinion_score().- Parameters:

personalized¶ (

bool) – whether interfering speaker is penalizeddevice¶ (

Optional[str]) – the device used for calculating DNSMOS, can be cpu or cuda:n, where n is the index of gpu. If None is given, then the device of input is used.num_threads¶ (

Optional[int]) – number of threads to use for onnxruntime CPU inference.cache_session¶ – whether to cache the onnx session. By default this is true, meaning that repeated calls to this method is faster than if this was set to False, the consequence is that the session will be cached in memory until the process is terminated.

- Raises:

ModuleNotFoundError – If

librosa,onnxruntimeorrequestspackages are not installed

Example

>>> from torch import randn >>> from torchmetrics.audio import DeepNoiseSuppressionMeanOpinionScore >>> preds = randn(8000) >>> dnsmos = DeepNoiseSuppressionMeanOpinionScore(8000, False) >>> dnsmos(preds) tensor([2.2..., 2.0..., 1.1..., 1.2...], dtype=torch.float64)

- plot(val=None, ax=None)[source]¶

Plot a single or multiple values from the metric.

- Parameters:

val¶ (

Union[Tensor,Sequence[Tensor],None]) – Either a single result from callingmetric.forwardormetric.computeor a list of these results. If no value is provided, will automatically callmetric.computeand plot that result.ax¶ (

Optional[Axes]) – A matplotlib axis object. If provided will add plot to that axis

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If

matplotlibis not installed

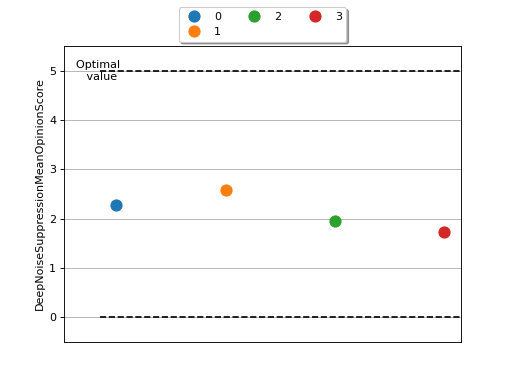

>>> # Example plotting a single value >>> import torch >>> from torchmetrics.audio import DeepNoiseSuppressionMeanOpinionScore >>> metric = DeepNoiseSuppressionMeanOpinionScore(8000, False) >>> metric.update(torch.rand(8000)) >>> fig_, ax_ = metric.plot()

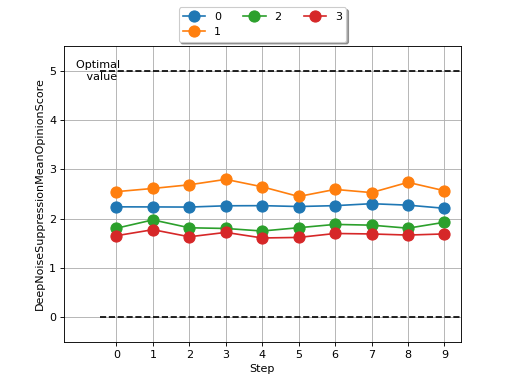

>>> # Example plotting multiple values >>> import torch >>> from torchmetrics.audio import DeepNoiseSuppressionMeanOpinionScore >>> metric = DeepNoiseSuppressionMeanOpinionScore(8000, False) >>> values = [ ] >>> for _ in range(10): ... values.append(metric(torch.rand(8000))) >>> fig_, ax_ = metric.plot(values)

Functional Interface¶

- torchmetrics.functional.audio.dnsmos.deep_noise_suppression_mean_opinion_score(preds, fs, personalized, device=None, num_threads=None, cache_session=True)[source]¶

Calculate Deep Noise Suppression performance evaluation based on Mean Opinion Score (DNSMOS).

Human subjective evaluation is the ”gold standard” to evaluate speech quality optimized for human perception. Perceptual objective metrics serve as a proxy for subjective scores. The conventional and widely used metrics require a reference clean speech signal, which is unavailable in real recordings. The no-reference approaches correlate poorly with human ratings and are not widely adopted in the research community. One of the biggest use cases of these perceptual objective metrics is to evaluate noise suppression algorithms. DNSMOS generalizes well in challenging test conditions with a high correlation to human ratings in stack ranking noise suppression methods. More details can be found in DNSMOS paper and DNSMOS P.835 paper.

Hint

Using this metric requires you to have

librosa,onnxruntimeandrequestsinstalled. Install aspip install torchmetrics['audio']or alternativelypip install librosa onnxruntime-gpu requests(if you do not have GPU enabled machine installonnxruntimeinstead ofonnxruntime-gpu)- Parameters:

personalized¶ (

bool) – whether interfering speaker is penalizeddevice¶ (

Optional[str]) – the device used for calculating DNSMOS, can be cpu or cuda:n, where n is the index of gpu. If None is given, then the device of input is used.num_threads¶ (

Optional[int]) – the number of threads to use for cpu inference. Defaults to None.cache_session¶ (

bool) – whether to cache the onnx session. By default this is true, meaning that repeated calls to this method is faster than if this was set to False, the consequence is that the session will be cached in memory until the process is terminated.

- Return type:

- Returns:

Float tensor with shape

(...,4)of DNSMOS values per sample, i.e. [p808_mos, mos_sig, mos_bak, mos_ovr]- Raises:

ModuleNotFoundError – If

librosa,onnxruntimeorrequestspackages are not installed

Example

>>> from torch import randn >>> from torchmetrics.functional.audio.dnsmos import deep_noise_suppression_mean_opinion_score >>> preds = randn(8000) >>> deep_noise_suppression_mean_opinion_score(preds, 8000, False) tensor([2.2..., 2.0..., 1.1..., 1.2...], dtype=torch.float64)