Calinski Harabasz Score

Module Interface

- class torchmetrics.clustering.CalinskiHarabaszScore(**kwargs)

Compute Calinski Harabasz Score (also known as variance ratio criterion) for clustering algorithms.

\[CHS(X, L) = \frac{B(X, L) \cdot (n_\text{samples} - n_\text{labels})}{W(X, L) \cdot (n_\text{labels} - 1)}\]

where \(B(X, L)\) is the between-cluster dispersion, i.e. the sum of squared distances between each cluster center and the dataset mean, weighted by the size of the clusters, \(n_\text{samples}\) is the number of samples, \(n_\text{labels}\) is the number of labels, and \(W(X, L)\) is the within-cluster dispersion, i.e. the sum of squared distances between each sample and the center of its assigned cluster.
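The formula above can be evaluated term by term. The following is a minimal sketch in plain PyTorch, not the library's implementation; the helper name `calinski_harabasz_sketch` is hypothetical, and it assumes each sample is measured against the center of the cluster its label assigns it to.

```python
import torch


def calinski_harabasz_sketch(data: torch.Tensor, labels: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: evaluate the CHS formula directly from its definition."""
    n_samples = data.shape[0]
    unique_labels = torch.unique(labels)
    n_labels = unique_labels.numel()
    dataset_mean = data.mean(dim=0)

    between = data.new_zeros(())  # B(X, L)
    within = data.new_zeros(())   # W(X, L)
    for label in unique_labels:
        cluster = data[labels == label]
        center = cluster.mean(dim=0)
        # Squared distance from cluster center to dataset mean, weighted by cluster size.
        between = between + cluster.shape[0] * ((center - dataset_mean) ** 2).sum()
        # Squared distances from each sample to the center of its own cluster.
        within = within + ((cluster - center) ** 2).sum()

    return between * (n_samples - n_labels) / (within * (n_labels - 1))


# Two clusters of two points each: B = 100, W = 4, so CHS = 100 * 2 / (4 * 1) = 50.
data = torch.tensor([[0.0, 0.0], [0.0, 2.0], [10.0, 0.0], [10.0, 2.0]])
labels = torch.tensor([0, 0, 1, 1])
score = calinski_harabasz_sketch(data, labels)
print(score)  # tensor(50.)
```

The explicit loop over labels is chosen for readability; it mirrors the sums in the definition rather than aiming for speed.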

This clustering metric is an intrinsic measure, because it does not rely on ground truth labels for the evaluation. Instead it examines how well the clusters are separated from each other. The score is higher when clusters are dense and well separated, which relates to a standard concept of a cluster.

As input to forward and update the metric accepts the following input:

- data (Tensor): float tensor with shape (N,d) with the embedded data. d is the dimensionality of the embedding space.
- labels (Tensor): single integer tensor with shape (N,) with cluster labels

As output of forward and compute the metric returns the following output:

- chs (Tensor): A tensor with the Calinski Harabasz Score

- Parameters:

kwargs (Any) – Additional keyword arguments, see Advanced metric settings for more info.

- Example:

    >>> from torch import randn, randint
    >>> from torchmetrics.clustering import CalinskiHarabaszScore
    >>> data = randn(20, 3)
    >>> labels = randint(3, (20,))
    >>> metric = CalinskiHarabaszScore()
    >>> metric(data, labels)
    tensor(2.2128)

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

val (Union[Tensor,Sequence[Tensor],None]) – Either a single result from calling metric.forward or metric.compute, or a list of these results. If no value is provided, metric.compute is called automatically and its result is plotted.

ax (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

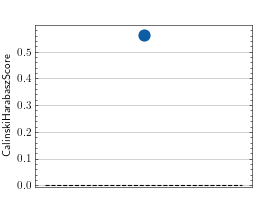

    >>> # Example plotting a single value
    >>> import torch
    >>> from torchmetrics.clustering import CalinskiHarabaszScore
    >>> metric = CalinskiHarabaszScore()
    >>> metric.update(torch.randn(20, 3), torch.randint(3, (20,)))
    >>> fig_, ax_ = metric.plot(metric.compute())

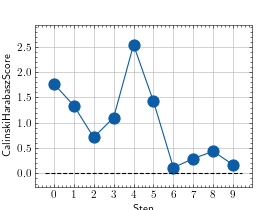

    >>> # Example plotting multiple values
    >>> import torch
    >>> from torchmetrics.clustering import CalinskiHarabaszScore
    >>> metric = CalinskiHarabaszScore()
    >>> values = []
    >>> for _ in range(10):
    ...     values.append(metric(torch.randn(20, 3), torch.randint(3, (20,))))
    >>> fig_, ax_ = metric.plot(values)

Functional Interface

- torchmetrics.functional.clustering.calinski_harabasz_score(data, labels)

Compute the Calinski Harabasz Score (also known as variance ratio criterion) for clustering algorithms.

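- Parameters:

data (Tensor) – float tensor with shape (N,d) with the embedded data

labels (Tensor) – single integer tensor with shape (N,) with cluster labels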

- Returns:

Scalar tensor with the Calinski Harabasz Score

Example

    >>> from torch import randn, randint
    >>> from torchmetrics.functional.clustering import calinski_harabasz_score
    >>> data = randn(20, 3)
    >>> labels = randint(0, 3, (20,))
    >>> calinski_harabasz_score(data, labels)
    tensor(2.2128)