CLIP Score

Module Interface

- class torchmetrics.multimodal.clip_score.CLIPScore(model_name_or_path='openai/clip-vit-large-patch14', **kwargs)

Calculates CLIP Score which is a text-to-image similarity metric.

CLIP Score is a reference-free metric that can be used to evaluate the correlation between a generated caption for an image and the actual content of the image, as well as the similarity between two texts or two images. It has been found to be highly correlated with human judgement. The metric is defined as:

\[\text{CLIPScore}(I, C) = \max(100 \cdot \cos(E_I, E_C), 0)\]

which corresponds to the cosine similarity between the visual CLIP embedding \(E_I\) for an image \(I\) and the textual CLIP embedding \(E_C\) for a caption \(C\). The score is bounded between 0 and 100, and the closer to 100 the better.

Additionally, the CLIP Score can be calculated between two inputs of the same modality:

\[\text{CLIPScore}(I_1, I_2) = \max(100 \cdot \cos(E_{I_1}, E_{I_2}), 0)\]

where \(E_{I_1}\) and \(E_{I_2}\) are the visual embeddings for images \(I_1\) and \(I_2\).

\[\text{CLIPScore}(T_1, T_2) = \max(100 \cdot \cos(E_{T_1}, E_{T_2}), 0)\]

where \(E_{T_1}\) and \(E_{T_2}\) are the textual embeddings for texts \(T_1\) and \(T_2\).
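The formulas above can be illustrated with a minimal pure-Python sketch. It assumes the embeddings are already available as plain lists of floats; the helper names `cosine_similarity` and `clip_score_from_embeddings` are hypothetical and not part of torchmetrics, which obtains the embeddings from a CLIP model instead.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("Embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def clip_score_from_embeddings(e_source: Sequence[float], e_target: Sequence[float]) -> float:
    """Hypothetical helper: max(100 * cos(E_source, E_target), 0)."""
    return max(100.0 * cosine_similarity(e_source, e_target), 0.0)


# Toy 2-dimensional "embeddings", chosen only for illustration.
same_direction = clip_score_from_embeddings([1.0, 0.0], [1.0, 0.0])
opposite_direction = clip_score_from_embeddings([1.0, 0.0], [-1.0, 0.0])
```

The `max(..., 0)` term is what keeps the score in the 0 to 100 range: a negative cosine similarity, as in `opposite_direction`, is clipped to 0 rather than reported as a negative score.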

Caution

Metric is not scriptable

Note

The default CLIP model and processor used in this implementation have a maximum sequence length of 77 for text inputs. If you need to process longer captions, you can use the zer0int/LongCLIP-L-Diffusers model, which has a maximum sequence length of 248.
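One way to act on this note is to pick the model name from the length of the longest caption. The sketch below is only an illustration: `pick_model_name` is a hypothetical helper, and it assumes the caller has already counted tokens with the tokenizer of the model in question (how special tokens count toward the limit depends on that tokenizer).

```python
DEFAULT_MODEL = "openai/clip-vit-large-patch14"
LONG_MODEL = "zer0int/LongCLIP-L-Diffusers"
DEFAULT_MAX_TOKENS = 77   # limit stated in the note above
LONG_MAX_TOKENS = 248     # limit stated in the note above


def pick_model_name(longest_caption_tokens: int) -> str:
    """Hypothetical helper: choose a model whose text limit fits the captions."""
    if longest_caption_tokens <= DEFAULT_MAX_TOKENS:
        return DEFAULT_MODEL
    if longest_caption_tokens <= LONG_MAX_TOKENS:
        return LONG_MODEL
    raise ValueError(
        f"Captions of {longest_caption_tokens} tokens exceed the "
        f"{LONG_MAX_TOKENS}-token limit of {LONG_MODEL}"
    )
```

The returned string could then be passed as `model_name_or_path` when constructing the metric.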

As input to forward and update the metric accepts the following input:

- source: Source input. This can be:

  - Images: Tensor or list of Tensor. If a single tensor, it should have shape (N, C, H, W). If a list of tensors, each tensor should have shape (C, H, W). C is the number of channels, H and W are the height and width of the image.
  - Text: str or list of str. Either a single caption or a list of captions.

- target: Target input. This can be:

  - Images: Tensor or list of Tensor. If a single tensor, it should have shape (N, C, H, W). If a list of tensors, each tensor should have shape (C, H, W). C is the number of channels, H and W are the height and width of the image.
  - Text: str or list of str. Either a single caption or a list of captions.
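Because source and target each accept either images or text, the pair determines which of the three comparisons is computed. A rough sketch of that dispatch, assuming that anything which is not a string or a list of strings is image data; `detect_modality` and `comparison_kind` are hypothetical names used only here, and the library's own checks may differ:

```python
from typing import Any


def detect_modality(data: Any) -> str:
    """Return "text" for a str or a non-empty list of str, otherwise "image"."""
    if isinstance(data, str):
        return "text"
    if isinstance(data, list) and data and all(isinstance(item, str) for item in data):
        return "text"
    # Assumption: every other input is a tensor or a list of tensors.
    return "image"


def comparison_kind(source: Any, target: Any) -> str:
    """Describe the comparison implied by a (source, target) pair."""
    return f"{detect_modality(source)}-{detect_modality(target)}"


# object() stands in for an image tensor so the sketch needs no third-party imports.
fake_image = object()
kind = comparison_kind(fake_image, "a photo of a cat")
```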

As output of forward and compute the metric returns the following output:

- clip_score (Tensor): float scalar tensor with the mean CLIP score over samples
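The "mean over samples" can be pictured as a running sum and a sample count that grow with every update call. The class below is a simplified stand-in working on plain floats, assuming per-sample scores have already been computed; `RunningMeanScore` is a hypothetical name and the library's internal state handling may differ.

```python
from typing import Iterable


class RunningMeanScore:
    """Hypothetical accumulator: mean of per-sample scores across batches."""

    def __init__(self) -> None:
        self.total = 0.0
        self.count = 0

    def update(self, batch_scores: Iterable[float]) -> None:
        # Add every per-sample score of the batch to the running state.
        for score in batch_scores:
            self.total += score
            self.count += 1

    def compute(self) -> float:
        if self.count == 0:
            raise ValueError("compute() called before any update()")
        return self.total / self.count


accumulator = RunningMeanScore()
accumulator.update([20.0, 30.0])  # first batch, two samples
accumulator.update([40.0])        # second batch, one sample
mean_score = accumulator.compute()
```

Averaging over samples rather than over batches means that batches of different sizes contribute in proportion to the number of samples they contain.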

- Parameters:

- model_name_or_path (Union[Literal['openai/clip-vit-base-patch16', 'openai/clip-vit-base-patch32', 'openai/clip-vit-large-patch14-336', 'openai/clip-vit-large-patch14', 'jinaai/jina-clip-v2', 'zer0int/LongCLIP-L-Diffusers', 'zer0int/LongCLIP-GmP-ViT-L-14'], Callable[[], tuple[None, None]]]) – String indicating the version of the CLIP model to use. Available models are:

  - "openai/clip-vit-base-patch16"
  - "openai/clip-vit-base-patch32"
  - "openai/clip-vit-large-patch14-336"
  - "openai/clip-vit-large-patch14"
  - "jinaai/jina-clip-v2"
  - "zer0int/LongCLIP-L-Diffusers"
  - "zer0int/LongCLIP-GmP-ViT-L-14"

  Alternatively, a callable that returns a tuple of a CLIP-compatible model and a processor instance can be passed in. By compatible, we mean that the processor's __call__ method should accept a list of strings and a list of images, and that the model should have get_image_features and get_text_features methods.

- kwargs (Any) – Additional keyword arguments, see Advanced metric settings for more info.

- Raises:

ModuleNotFoundError – If transformers package is not installed or version is lower than 4.10.0
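The compatibility requirement for the callable form of model_name_or_path can be checked up front with a small duck-typing test. This is a sketch only: `check_clip_compatible`, `StubModel`, and `StubProcessor` are hypothetical names, the stubs return placeholder lists rather than real features, and the check inspects only the presence of the methods named above, not their signatures or outputs.

```python
from typing import Any, Callable, Tuple

REQUIRED_MODEL_METHODS = ("get_image_features", "get_text_features")


def check_clip_compatible(factory: Callable[[], Tuple[Any, Any]]) -> Tuple[Any, Any]:
    """Call the factory and verify the returned (model, processor) pair."""
    model, processor = factory()
    missing = [
        name for name in REQUIRED_MODEL_METHODS
        if not callable(getattr(model, name, None))
    ]
    if missing:
        raise TypeError(f"Model is missing required methods: {missing}")
    if not callable(processor):
        raise TypeError("Processor must be callable (define __call__)")
    return model, processor


class StubModel:
    """Placeholder with the two feature methods; returns dummy values."""

    def get_image_features(self, *args: Any, **kwargs: Any) -> list:
        return []

    def get_text_features(self, *args: Any, **kwargs: Any) -> list:
        return []


class StubProcessor:
    """Placeholder processor accepting text and images."""

    def __call__(self, text: Any = None, images: Any = None, **kwargs: Any) -> dict:
        return {}


model, processor = check_clip_compatible(lambda: (StubModel(), StubProcessor()))
```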

Example

    >>> import torch
    >>> from torchmetrics.multimodal.clip_score import CLIPScore
    >>> metric = CLIPScore(model_name_or_path="openai/clip-vit-base-patch16")
    >>> image = torch.randint(255, (3, 224, 224), generator=torch.Generator().manual_seed(42))
    >>> score = metric(image, "a photo of a cat")
    >>> score.detach().round()
    tensor(24.)

Example

    >>> import torch
    >>> from torchmetrics.multimodal.clip_score import CLIPScore
    >>> metric = CLIPScore(model_name_or_path="openai/clip-vit-base-patch16")
    >>> image1 = torch.randint(255, (3, 224, 224), generator=torch.Generator().manual_seed(42))
    >>> image2 = torch.randint(255, (3, 224, 224), generator=torch.Generator().manual_seed(43))
    >>> score = metric(image1, image2)
    >>> score.detach().round()
    tensor(99.)

Example

    >>> from torchmetrics.multimodal.clip_score import CLIPScore
    >>> metric = CLIPScore(model_name_or_path="openai/clip-vit-base-patch16")
    >>> score = metric("28-year-old chef found dead in San Francisco mall",
    ...                "A 28-year-old chef who recently moved to San Francisco was found dead.")
    >>> score.detach().round()
    tensor(91.)

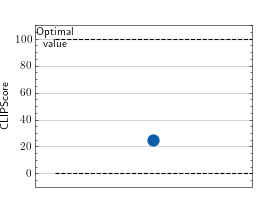

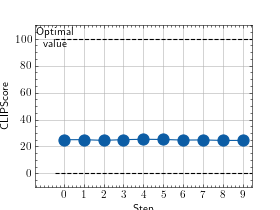

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

- val (Union[Tensor, Sequence[Tensor], None]) – Either a single result from calling metric.forward or metric.compute or a list of these results. If no value is provided, will automatically call metric.compute and plot that result.

- ax (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

    >>> # Example plotting a single value
    >>> import torch
    >>> from torchmetrics.multimodal.clip_score import CLIPScore
    >>> metric = CLIPScore(model_name_or_path="openai/clip-vit-base-patch16")
    >>> metric.update(torch.randint(255, (3, 224, 224)), "a photo of a cat")
    >>> fig_, ax_ = metric.plot()

    >>> # Example plotting multiple values
    >>> import torch
    >>> from torchmetrics.multimodal.clip_score import CLIPScore
    >>> metric = CLIPScore(model_name_or_path="openai/clip-vit-base-patch16")
    >>> values = []
    >>> for _ in range(10):
    ...     values.append(metric(torch.randint(255, (3, 224, 224)), "a photo of a cat"))
    >>> fig_, ax_ = metric.plot(values)

- update(source, target)

Update CLIP score on a batch of images and text.

- Parameters:

- source (Union[Tensor, List[Tensor], List[str], str]) – Source input. This can be:

  - Images: Either a single [N, C, H, W] tensor or a list of [C, H, W] tensors.
  - Text: Either a single caption or a list of captions.

- target (Union[Tensor, List[Tensor], List[str], str]) – Target input. This can be:

  - Images: Either a single [N, C, H, W] tensor or a list of [C, H, W] tensors.
  - Text: Either a single caption or a list of captions.

- Raises:

ValueError – If not all images have format [C, H, W]

ValueError – If the number of images and captions do not match
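The two error conditions above can be mirrored in a standalone pre-check. The sketch works on shape tuples (for a torch tensor `t`, `tuple(t.shape)`) so that it runs without third-party imports; `validate_image_shapes` and `validate_pair_counts` are hypothetical helpers, and the exact messages raised by the library may differ.

```python
from typing import Sequence, Tuple

Shape = Tuple[int, ...]


def validate_image_shapes(shapes: Sequence[Shape]) -> None:
    """Raise ValueError unless every shape has exactly three dims (C, H, W)."""
    for index, shape in enumerate(shapes):
        if len(shape) != 3:
            raise ValueError(
                "Expected all images to have format [C, H, W], "
                f"but image {index} has shape {shape}"
            )


def validate_pair_counts(num_source: int, num_target: int) -> None:
    """Raise ValueError if source and target hold different numbers of samples."""
    if num_source != num_target:
        raise ValueError(
            f"Number of source samples ({num_source}) does not match "
            f"number of target samples ({num_target})"
        )


# Two RGB images of 224 x 224 pixels paired with two captions pass both checks.
image_shapes = [(3, 224, 224), (3, 224, 224)]
captions = ["a photo of a cat", "a photo of a dog"]
validate_image_shapes(image_shapes)
validate_pair_counts(len(image_shapes), len(captions))
```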

- Return type:

Functional Interface

- torchmetrics.functional.multimodal.clip_score.clip_score(source, target, model_name_or_path='openai/clip-vit-large-patch14')

Calculates CLIP Score which is a text-to-image similarity metric.

CLIP Score is a reference-free metric that can be used to evaluate the correlation between a generated caption for an image and the actual content of the image, as well as the similarity between two texts or two images. It has been found to be highly correlated with human judgement. The metric is defined as:

\[\text{CLIPScore}(I, C) = \max(100 \cdot \cos(E_I, E_C), 0)\]

which corresponds to the cosine similarity between the visual CLIP embedding \(E_I\) for an image \(I\) and the textual CLIP embedding \(E_C\) for a caption \(C\). The score is bounded between 0 and 100, and the closer to 100 the better.

Additionally, the CLIP Score can be calculated between two inputs of the same modality:

\[\text{CLIPScore}(I_1, I_2) = \max(100 \cdot \cos(E_{I_1}, E_{I_2}), 0)\]

where \(E_{I_1}\) and \(E_{I_2}\) are the visual embeddings for images \(I_1\) and \(I_2\).

\[\text{CLIPScore}(T_1, T_2) = \max(100 \cdot \cos(E_{T_1}, E_{T_2}), 0)\]

where \(E_{T_1}\) and \(E_{T_2}\) are the textual embeddings for texts \(T_1\) and \(T_2\).
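As a worked instance of the first formula: the image-to-text example later in this section reports a score of 24.4260 for a single image and caption, which under the definition would correspond to a cosine similarity of roughly 0.244. The second case uses a made-up negative similarity of -0.1, purely for illustration, to show the clipping at zero.

```latex
\[
\max(100 \cdot 0.24426,\ 0) = 24.426,
\qquad
\max(100 \cdot (-0.1),\ 0) = \max(-10,\ 0) = 0
\]
```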

Note

Metric is not scriptable

Note

The default CLIP model and processor used in this implementation have a maximum sequence length of 77 for text inputs. If you need to process longer captions, you can use the zer0int/LongCLIP-L-Diffusers model, which has a maximum sequence length of 248.

- Parameters:

- source (Union[Tensor, List[Tensor], List[str], str]) – Source input. This can be:

  - Images: Either a single [N, C, H, W] tensor or a list of [C, H, W] tensors.
  - Text: Either a single caption or a list of captions.

- target (Union[Tensor, List[Tensor], List[str], str]) – Target input. This can be:

  - Images: Either a single [N, C, H, W] tensor or a list of [C, H, W] tensors.
  - Text: Either a single caption or a list of captions.

- model_name_or_path (Union[Literal['openai/clip-vit-base-patch16', 'openai/clip-vit-base-patch32', 'openai/clip-vit-large-patch14-336', 'openai/clip-vit-large-patch14', 'jinaai/jina-clip-v2', 'zer0int/LongCLIP-L-Diffusers', 'zer0int/LongCLIP-GmP-ViT-L-14'], Callable[[], tuple[None, None]]]) – String indicating the version of the CLIP model to use. Available models are:

  - "openai/clip-vit-base-patch16"
  - "openai/clip-vit-base-patch32"
  - "openai/clip-vit-large-patch14-336"
  - "openai/clip-vit-large-patch14"
  - "jinaai/jina-clip-v2"
  - "zer0int/LongCLIP-L-Diffusers"
  - "zer0int/LongCLIP-GmP-ViT-L-14"

  Alternatively, a callable that returns a tuple of a CLIP-compatible model and a processor instance can be passed in. By compatible, we mean that the processor's __call__ method should accept a list of strings and a list of images, and that the model should have get_image_features and get_text_features methods.

- Raises:

ModuleNotFoundError – If transformers package is not installed or version is lower than 4.10.0

ValueError – If not all images have format [C, H, W]

ValueError – If the number of images and captions do not match

- Return type:

Example

    >>> import torch
    >>> from torchmetrics.functional.multimodal import clip_score
    >>> image = torch.randint(255, (3, 224, 224), generator=torch.Generator().manual_seed(42))
    >>> score = clip_score(image, "a photo of a cat", "openai/clip-vit-base-patch16")
    >>> score.detach().round(decimals=3)
    tensor(24.4260)

Example

    >>> import torch
    >>> from torchmetrics.functional.multimodal import clip_score
    >>> image1 = torch.randint(255, (3, 224, 224), generator=torch.Generator().manual_seed(42))
    >>> image2 = torch.randint(255, (3, 224, 224), generator=torch.Generator().manual_seed(43))
    >>> score = clip_score(image1, image2, "openai/clip-vit-base-patch16")
    >>> score.detach().round(decimals=3)
    tensor(99.4860)

Example

    >>> from torchmetrics.functional.multimodal import clip_score
    >>> score = clip_score(
    ...     "28-year-old chef found dead in San Francisco mall",
    ...     "A 28-year-old chef who recently moved to San Francisco was found dead.",
    ...     "openai/clip-vit-base-patch16"
    ... )
    >>> score.detach().round(decimals=3)
    tensor(91.3950)