Translation Edit Rate (TER)

Module Interface

- class torchmetrics.text.TranslationEditRate(normalize=False, no_punctuation=False, lowercase=True, asian_support=False, return_sentence_level_score=False, **kwargs)

Calculate Translation edit rate (TER) of machine translated text with one or more references.

This implementation follows the TER implementation in SacreBLEU, which is a near-exact reimplementation of the Tercom algorithm and produces identical results on all “sane” outputs.

As input to forward and update the metric accepts the following input:

- preds (Sequence): An iterable of hypothesis corpus
- target (Sequence): An iterable of iterables of reference corpus

As output of forward and compute the metric returns the following output:

- ter (Tensor): if return_sentence_level_score=True, a corpus-level translation edit rate together with a list of sentence-level translation edit rates; otherwise only the corpus-level translation edit rate

- Parameters:

  - normalize (bool) – Whether to apply a general tokenization.
  - no_punctuation (bool) – Whether to remove punctuation from the sentences.
  - lowercase (bool) – Whether to enable case-insensitivity.
  - asian_support (bool) – Whether to process Asian characters.
  - return_sentence_level_score (bool) – Whether to return sentence-level TER.
  - kwargs (Any) – Additional keyword arguments, see Advanced metric settings for more info.
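To make the lowercase and no_punctuation flags more concrete, here is a minimal pure-Python sketch of that kind of preprocessing. It is not the library's tokenizer: the function name `preprocess` and the punctuation set are assumptions chosen for illustration, and the normalize and asian_support options are not modeled at all.

```python
import re

# Assumed punctuation set, for illustration only; the library's own
# tokenizer may treat a different set of characters as punctuation.
_PUNCT = re.compile(r"[.,?:;!\"()]")


def preprocess(sentence, lowercase=True, no_punctuation=False):
    """Hypothetical helper: apply the two flags, then split on whitespace."""
    if lowercase:
        sentence = sentence.lower()
    if no_punctuation:
        sentence = _PUNCT.sub("", sentence)
    return sentence.split()


text = "The cat, surprisingly, is on the mat!"

default_tokens = preprocess(text)
print(default_tokens)
# ['the', 'cat,', 'surprisingly,', 'is', 'on', 'the', 'mat!']

stripped_tokens = preprocess(text, lowercase=False, no_punctuation=True)
print(stripped_tokens)
# ['The', 'cat', 'surprisingly', 'is', 'on', 'the', 'mat']
```

In this sketch, punctuation left attached to a word makes that word differ from its punctuation-free counterpart in a reference, so it would count as an edit.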

Example

    >>> from torchmetrics.text import TranslationEditRate
    >>> preds = ['the cat is on the mat']
    >>> target = [['there is a cat on the mat', 'a cat is on the mat']]
    >>> ter = TranslationEditRate()
    >>> ter(preds, target)
    tensor(0.1538)
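The value 0.1538 in the example above can be checked by hand with a simplified calculation: take the smallest word-level edit distance between the hypothesis and any reference, and divide it by the average reference length. The sketch below is an assumption-laden illustration, not the library's algorithm. It uses plain Levenshtein distance over whitespace-split, lowercased words and ignores the shift operation that Tercom also performs; `word_edit_distance` and `simple_ter` are hypothetical names.

```python
def word_edit_distance(hyp, ref):
    """Word-level Levenshtein distance (insert, delete, substitute; no shifts)."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            cost = 0 if h == r else 1
            curr.append(min(prev[j] + 1,          # delete a hypothesis word
                            curr[j - 1] + 1,      # insert a reference word
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]


def simple_ter(pred, references):
    """Simplified sentence-level TER: best edit count / average reference length."""
    hyp = pred.lower().split()
    refs = [ref.lower().split() for ref in references]
    best_edits = min(word_edit_distance(hyp, ref) for ref in refs)
    avg_ref_len = sum(len(ref) for ref in refs) / len(refs)
    return best_edits / avg_ref_len


score = simple_ter(
    'the cat is on the mat',
    ['there is a cat on the mat', 'a cat is on the mat'],
)
print(round(score, 4))  # 0.1538
```

Here the closest reference is 'a cat is on the mat' (one substitution), and the two references have 7 and 6 words, so the result is 1 / 6.5. Inputs where a shift would lower the edit count can give a different value from the library.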

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

  - val (Union[Tensor, Sequence[Tensor], None]) – Either a single result from calling metric.forward or metric.compute, or a list of these results. If no value is provided, metric.compute is called automatically and its result is plotted.
  - ax (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

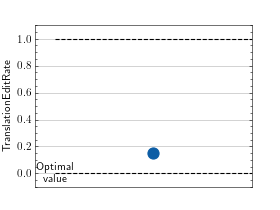

    >>> # Example plotting a single value
    >>> from torchmetrics.text import TranslationEditRate
    >>> metric = TranslationEditRate()
    >>> preds = ['the cat is on the mat']
    >>> target = [['there is a cat on the mat', 'a cat is on the mat']]
    >>> metric.update(preds, target)
    >>> fig_, ax_ = metric.plot()

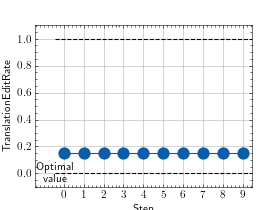

    >>> # Example plotting multiple values
    >>> from torchmetrics.text import TranslationEditRate
    >>> metric = TranslationEditRate()
    >>> preds = ['the cat is on the mat']
    >>> target = [['there is a cat on the mat', 'a cat is on the mat']]
    >>> values = []
    >>> for _ in range(10):
    ...     values.append(metric(preds, target))
    >>> fig_, ax_ = metric.plot(values)

Functional Interface

- torchmetrics.functional.text.translation_edit_rate(preds, target, normalize=False, no_punctuation=False, lowercase=True, asian_support=False, return_sentence_level_score=False)

Calculate Translation edit rate (TER) of machine translated text with one or more references.

This implementation follows the one at https://github.com/mjpost/sacrebleu/blob/master/sacrebleu/metrics/ter.py. The sacrebleu implementation is a near-exact reimplementation of the Tercom algorithm and produces identical results on all “sane” outputs.

- Parameters:

  - preds (Union[str, Sequence[str]]) – An iterable of hypothesis corpus.
  - target (Sequence[Union[str, Sequence[str]]]) – An iterable of iterables of reference corpus.
  - normalize (bool) – Whether to apply a general tokenization.
  - no_punctuation (bool) – Whether to remove punctuation from the sentences.
  - lowercase (bool) – Whether to enable case-insensitivity.
  - asian_support (bool) – Whether to process Asian characters.
  - return_sentence_level_score (bool) – Whether to return sentence-level TER.

- Return type:

- Returns:

A corpus-level translation edit rate (TER) and, if return_sentence_level_score=True, also a list of sentence-level TER scores.
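The difference between the corpus-level score and the sentence-level scores can be sketched in plain Python. The sketch assumes the corpus-level score is the total number of edits divided by the total of the per-sentence average reference lengths, rather than the mean of the sentence scores. It is a simplified illustration with hypothetical names (`edit_distance`, `ter_with_sentence_scores`), uses lowercased whitespace tokens, and omits Tercom's shift operation, so it is not the library's implementation.

```python
def edit_distance(hyp, ref):
    """Word-level Levenshtein distance (no shift operation)."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1,
                            prev[j - 1] + (0 if h == r else 1)))
        prev = curr
    return prev[-1]


def ter_with_sentence_scores(preds, target):
    """Return (corpus_score, sentence_scores) under the stated assumptions."""
    total_edits = 0
    total_ref_len = 0.0
    sentence_scores = []
    for pred, references in zip(preds, target):
        hyp = pred.lower().split()
        refs = [ref.lower().split() for ref in references]
        edits = min(edit_distance(hyp, ref) for ref in refs)
        avg_len = sum(len(ref) for ref in refs) / len(refs)
        total_edits += edits
        total_ref_len += avg_len
        sentence_scores.append(edits / avg_len if avg_len > 0 else 0.0)
    corpus_score = total_edits / total_ref_len if total_ref_len > 0 else 0.0
    return corpus_score, sentence_scores


preds = ['the cat is on the mat', 'hello world']
target = [
    ['there is a cat on the mat', 'a cat is on the mat'],
    ['hello there world'],
]
corpus_score, sentence_scores = ter_with_sentence_scores(preds, target)
print(round(corpus_score, 4))                  # 0.2105
print([round(s, 4) for s in sentence_scores])  # [0.1538, 0.3333]
```

In this sketch the corpus score (2 edits over 9.5 reference words) differs from the plain mean of the two sentence scores, because longer sentences carry more weight.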

Example

    >>> from torchmetrics.functional.text import translation_edit_rate
    >>> preds = ['the cat is on the mat']
    >>> target = [['there is a cat on the mat', 'a cat is on the mat']]
    >>> translation_edit_rate(preds, target)
    tensor(0.1538)

References

[1] A Study of Translation Edit Rate with Targeted Human Annotation by Matthew Snover, Bonnie Dorr, Richard Schwartz, Linnea Micciulla and John Makhoul