Dice Score

Module Interface

- class torchmetrics.segmentation.DiceScore(num_classes, include_background=True, average='micro', aggregation_level='samplewise', input_format='one-hot', **kwargs)

Compute Dice Score.

The metric can be used to evaluate the performance of image segmentation models. The Dice Score is defined as:

\[
DS = \frac{2 \sum_{i=1}^{N} t_i p_i}{\sum_{i=1}^{N} t_i + \sum_{i=1}^{N} p_i}
\]

where \(t_i\) and \(p_i\) are the binary target and prediction values of the \(i\)-th element and the sums run over all \(N\) elements being compared. In other words, the Dice Score is twice the overlap between the prediction and the target divided by the total number of positive elements in the two tensors, so it ranges from 0 (no overlap) to 1 (perfect agreement).
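To make the formula concrete, here is a minimal plain-Python sketch (not the torchmetrics implementation) that evaluates it for two flattened binary masks. The function name `dice_from_masks` is hypothetical, and returning NaN for two empty masks is only this sketch's convention.

```python
def dice_from_masks(target, preds):
    """Evaluate DS = 2 * sum(t_i * p_i) / (sum(t_i) + sum(p_i)) for binary masks."""
    if len(target) != len(preds):
        raise ValueError("target and preds must have the same number of elements")
    overlap = sum(t * p for t, p in zip(target, preds))  # sum_i t_i p_i
    total = sum(target) + sum(preds)  # sum_i t_i + sum_i p_i
    if total == 0:
        # Both masks are empty: the ratio is 0/0 and needs a convention.
        return float("nan")
    return 2 * overlap / total


# Two 2x3 masks flattened row by row: 2 overlapping positives, 3 + 3 positives in total.
target = [1, 1, 0, 1, 0, 0]
preds = [1, 1, 1, 0, 0, 0]
print(dice_from_masks(target, preds))  # 2 * 2 / (3 + 3) = 0.666...
```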

As input to `forward` and `update` the metric accepts the following input:

- `preds` (`Tensor`): A one-hot boolean tensor of shape `(N, C, ...)`, with `N` being the number of samples and `C` the number of classes. Alternatively, an integer tensor of shape `(N, ...)` can be provided, where the integer values correspond to the class index. The input type can be controlled with the `input_format` argument.
- `target` (`Tensor`): A one-hot boolean tensor of shape `(N, C, ...)`, with `N` being the number of samples and `C` the number of classes. Alternatively, an integer tensor of shape `(N, ...)` can be provided, where the integer values correspond to the class index. The input type can be controlled with the `input_format` argument.
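The index and one-hot formats carry the same information: an index map of shape `(N, ...)` becomes a one-hot map of shape `(N, C, ...)` by adding a class axis. The plain-Python sketch below shows this for a single flattened sample; the helper name `index_to_one_hot` is hypothetical. In PyTorch the same conversion is commonly done with `torch.nn.functional.one_hot`, which appends the class axis last, followed by `movedim(-1, 1)` to move it to position 1.

```python
def index_to_one_hot(indices, num_classes):
    """Turn a flat list of class indices into C binary masks (one per class)."""
    masks = [[0] * len(indices) for _ in range(num_classes)]
    for position, cls in enumerate(indices):
        if not 0 <= cls < num_classes:
            raise ValueError(f"class index {cls} outside [0, {num_classes})")
        masks[cls][position] = 1
    return masks


# One sample with 4 pixels and 3 classes (index format, shape (4,)).
index_target = [0, 2, 2, 1]
one_hot_target = index_to_one_hot(index_target, num_classes=3)  # shape (3, 4)
print(one_hot_target)  # [[1, 0, 0, 0], [0, 0, 0, 1], [0, 1, 1, 0]]
```

Every pixel ends up positive in exactly one class mask, which is why the two formats can be mixed with `input_format="mixed"`.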

As output to `forward` and `compute` the metric returns the following output:

- `gds` (`Tensor`): The Dice score. If `average` is set to `None` or `"none"`, the output is a tensor of shape `(C,)` with the Dice score for each class. If `average` is set to `"micro"`, `"macro"` or `"weighted"`, the output is a scalar tensor. The score is averaged over all samples.
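The averaging modes differ in where the division happens. The plain-Python sketch below combines per-class numerators (`2 * overlap`) and denominators (`|target| + |preds|`) for one sample. It assumes that `"weighted"` weights each class by its target support and scores classes with a zero denominator as 0.0 for simplicity; the function name `average_dice` is hypothetical, and the torchmetrics source is the authority on the exact weighting and `zero_division` handling.

```python
def average_dice(numerators, denominators, supports, average="micro"):
    """Combine per-class Dice terms for one sample.

    numerators[c]   = 2 * sum_i t_i p_i for class c
    denominators[c] = sum_i t_i + sum_i p_i for class c
    supports[c]     = sum_i t_i for class c (only used by "weighted")
    """
    if average == "micro":
        # Pool all classes first, then divide once.
        total = sum(denominators)
        return sum(numerators) / total if total else 0.0
    # Per-class scores; zero denominators are scored 0.0 in this sketch only.
    per_class = [n / d if d else 0.0 for n, d in zip(numerators, denominators)]
    if average in (None, "none"):
        return per_class
    if average == "macro":
        return sum(per_class) / len(per_class)
    if average == "weighted":
        total_support = sum(supports)
        if total_support == 0:
            return 0.0
        return sum(s / total_support * dc for s, dc in zip(supports, per_class))
    raise ValueError(f"unknown average: {average!r}")


# Class 0: overlap 1, |t| = 1, |p| = 1 -> 2 / 2 = 1.0
# Class 1: overlap 1, |t| = 3, |p| = 1 -> 2 / 4 = 0.5
num, den, sup = [2, 2], [2, 4], [1, 3]
print(average_dice(num, den, sup, "none"))  # [1.0, 0.5]
print(average_dice(num, den, sup, "micro"))  # 4 / 6 = 0.666...
print(average_dice(num, den, sup, "macro"))  # 0.75
print(average_dice(num, den, sup, "weighted"))  # 0.25 * 1.0 + 0.75 * 0.5 = 0.625
```

Micro averaging lets large classes dominate through their larger denominators, while macro averaging gives every class the same vote.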

- Parameters:

  - `num_classes` (`int`) – The number of classes in the segmentation problem.
  - `include_background` (`bool`) – Whether to include the background class in the computation.
  - `average` (`Optional[Literal['micro', 'macro', 'weighted', 'none']]`) – The method used to average the Dice score across classes. Options are `"micro"`, `"macro"`, `"weighted"`, `"none"` or `None`.
  - `aggregation_level` (`Optional[Literal['samplewise', 'global']]`) – The level at which to aggregate the Dice score. Options are `"samplewise"` or `"global"`. For `"samplewise"` the Dice score is computed for each sample and then averaged. For `"global"` the Dice score is computed globally over all samples.
  - `input_format` (`Literal['one-hot', 'index', 'mixed']`) – What kind of input the metric receives. Choose between `"one-hot"` for one-hot encoded tensors, `"index"` for index tensors, or `"mixed"` for one one-hot encoded tensor and one index tensor.
  - `zero_division` – The value to return when there is a division by zero. Options are `1.0`, `0.0`, `"warn"` or `"nan"`. Setting it to `"warn"` behaves like `0.0` but also emits a warning.
  - `kwargs` (`Any`) – Additional keyword arguments, see Advanced metric settings for more info.
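The difference between the two `aggregation_level` options, and the role of `zero_division`, can be sketched in plain Python for micro-averaged terms collected per sample. The helpers `safe_divide` and `aggregate`, and the choice of `1.0` as the sketch's default `zero_division`, are assumptions for illustration, not the torchmetrics internals.

```python
import warnings


def safe_divide(num, den, zero_division=1.0):
    """Return num / den, or the zero_division fallback when den == 0."""
    if den != 0:
        return num / den
    if zero_division == "warn":
        warnings.warn("Division by zero in Dice score; returning 0.0")
        return 0.0
    if zero_division == "nan":
        return float("nan")
    return float(zero_division)


def aggregate(per_sample_num, per_sample_den, aggregation_level="samplewise", zero_division=1.0):
    """Aggregate micro-averaged Dice terms collected per sample.

    per_sample_num[s] = 2 * overlap of sample s
    per_sample_den[s] = |target_s| + |preds_s|
    """
    if aggregation_level == "samplewise":
        # Score each sample on its own, then take the mean of the scores.
        scores = [safe_divide(n, d, zero_division) for n, d in zip(per_sample_num, per_sample_den)]
        return sum(scores) / len(scores)
    if aggregation_level == "global":
        # Pool all samples into one numerator and one denominator.
        return safe_divide(sum(per_sample_num), sum(per_sample_den), zero_division)
    raise ValueError(f"unknown aggregation_level: {aggregation_level!r}")


# Sample 0: large object, 2 * 8 / (10 + 10) = 0.8; sample 1: tiny missed object, 2 * 0 / (1 + 1) = 0.0.
nums, dens = [16, 0], [20, 2]
print(aggregate(nums, dens, "samplewise"))  # (0.8 + 0.0) / 2 = 0.4
print(aggregate(nums, dens, "global"))  # 16 / 22 = 0.727...
```

Samplewise aggregation weights every image equally, so a single small, missed object lowers the score far more than under global aggregation, where pixels are pooled across samples.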

- Raises:

  - `ValueError` – If `num_classes` is not a positive integer.
  - `ValueError` – If `include_background` is not a boolean.
  - `ValueError` – If `average` is not one of `"micro"`, `"macro"`, `"weighted"`, `"none"` or `None`.
  - `ValueError` – If `input_format` is not one of `"one-hot"`, `"index"` or `"mixed"`.
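The conditions listed above can be mirrored by a small validation routine. The sketch below is illustrative only: `validate_dice_args` is a hypothetical helper, and its error messages are not the ones torchmetrics produces.

```python
def validate_dice_args(num_classes, include_background, average, input_format):
    """Raise ValueError for the invalid arguments listed under Raises (illustrative only)."""
    # bool is a subclass of int, so reject it explicitly.
    if not isinstance(num_classes, int) or isinstance(num_classes, bool) or num_classes <= 0:
        raise ValueError(f"Expected `num_classes` to be a positive integer, got {num_classes!r}")
    if not isinstance(include_background, bool):
        raise ValueError(f"Expected `include_background` to be a boolean, got {include_background!r}")
    if average not in ("micro", "macro", "weighted", "none", None):
        raise ValueError(f"Expected `average` to be one of 'micro', 'macro', 'weighted', 'none' or None, got {average!r}")
    if input_format not in ("one-hot", "index", "mixed"):
        raise ValueError(f"Expected `input_format` to be one of 'one-hot', 'index' or 'mixed', got {input_format!r}")


# Valid arguments pass silently; an invalid one raises.
validate_dice_args(5, True, "micro", "one-hot")
try:
    validate_dice_args(0, True, "micro", "one-hot")
except ValueError as err:
    print(err)  # Expected `num_classes` to be a positive integer, got 0
```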

Example

>>> from torch import randint
>>> from torchmetrics.segmentation import DiceScore
>>> preds = randint(0, 2, (4, 5, 16, 16))  # 4 samples, 5 classes, 16x16 prediction
>>> target = randint(0, 2, (4, 5, 16, 16))  # 4 samples, 5 classes, 16x16 target
>>> dice_score = DiceScore(num_classes=5, average="micro")
>>> dice_score(preds, target)
tensor(0.4941)
>>> dice_score = DiceScore(num_classes=5, average="none")
>>> dice_score(preds, target)
tensor([0.4860, 0.4999, 0.5014, 0.4885, 0.4915])

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

  - `val` (`Union[Tensor, Sequence[Tensor], None]`) – Either a single result from calling `metric.forward` or `metric.compute`, or a list of these results. If no value is provided, `metric.compute` is called automatically and that result is plotted.
  - `ax` (`Optional[Axes]`) – A matplotlib axis object. If provided, the plot is added to that axis.

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

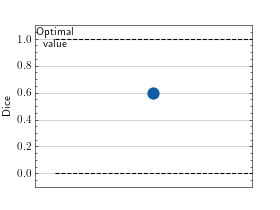

>>> # Example plotting a single value
>>> import torch
>>> from torchmetrics.segmentation import DiceScore
>>> metric = DiceScore(num_classes=3)
>>> metric.update(torch.randint(0, 2, (10, 3, 128, 128)), torch.randint(0, 2, (10, 3, 128, 128)))
>>> fig_, ax_ = metric.plot()

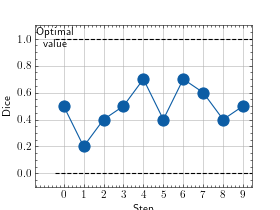

>>> # Example plotting multiple values
>>> import torch
>>> from torchmetrics.segmentation import DiceScore
>>> metric = DiceScore(num_classes=3)
>>> values = []
>>> for _ in range(10):
...     values.append(
...         metric(torch.randint(0, 2, (10, 3, 128, 128)), torch.randint(0, 2, (10, 3, 128, 128)))
...     )
>>> fig_, ax_ = metric.plot(values)

Functional Interface

- torchmetrics.functional.segmentation.dice_score(preds, target, num_classes, include_background=True, average='micro', input_format='one-hot', aggregation_level='samplewise')

Compute the Dice score for semantic segmentation.

- Parameters:

  - `preds` (`Tensor`) – The predictions, either one-hot encoded with shape `(N, C, ...)` or as class indices with shape `(N, ...)`, depending on `input_format`.
  - `target` (`Tensor`) – The ground truth, either one-hot encoded with shape `(N, C, ...)` or as class indices with shape `(N, ...)`, depending on `input_format`.
  - `num_classes` (`int`) – The number of classes in the segmentation problem.
  - `include_background` (`bool`) – Whether to include the background class in the computation.
  - `average` (`Optional[Literal['micro', 'macro', 'weighted', 'none']]`) – The method used to average the Dice score across classes. Options are `"micro"`, `"macro"`, `"weighted"`, `"none"` or `None`.
  - `input_format` (`Literal['one-hot', 'index', 'mixed']`) – What kind of input the function receives. Choose between `"one-hot"` for one-hot encoded tensors, `"index"` for index tensors, or `"mixed"` for one one-hot encoded tensor and one index tensor.
  - `aggregation_level` (`Optional[Literal['samplewise', 'global']]`) – The level at which to aggregate the Dice score. Options are `"samplewise"` or `"global"`. For `"samplewise"` the Dice score is computed for each sample. For `"global"` the Dice score is computed globally over all samples.

- Return type:

`Tensor`

- Returns:

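The Dice score. With `aggregation_level="samplewise"` the result has one entry per sample (and one value per class for `average="none"`); with `aggregation_level="global"` the sample dimension has size 1.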

- Example (with one-hot encoded tensors):

>>> import torch
>>> from torch import randint
>>> from torchmetrics.functional.segmentation import dice_score
>>> _ = torch.manual_seed(42)
>>> preds = randint(0, 2, (4, 5, 16, 16))  # 4 samples, 5 classes, 16x16 prediction
>>> target = randint(0, 2, (4, 5, 16, 16))  # 4 samples, 5 classes, 16x16 target
>>> # per-sample dice score, micro averaged over all classes
>>> dice_score(preds, target, num_classes=5, average="micro")
tensor([0.4842, 0.4968, 0.5053, 0.4902])
>>> # dice score per sample and class
>>> dice_score(preds, target, num_classes=5, average="none")
tensor([[0.4724, 0.5185, 0.4710, 0.5062, 0.4500],
        [0.4571, 0.4980, 0.5191, 0.4380, 0.5649],
        [0.5428, 0.4904, 0.5358, 0.4830, 0.4724],
        [0.4715, 0.4925, 0.4797, 0.5267, 0.4788]])
>>> # global dice score over all samples with macro averaging
>>> dice_score(preds, target, num_classes=5, average="macro", aggregation_level="global")
tensor([0.4942])

- Example (with index tensors):

>>> import torch
>>> from torch import randint
>>> from torchmetrics.functional.segmentation import dice_score
>>> _ = torch.manual_seed(42)
>>> preds = randint(0, 5, (4, 16, 16))  # 4 samples, 5 classes, 16x16 prediction
>>> target = randint(0, 5, (4, 16, 16))  # 4 samples, 5 classes, 16x16 target
>>> # per-sample dice score, micro averaged over all classes
>>> dice_score(preds, target, num_classes=5, average="micro", input_format="index")
tensor([0.2031, 0.1914, 0.2266, 0.1641])
>>> # dice score per sample and class
>>> dice_score(preds, target, num_classes=5, average="none", input_format="index")
tensor([[0.1731, 0.1667, 0.2400, 0.2424, 0.1947],
        [0.2245, 0.2247, 0.2321, 0.1132, 0.1682],
        [0.2500, 0.2476, 0.1887, 0.1818, 0.2718],
        [0.1308, 0.1800, 0.1980, 0.1607, 0.1522]])
>>> # global dice score over all samples with macro averaging
>>> dice_score(preds, target, num_classes=5, average="macro", aggregation_level="global", input_format="index")
tensor([0.1965])