Metric Tracker

Module Interface

- class torchmetrics.wrappers.MetricTracker(metric, maximize=None)

A wrapper class that helps keep track of a metric or metric collection over time.

The wrapper implements the standard .update(), .compute(), and .reset() methods, which simply call the corresponding method of the currently tracked metric. In addition, the following are provided:

- MetricTracker.n_steps: number of metrics being tracked
- MetricTracker.increment(): initialize a new metric for tracking
- MetricTracker.compute_all(): get the metric value for all steps
- MetricTracker.best_metric(): return the best value

Out of the box, this wrapper fully supports a base metric that is a single Metric, a MetricCollection, or another MetricWrapper wrapped around a metric. However, multiple layers of nesting, such as a Metric inside a MetricWrapper inside a MetricCollection, are not fully supported. In particular, .best_metric cannot automatically compute the best metric and step for such nested structures.

- Parameters:

metric (Union[Metric, MetricCollection]) – instance of a torchmetrics.Metric or torchmetrics.MetricCollection to keep track of at each timestep.

maximize (Union[bool, list[bool], None]) – either a single bool or a list of bools indicating whether higher metric values are better (True) or lower values are better (False).

- Example (single metric):

>>> from torch import randint
>>> from torchmetrics.wrappers import MetricTracker
>>> from torchmetrics.classification import MulticlassAccuracy
>>> tracker = MetricTracker(MulticlassAccuracy(num_classes=10, average='micro'))
>>> for epoch in range(5):
...     tracker.increment()
...     for batch_idx in range(5):
...         tracker.update(randint(10, (100,)), randint(10, (100,)))
...     print(f"current acc={tracker.compute()}")
current acc=0.1120000034570694
current acc=0.08799999952316284
current acc=0.12600000202655792
current acc=0.07999999821186066
current acc=0.10199999809265137
>>> best_acc, which_epoch = tracker.best_metric(return_step=True)
>>> best_acc
0.1260...
>>> which_epoch
2
>>> tracker.compute_all()
tensor([0.1120, 0.0880, 0.1260, 0.0800, 0.1020])

- Example (multiple metrics using MetricCollection):

>>> from torch import randn
>>> from torchmetrics.wrappers import MetricTracker
>>> from torchmetrics import MetricCollection
>>> from torchmetrics.regression import MeanSquaredError, ExplainedVariance
>>> tracker = MetricTracker(MetricCollection([MeanSquaredError(), ExplainedVariance()]), maximize=[False, True])
>>> for epoch in range(5):
...     tracker.increment()
...     for batch_idx in range(5):
...         tracker.update(randn(100), randn(100))
...     print(f"current stats={tracker.compute()}")
current stats={'MeanSquaredError': tensor(2.3292), 'ExplainedVariance': tensor(-0.9516)}
current stats={'MeanSquaredError': tensor(2.1370), 'ExplainedVariance': tensor(-1.0775)}
current stats={'MeanSquaredError': tensor(2.1695), 'ExplainedVariance': tensor(-0.9945)}
current stats={'MeanSquaredError': tensor(2.1072), 'ExplainedVariance': tensor(-1.1878)}
current stats={'MeanSquaredError': tensor(2.0562), 'ExplainedVariance': tensor(-1.0754)}
>>> from pprint import pprint
>>> best_res, which_epoch = tracker.best_metric(return_step=True)
>>> pprint(best_res)
{'ExplainedVariance': -0.951...,
 'MeanSquaredError': 2.056...}
>>> which_epoch
{'MeanSquaredError': 4, 'ExplainedVariance': 0}
>>> pprint(tracker.compute_all())
{'ExplainedVariance': tensor([-0.9516, -1.0775, -0.9945, -1.1878, -1.0754]),
 'MeanSquaredError': tensor([2.3292, 2.1370, 2.1695, 2.1072, 2.0562])}

- best_metric(return_step=False)

Return the best metric value out of all tracked steps: the highest value where maximize is True, the lowest where it is False.

- Parameters:

return_step (bool) – If True, also return the step with the best metric value.

- Return type:

Union[None, float, Tensor, tuple[Union[int, float, Tensor], Union[int, float, Tensor]], tuple[None, None], dict[str, Optional[float]], tuple[dict[str, Optional[float]], dict[str, Optional[int]]]]

- Returns:

Either a single value or a tuple, depending on the value of return_step and the object being tracked.

- If a single metric is being tracked and return_step=False, a single value is returned.
- If a single metric is being tracked and return_step=True, a 2-element tuple is returned, where the first element is the optimal value and the second is the corresponding step.
- If a metric collection is being tracked and return_step=False, a single dict is returned, whose keys correspond to the metrics in the collection and whose values are the optimal metric values.
- If a metric collection is being tracked and return_step=True, a 2-element tuple of dicts is returned, both keyed by the metrics in the collection. The values of the first dict are the optimal values and the values of the second dict are the corresponding steps.

In addition, the value may in all cases be None if the underlying metric does not have a well-defined notion of optimality, or if a nested structure of metrics is being tracked.

- compute_all()

Compute the metric value for all tracked metrics.

- Return type:

- Returns:

By default, the results from all increments are stacked into a single tensor if the tracked base object is a single metric. If a metric collection is tracked, a dict of stacked tensors is returned. If stacking fails, a list of the computed results is returned instead.

- Raises:

ValueError – If self.increment has not been called before this method is called.

- increment()

Create a new instance of the input metric that will be updated next.

- Return type:

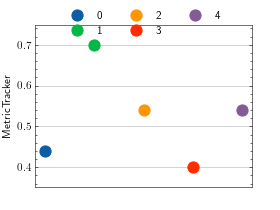

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

val (Union[Tensor, Sequence[Tensor], None]) – Either a single result from calling metric.forward or metric.compute, or a list of these results. If no value is provided, metric.compute is called automatically and its result is plotted.

ax (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

>>> # Example plotting a single value
>>> import torch
>>> from torchmetrics.wrappers import MetricTracker
>>> from torchmetrics.classification import BinaryAccuracy
>>> tracker = MetricTracker(BinaryAccuracy(), maximize=True)
>>> for epoch in range(5):
...     tracker.increment()
...     for batch_idx in range(5):
...         tracker.update(torch.randint(2, (10,)), torch.randint(2, (10,)))
>>> fig_, ax_ = tracker.plot()  # plot all epochs