Learned Perceptual Image Patch Similarity (LPIPS)

Module Interface

- class torchmetrics.image.lpip.LearnedPerceptualImagePatchSimilarity(net_type='alex', reduction='mean', normalize=False, **kwargs)

The Learned Perceptual Image Patch Similarity (LPIPS) calculates the perceptual similarity between two images.

LPIPS essentially computes the similarity between the activations of two image patches for some pre-defined network. This measure has been shown to match human perception well. A low LPIPS score means that the image patches are perceptually similar.
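To make the idea of comparing activations concrete, here is a minimal, framework-free sketch. It assumes the commonly described LPIPS recipe: unit-normalize the channel vector at each spatial position, take a weighted squared difference, average over positions, and sum over layers. The function names, the toy activations, and the per-channel weights are all hypothetical; this is not the torchmetrics implementation and uses no real network.

```python
import math


def unit_normalize(vec, eps=1e-10):
    """Scale a channel vector to unit L2 norm (eps avoids division by zero)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / (norm + eps) for v in vec]


def toy_lpips(feats_a, feats_b, weights):
    """LPIPS-style distance over hand-made activations.

    feats_a / feats_b: one entry per layer; each layer is a list of spatial
    positions, and each position is a list of channel activations.
    weights: one non-negative per-channel weight list per layer (made-up
    stand-ins for learned weights).
    """
    total = 0.0
    for layer_a, layer_b, w in zip(feats_a, feats_b, weights):
        layer_sum = 0.0
        for pos_a, pos_b in zip(layer_a, layer_b):
            na, nb = unit_normalize(pos_a), unit_normalize(pos_b)
            layer_sum += sum(wc * (x - y) ** 2 for wc, x, y in zip(w, na, nb))
        total += layer_sum / len(layer_a)  # average over spatial positions
    return total


# Two toy "layers" with two spatial positions each; all values are invented.
a = [[[1.0, 0.0], [0.0, 2.0]], [[3.0, 4.0, 0.0], [1.0, 1.0, 1.0]]]
b = [[[0.0, 1.0], [0.0, 2.0]], [[3.0, 4.0, 0.0], [1.0, 1.0, 1.0]]]
w = [[0.5, 0.5], [1.0, 1.0, 1.0]]

print(toy_lpips(a, a, w))  # identical inputs give 0.0
print(toy_lpips(a, b, w))  # differing inputs give a positive score
```

Because the score is a distance, lower values indicate more similar patches, which matches the interpretation given above.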

Both input image patches are expected to have shape (N, 3, H, W). The minimum size of H, W depends on the chosen backbone (see the net_type argument).

Hint

Using this metric requires the torchvision package to be installed. Either install it with pip install torchmetrics[image] or pip install torchvision.

As input to forward and update, the metric accepts the following input:

img1 (Tensor): tensor with images of shape (N, 3, H, W)

img2 (Tensor): tensor with images of shape (N, 3, H, W)

As output of forward and compute, the metric returns the following output:

lpips (Tensor): returns float scalar tensor with average LPIPS value over samples

- Parameters:

net_type (Literal['vgg', 'alex', 'squeeze']) – str indicating the backbone network type to use. Choose between 'alex', 'vgg' or 'squeeze'.

reduction (Optional[Literal['mean', 'sum', 'none']]) – str indicating how to reduce over the batch dimension. Choose between 'sum', 'mean', 'none' or None.

normalize (bool) – by default this is False, meaning that the input is expected to be in the [-1, 1] range. If set to True, the input is instead expected to be in the [0, 1] range.

kwargs (Any) – Additional keyword arguments, see Advanced metric settings for more info.

- Raises:

ModuleNotFoundError – If the torchvision package is not installed

ValueError – If net_type is not one of "vgg", "alex" or "squeeze"

ValueError – If reduction is not one of "mean", "sum", "none" or None
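The reduction options listed under Parameters can be pictured with a small plain-Python sketch. The helper name reduce_scores and the per-sample values are hypothetical, and the sketch only assumes what the option names suggest: 'mean' averages over the batch, 'sum' adds the scores, and 'none' or None keeps one score per sample.

```python
def reduce_scores(scores, reduction="mean"):
    """Hypothetical helper: collapse per-sample scores as the option names suggest."""
    if reduction == "mean":
        return sum(scores) / len(scores)
    if reduction == "sum":
        return sum(scores)
    if reduction in ("none", None):
        return list(scores)  # one value per sample, no reduction
    raise ValueError(f"Unsupported reduction: {reduction!r}")


per_sample = [0.25, 0.75]  # made-up per-image LPIPS values

print(reduce_scores(per_sample, "mean"))  # 0.5
print(reduce_scores(per_sample, "sum"))   # 1.0
print(reduce_scores(per_sample, "none"))  # [0.25, 0.75]
```

With 'none', the result has one entry per image in the batch, which is why the second example below returns a tensor with two values.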

Example

    >>> from torch import rand
    >>> from torchmetrics.image.lpip import LearnedPerceptualImagePatchSimilarity
    >>> lpips = LearnedPerceptualImagePatchSimilarity(net_type='squeeze')
    >>> # LPIPS needs the images to be in the [-1, 1] range.
    >>> img1 = (rand(10, 3, 100, 100) * 2) - 1
    >>> img2 = (rand(10, 3, 100, 100) * 2) - 1
    >>> lpips(img1, img2)
    tensor(0.1024)

    >>> from torch import rand, Generator
    >>> from torchmetrics.image.lpip import LearnedPerceptualImagePatchSimilarity
    >>> gen = Generator().manual_seed(42)
    >>> lpips = LearnedPerceptualImagePatchSimilarity(net_type='squeeze', reduction='none')
    >>> # LPIPS needs the images to be in the [-1, 1] range.
    >>> img1 = (rand(2, 3, 100, 100, generator=gen) * 2) - 1
    >>> img2 = (rand(2, 3, 100, 100, generator=gen) * 2) - 1
    >>> lpips(img1, img2)
    tensor([0.1024, 0.0938])

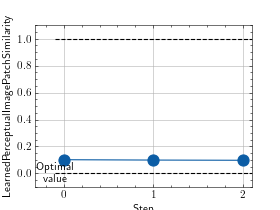

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

val (Union[Tensor, Sequence[Tensor], None]) – Either a single result from calling metric.forward or metric.compute, or a list of these results. If no value is provided, metric.compute is called automatically and its result is plotted.

ax (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

    >>> # Example plotting a single value
    >>> import torch
    >>> from torchmetrics.image.lpip import LearnedPerceptualImagePatchSimilarity
    >>> metric = LearnedPerceptualImagePatchSimilarity(net_type='squeeze')
    >>> metric.update(torch.rand(10, 3, 100, 100), torch.rand(10, 3, 100, 100))
    >>> fig_, ax_ = metric.plot()

    >>> # Example plotting multiple values
    >>> import torch
    >>> from torchmetrics.image.lpip import LearnedPerceptualImagePatchSimilarity
    >>> metric = LearnedPerceptualImagePatchSimilarity(net_type='squeeze')
    >>> values = []
    >>> for _ in range(3):
    ...     values.append(metric(torch.rand(10, 3, 100, 100), torch.rand(10, 3, 100, 100)))
    >>> fig_, ax_ = metric.plot(values)

Functional Interface

- torchmetrics.functional.image.learned_perceptual_image_patch_similarity(img1, img2, net_type='alex', reduction='mean', normalize=False)

The Learned Perceptual Image Patch Similarity (LPIPS) calculates the perceptual similarity between two images.

LPIPS essentially computes the similarity between the activations of two image patches for some pre-defined network. This measure has been shown to match human perception well. A low LPIPS score means that the image patches are perceptually similar.

Both input image patches are expected to have shape (N, 3, H, W). The minimum size of H, W depends on the chosen backbone (see the net_type argument).

- Parameters:

net_type (Literal['alex', 'vgg', 'squeeze']) – str indicating the backbone network type to use. Choose between 'alex', 'vgg' or 'squeeze'.

reduction (Optional[Literal['sum', 'mean', 'none']]) – str indicating how to reduce over the batch dimension. Choose between 'sum', 'mean', 'none' or None.

normalize (bool) – by default this is False, meaning that the input is expected to be in the [-1, 1] range. If set to True, the input is instead expected to be in the [0, 1] range.

- Return type:

Tensor
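The two input ranges described for normalize are related by a simple affine map. The sketch below assumes that setting normalize=True amounts to rescaling [0, 1] inputs with 2 * x - 1, which is what the two documented ranges imply. The helper names are hypothetical and plain Python lists stand in for image tensors.

```python
def to_signed_range(pixels):
    """Map values from [0, 1] to [-1, 1] with the affine transform 2 * x - 1."""
    return [2.0 * p - 1.0 for p in pixels]


def to_unit_range(pixels):
    """Inverse map from [-1, 1] back to [0, 1]."""
    return [(p + 1.0) / 2.0 for p in pixels]


row = [0.0, 0.25, 0.5, 1.0]  # made-up pixel values in [0, 1]
signed = to_signed_range(row)

print(signed)                        # [-1.0, -0.5, 0.0, 1.0]
print(to_unit_range(signed) == row)  # True
```

In practice this means images already scaled to [0, 1] can either be passed with normalize=True or rescaled manually and passed with the default normalize=False.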

Example

    >>> from torch import rand
    >>> from torchmetrics.functional.image.lpips import learned_perceptual_image_patch_similarity
    >>> img1 = (rand(10, 3, 100, 100) * 2) - 1
    >>> img2 = (rand(10, 3, 100, 100) * 2) - 1
    >>> learned_perceptual_image_patch_similarity(img1, img2, net_type='squeeze')
    tensor(0.1005)

    >>> from torch import rand, Generator
    >>> from torchmetrics.functional.image.lpips import learned_perceptual_image_patch_similarity
    >>> gen = Generator().manual_seed(42)
    >>> img1 = (rand(2, 3, 100, 100, generator=gen) * 2) - 1
    >>> img2 = (rand(2, 3, 100, 100, generator=gen) * 2) - 1
    >>> learned_perceptual_image_patch_similarity(img1, img2, net_type='squeeze', reduction='none')
    tensor([0.1024, 0.0938])