Adjusted Mutual Information Score

Module Interface

- class torchmetrics.clustering.AdjustedMutualInfoScore(average_method='arithmetic', **kwargs)

Compute Adjusted Mutual Information Score.

\[AMI(U,V) = \frac{MI(U,V) - E(MI(U,V))}{M_p(H(U), H(V)) - E(MI(U,V))}\]

where \(U\) is a tensor of target values, \(V\) is a tensor of predictions, \(H(U)\) and \(H(V)\) are the entropies of the two clusterings, \(M_p(H(U), H(V))\) is the generalized mean of order \(p\) of those entropies (selected by `average_method`), \(MI(U,V)\) is the mutual information score between clusters \(U\) and \(V\), and \(E(MI(U,V))\) is the expected mutual information between random clusterings with the same cluster sizes. The metric is symmetric, therefore swapping \(U\) and \(V\) yields the same score.
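To make each term of the formula concrete, here is a minimal stdlib-only sketch that computes AMI from scratch: contingency counts give \(MI(U,V)\), cluster sizes give \(H(U)\) and \(H(V)\), \(E(MI(U,V))\) is taken under the hypergeometric model (random labelings with fixed cluster sizes), and `average_method` picks the normalizing mean. It is an illustrative re-implementation, not TorchMetrics' code. The function `adjusted_mutual_info` and its helpers are hypothetical, and both the single-cluster convention and the epsilon guard on the denominator are assumptions about edge-case handling.

```python
import math
import sys
from collections import Counter
from typing import Hashable, List, Sequence


def _entropy(sizes: List[int], n: int) -> float:
    """Entropy H (natural log) of a clustering, given its cluster sizes."""
    return -sum(s / n * math.log(s / n) for s in sizes)


def _log_fact(k: int) -> float:
    """log(k!) via log-gamma, which stays finite for large k."""
    return math.lgamma(k + 1)


def _expected_mi(a: List[int], b: List[int], n: int) -> float:
    """E(MI(U,V)) for random clusterings with cluster sizes a and b (hypergeometric model)."""
    emi = 0.0
    for ai in a:
        for bj in b:
            # Only overlaps n_ij inside the hypergeometric support contribute (n_ij = 0 adds 0).
            for nij in range(max(1, ai + bj - n), min(ai, bj) + 1):
                log_p = (
                    _log_fact(ai) + _log_fact(bj) + _log_fact(n - ai) + _log_fact(n - bj)
                    - _log_fact(n) - _log_fact(nij) - _log_fact(ai - nij)
                    - _log_fact(bj - nij) - _log_fact(n - ai - bj + nij)
                )
                emi += nij / n * math.log(n * nij / (ai * bj)) * math.exp(log_p)
    return emi


def _generalized_mean(h_u: float, h_v: float, method: str) -> float:
    """M_p(H(U), H(V)) for the four documented average_method values."""
    means = {
        "min": min(h_u, h_v),
        "geometric": math.sqrt(h_u * h_v),
        "arithmetic": (h_u + h_v) / 2,
        "max": max(h_u, h_v),
    }
    if method not in means:
        raise ValueError(f"unknown average_method: {method!r}")
    return means[method]


def adjusted_mutual_info(
    preds: Sequence[Hashable], target: Sequence[Hashable], average_method: str = "arithmetic"
) -> float:
    """Hypothetical pure-Python AMI; U = target and V = preds, as in the formula."""
    if len(preds) != len(target) or len(target) == 0:
        raise ValueError("preds and target must be non-empty and of equal length")
    n = len(target)
    u_sizes, v_sizes = Counter(target), Counter(preds)
    if len(u_sizes) == len(v_sizes) == 1:
        return 1.0  # assumption: two single-cluster labelings count as perfect agreement
    # MI(U,V) from the contingency counts n_ij
    joint = Counter(zip(target, preds))
    mi = sum(c / n * math.log(n * c / (u_sizes[u] * v_sizes[v])) for (u, v), c in joint.items())
    a, b = list(u_sizes.values()), list(v_sizes.values())
    emi = _expected_mi(a, b, n)
    denom = _generalized_mean(_entropy(a, n), _entropy(b, n), average_method) - emi
    # Assumption: keep the denominator's sign but bound its magnitude away from zero
    eps = sys.float_info.epsilon
    denom = min(denom, -eps) if denom < 0 else max(denom, eps)
    return (mi - emi) / denom


print(adjusted_mutual_info([2, 1, 0, 1, 0], [0, 2, 1, 1, 0]))
```

On the labels used in the examples of this page, the sketch evaluates to about -0.25 for every `average_method` (both clusterings here have the same entropy, so all four means coincide), and swapping the two arguments gives the same value.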

This clustering metric is an extrinsic measure because it requires ground truth clustering labels, which may not be available in practice, since clustering is generally used for unsupervised learning.

As input to `forward` and `update` the metric accepts the following input:

- `preds` (`Tensor`): single integer tensor with shape `(N,)` with predicted cluster labels
- `target` (`Tensor`): single integer tensor with shape `(N,)` with ground truth cluster labels
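Because `preds` and `target` are plain label vectors of shape `(N,)`, mutual-information-based scores start from a table counting how often each (target cluster, predicted cluster) pair occurs. The following sketch builds that contingency table in pure Python; the helper `contingency_table` is hypothetical (not a TorchMetrics function), and Python lists stand in for tensors.

```python
from typing import List, Sequence


def contingency_table(preds: Sequence[int], target: Sequence[int]) -> List[List[int]]:
    """Count samples per (target cluster, predicted cluster) pair.

    Rows follow the sorted target labels and columns the sorted predicted labels,
    so label values only act as group identifiers.
    """
    if len(preds) != len(target):
        raise ValueError("preds and target must both have shape (N,)")
    row_of = {label: i for i, label in enumerate(sorted(set(target)))}
    col_of = {label: j for j, label in enumerate(sorted(set(preds)))}
    table = [[0] * len(col_of) for _ in range(len(row_of))]
    for p, t in zip(preds, target):
        table[row_of[t]][col_of[p]] += 1
    return table


for row in contingency_table([2, 1, 0, 1, 0], [0, 2, 1, 1, 0]):
    print(row)
```

Row sums are the target cluster sizes and column sums the predicted cluster sizes. Together with the cell counts, these are all that the mutual information, the entropies and the expected mutual information depend on, which is why renaming cluster IDs does not change the score.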

As output of `forward` and `compute` the metric returns the following output:

- `ami_score` (`Tensor`): A tensor with the Adjusted Mutual Information Score

- Parameters:

`average_method` (`Literal['min', 'geometric', 'arithmetic', 'max']`) – Method used to calculate the generalized mean for normalization. Choose between `'min'`, `'geometric'`, `'arithmetic'` and `'max'`.

`kwargs` (`Any`) – Additional keyword arguments, see Advanced metric settings for more info.
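The four `average_method` values correspond to generalized means of order \(p \to -\infty\) (`'min'`), \(p = 0\) (`'geometric'`), \(p = 1\) (`'arithmetic'`) and \(p \to +\infty\) (`'max'`), which always satisfy min ≤ geometric ≤ arithmetic ≤ max. The sketch below plugs made-up, purely illustrative values for \(MI\), \(E(MI)\), \(H(U)\) and \(H(V)\) into the AMI formula to show the effect: with a positive numerator, a larger normalizer yields a smaller score. The helpers `normalizer` and `ami_from_terms` are hypothetical.

```python
import math
from typing import Dict


def normalizer(h_u: float, h_v: float, average_method: str) -> float:
    """Generalized mean M_p(H(U), H(V)) selected by average_method."""
    if average_method == "min":  # limit p -> -inf
        return min(h_u, h_v)
    if average_method == "geometric":  # p = 0
        return math.sqrt(h_u * h_v)
    if average_method == "arithmetic":  # p = 1
        return (h_u + h_v) / 2
    if average_method == "max":  # limit p -> +inf
        return max(h_u, h_v)
    raise ValueError(f"unknown average_method: {average_method!r}")


def ami_from_terms(mi: float, emi: float, h_u: float, h_v: float) -> Dict[str, float]:
    """Evaluate the AMI formula for every average_method, given precomputed terms."""
    return {
        method: (mi - emi) / (normalizer(h_u, h_v, method) - emi)
        for method in ("min", "geometric", "arithmetic", "max")
    }


# Made-up illustrative terms in nats; MI can never exceed min(H(U), H(V)).
scores = ami_from_terms(mi=0.9, emi=0.1, h_u=1.0, h_v=4.0)
for method, score in scores.items():
    print(f"{method:>10}: {score:.3f}")
```

When the two clusterings have very different entropies, as here, the choice of `average_method` changes the reported score substantially. When the entropies are equal, all four options give the same result.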

- Example:

>>> import torch
>>> from torchmetrics.clustering import AdjustedMutualInfoScore
>>> preds = torch.tensor([2, 1, 0, 1, 0])
>>> target = torch.tensor([0, 2, 1, 1, 0])
>>> ami_score = AdjustedMutualInfoScore(average_method="arithmetic")
>>> ami_score(preds, target)
tensor(-0.2500)

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

`val` (`Union[Tensor, Sequence[Tensor], None]`) – Either a single result from calling `metric.forward` or `metric.compute` or a list of these results. If no value is provided, will automatically call `metric.compute` and plot that result.

`ax` (`Optional[Axes]`) – A matplotlib axis object. If provided, will add the plot to that axis.

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

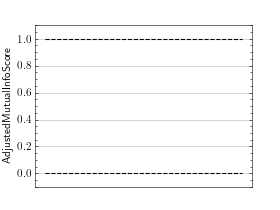

>>> # Example plotting a single value
>>> import torch
>>> from torchmetrics.clustering import AdjustedMutualInfoScore
>>> metric = AdjustedMutualInfoScore()
>>> metric.update(torch.randint(0, 4, (10,)), torch.randint(0, 4, (10,)))
>>> fig_, ax_ = metric.plot(metric.compute())

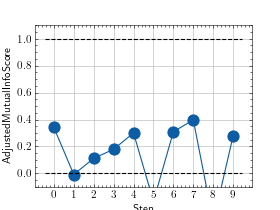

>>> # Example plotting multiple values
>>> import torch
>>> from torchmetrics.clustering import AdjustedMutualInfoScore
>>> metric = AdjustedMutualInfoScore()
>>> values = []
>>> for _ in range(10):
...     values.append(metric(torch.randint(0, 4, (10,)), torch.randint(0, 4, (10,))))
>>> fig_, ax_ = metric.plot(values)

Functional Interface

- torchmetrics.functional.clustering.adjusted_mutual_info_score(preds, target, average_method='arithmetic')

Compute adjusted mutual information between two clusterings.

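- Parameters:

`preds` (`Tensor`) – single integer tensor with predicted cluster labels

`target` (`Tensor`) – single integer tensor with ground truth cluster labels

`average_method` (`Literal['min', 'geometric', 'arithmetic', 'max']`) – Method used to calculate the generalized mean for normalization.

- Return type:

`Tensor`

- Returns:

Scalar tensor with the adjusted mutual info score. The score is at most 1.0 (perfect agreement), close to 0.0 for random labelings, and can be negative, as in the example below.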

Example

>>> import torch
>>> from torchmetrics.functional.clustering import adjusted_mutual_info_score
>>> preds = torch.tensor([2, 1, 0, 1, 0])
>>> target = torch.tensor([0, 2, 1, 1, 0])
>>> adjusted_mutual_info_score(preds, target, "arithmetic")
tensor(-0.2500)