Deep Learning Fundamentals

Unit 6 Exercises

Exercise 1: Learning Rate Warmup

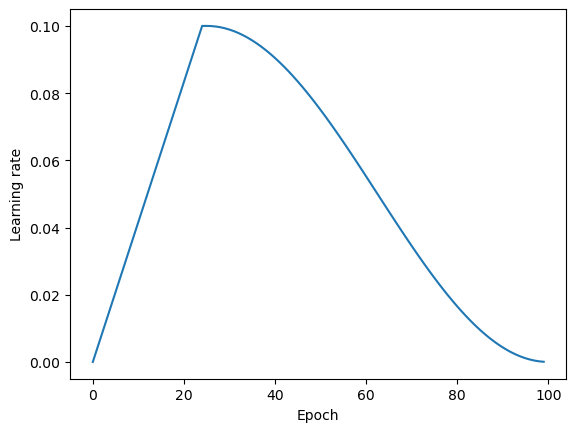

This exercise asks you to experiment with learning rate warmup combined with cosine annealing.

Learning rate warmup is a technique that gradually increases the learning rate from a small value to a larger target value over a certain number of iterations or epochs. Empirically, warmup has been shown to stabilize training and improve convergence, particularly in the first iterations, when the weights are still randomly initialized and large updates can be destabilizing.
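To make the shape of this schedule concrete, here is a minimal pure-Python sketch that computes the learning rate for a given epoch. The function name warmup_cosine_lr is hypothetical. Its parameter names mirror those of LinearWarmupCosineAnnealingLR (warmup_epochs, max_epochs, warmup_start_lr, eta_min), but the formula is an illustrative assumption and may differ in details, such as the exact epoch at which warmup reaches the base learning rate.

```python
import math


def warmup_cosine_lr(epoch, base_lr, warmup_epochs, max_epochs,
                     warmup_start_lr=0.0, eta_min=0.0):
    """Hypothetical helper: linear warmup followed by cosine annealing."""
    if epoch < warmup_epochs:
        # Linear warmup: interpolate from warmup_start_lr toward base_lr
        return warmup_start_lr + (base_lr - warmup_start_lr) * epoch / warmup_epochs
    # Cosine annealing: decay from base_lr (end of warmup) to eta_min (max_epochs)
    progress = (epoch - warmup_epochs) / max(1, max_epochs - warmup_epochs)
    return eta_min + 0.5 * (base_lr - eta_min) * (1.0 + math.cos(math.pi * progress))


# Learning rate every 5 epochs for a 25-epoch run with 5 warmup epochs
schedule = [round(warmup_cosine_lr(e, base_lr=0.1, warmup_epochs=5, max_epochs=25), 4)
            for e in range(0, 26, 5)]
print(schedule)  # [0.0, 0.1, 0.0854, 0.05, 0.0146, 0.0]
```

The printed values show the learning rate climbing linearly during the first 5 epochs, peaking at 0.1, and then following a half cosine down to 0.0 at epoch 25.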

In this notebook, we are adapting the cosine annealing code from Unit 6.2 Part 5.

In particular, your task is to replace the torch.optim.lr_scheduler.CosineAnnealingLR scheduler with a similar scheduler that supports warmup. For this, we are going to use the LinearWarmupCosineAnnealingLR class from the PyTorch Lightning Bolts library, which can be installed via

```shell
pip install pl-bolts
```

You can find more details on LinearWarmupCosineAnnealingLR usage in the Lightning Bolts documentation.

You can use the accompanying notebook as a template and fill in the marked blanks. If you use a schedule similar to the one shown in the figure above, you should reach about 89% accuracy.
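If you want to see how a warmup schedule plugs into a PyTorch optimizer without installing pl-bolts, the following sketch builds a similar schedule on torch.optim.lr_scheduler.LambdaLR. This is a swapped-in alternative, not the LinearWarmupCosineAnnealingLR implementation. The helper make_warmup_cosine_scheduler, the placeholder model, and the hyperparameters are hypothetical, and the exact learning rates will differ slightly from the Bolts scheduler (for example, this version starts warmup at base_lr / warmup_epochs rather than at a separate warmup_start_lr).

```python
import math

import torch


def make_warmup_cosine_scheduler(optimizer, warmup_epochs, max_epochs):
    """Hypothetical helper: linear warmup, then cosine annealing, via LambdaLR.

    LambdaLR sets each parameter group's learning rate to
    initial_lr * lr_lambda(epoch), so lr_lambda returns a scaling factor.
    """

    def lr_lambda(epoch):
        if epoch < warmup_epochs:
            # Warmup: factor grows linearly, reaching 1.0 at the last warmup epoch
            return (epoch + 1) / warmup_epochs
        # Cosine annealing: factor decays from 1.0 toward 0.0
        progress = (epoch - warmup_epochs) / max(1, max_epochs - warmup_epochs)
        return 0.5 * (1.0 + math.cos(math.pi * progress))

    return torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda)


model = torch.nn.Linear(10, 2)  # placeholder model
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
scheduler = make_warmup_cosine_scheduler(optimizer, warmup_epochs=5, max_epochs=25)

lrs = []
for epoch in range(25):
    lrs.append(optimizer.param_groups[0]["lr"])
    # ... iterate over the training batches here (forward, backward, optimizer.step()) ...
    optimizer.step()
    scheduler.step()  # advance the schedule once per epoch
```

Here scheduler.step() is called once per epoch; if you step it once per iteration instead, warmup_epochs and max_epochs have to be expressed in iterations.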

Starter code: https://github.com/Lightning-AI/dl-fundamentals/tree/main/unit06-dl-tips/exercises/1_cosine-with-warmup

Exercise 2: Adam With Weight Decay

The task of this exercise is to experiment with weight decay. Note that we haven't covered weight decay in the lectures. Like dropout (which we covered in Unit 6), weight decay is a regularization technique used in training neural networks to reduce overfitting.

Traditionally, a related method called L2 regularization adds a penalty term, proportional to the sum of the squared weights, to the loss function (e.g., the cross-entropy loss), which encourages the network to learn smaller weights. Smaller weights often lead to less overfitting because they encourage the model to learn simpler, more generalizable patterns from the training data, rather than fitting the noise or capturing complex, specific patterns that may not generalize well to new, unseen data.

If you want to learn more about L2 regularization, I covered it in this video.

Weight decay, in contrast, is applied directly in the optimizer's weight update rule, skipping the loss modification step. For plain stochastic gradient descent, it has the same effect as L2 regularization; for adaptive optimizers such as Adam, however, the two are no longer equivalent.
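For plain stochastic gradient descent, the equivalence can be checked in one line. Here, η denotes the learning rate, λ the regularization strength, and \mathcal{L} the original loss; the λ/2 scaling of the penalty is a common convention that makes the two update rules match exactly:

$$
\begin{aligned}
\mathcal{L}_{\text{reg}}(\mathbf{w}) &= \mathcal{L}(\mathbf{w}) + \frac{\lambda}{2}\lVert \mathbf{w} \rVert_2^2 \\
\text{L2 regularization (SGD on } \mathcal{L}_{\text{reg}}\text{):}\quad \mathbf{w} &\leftarrow \mathbf{w} - \eta\big(\nabla_{\mathbf{w}}\mathcal{L}(\mathbf{w}) + \lambda\mathbf{w}\big) = (1 - \eta\lambda)\,\mathbf{w} - \eta\,\nabla_{\mathbf{w}}\mathcal{L}(\mathbf{w}) \\
\text{Weight decay (SGD on } \mathcal{L}\text{):}\quad \mathbf{w} &\leftarrow (1 - \eta\lambda)\,\mathbf{w} - \eta\,\nabla_{\mathbf{w}}\mathcal{L}(\mathbf{w})
\end{aligned}
$$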

For this, we are going to use the so-called AdamW optimizer. AdamW is a variant of the Adam optimization algorithm that decouples weight decay from the adaptive learning rate. In AdamW, weight decay is applied directly to the model's weights instead of being incorporated into the gradients as in standard Adam. This decoupling is particularly beneficial for adaptive learning rate methods like Adam: when the L2 term is added to the gradient, it is also rescaled by Adam's per-parameter adaptive learning rate, so weights with large historical gradients are regularized less than intended. Applying the decay directly to the weights keeps it effective as a regularizer.
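To see why the decoupling matters, the following toy sketch re-implements a single Adam step for one scalar weight in plain Python, either adding weight_decay * w to the gradient (Adam with L2 regularization) or applying the decay directly to the weight (AdamW style). It is a simplified illustration, not PyTorch's implementation; the function adam_step and the chosen values are hypothetical, and the loss gradient is set to zero so that only regularization acts on the weight.

```python
import math


def adam_step(w, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8,
              weight_decay=0.0, decoupled=False):
    """Hypothetical single Adam update for one scalar weight.

    decoupled=False: Adam + L2, i.e., weight_decay * w is added to the gradient
                     and then passes through Adam's adaptive scaling.
    decoupled=True:  AdamW-style decay, applied directly to the weight.
    """
    if not decoupled:
        grad = grad + weight_decay * w           # L2 penalty gradient
    m = beta1 * m + (1 - beta1) * grad           # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad ** 2      # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                 # bias corrections
    v_hat = v / (1 - beta2 ** t)
    if decoupled:
        w = w - lr * weight_decay * w            # decay not rescaled by v_hat
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


# First step from w=1.0 with zero loss gradient, for two weight decay settings
for wd in (0.1, 0.01):
    w_l2, _, _ = adam_step(1.0, 0.0, 0.0, 0.0, t=1, lr=0.1, weight_decay=wd, decoupled=False)
    w_adamw, _, _ = adam_step(1.0, 0.0, 0.0, 0.0, t=1, lr=0.1, weight_decay=wd, decoupled=True)
    print(f"weight_decay={wd}: Adam+L2 -> {w_l2:.4f}, AdamW -> {w_adamw:.4f}")
# weight_decay=0.1: Adam+L2 -> 0.9000, AdamW -> 0.9900
# weight_decay=0.01: Adam+L2 -> 0.9000, AdamW -> 0.9990
```

In the L2 variant, Adam's normalization divides the penalty gradient by roughly its own magnitude, so on this first step the weight shrinks by about lr regardless of weight_decay. In the decoupled variant, the shrinkage is exactly lr * weight_decay * w, so the weight_decay setting directly controls the regularization strength.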

If you are interested, you can read more about AdamW in the original research paper, "Decoupled Weight Decay Regularization" by Ilya Loshchilov and Frank Hutter.

For the API details, see the PyTorch documentation page for torch.optim.AdamW.

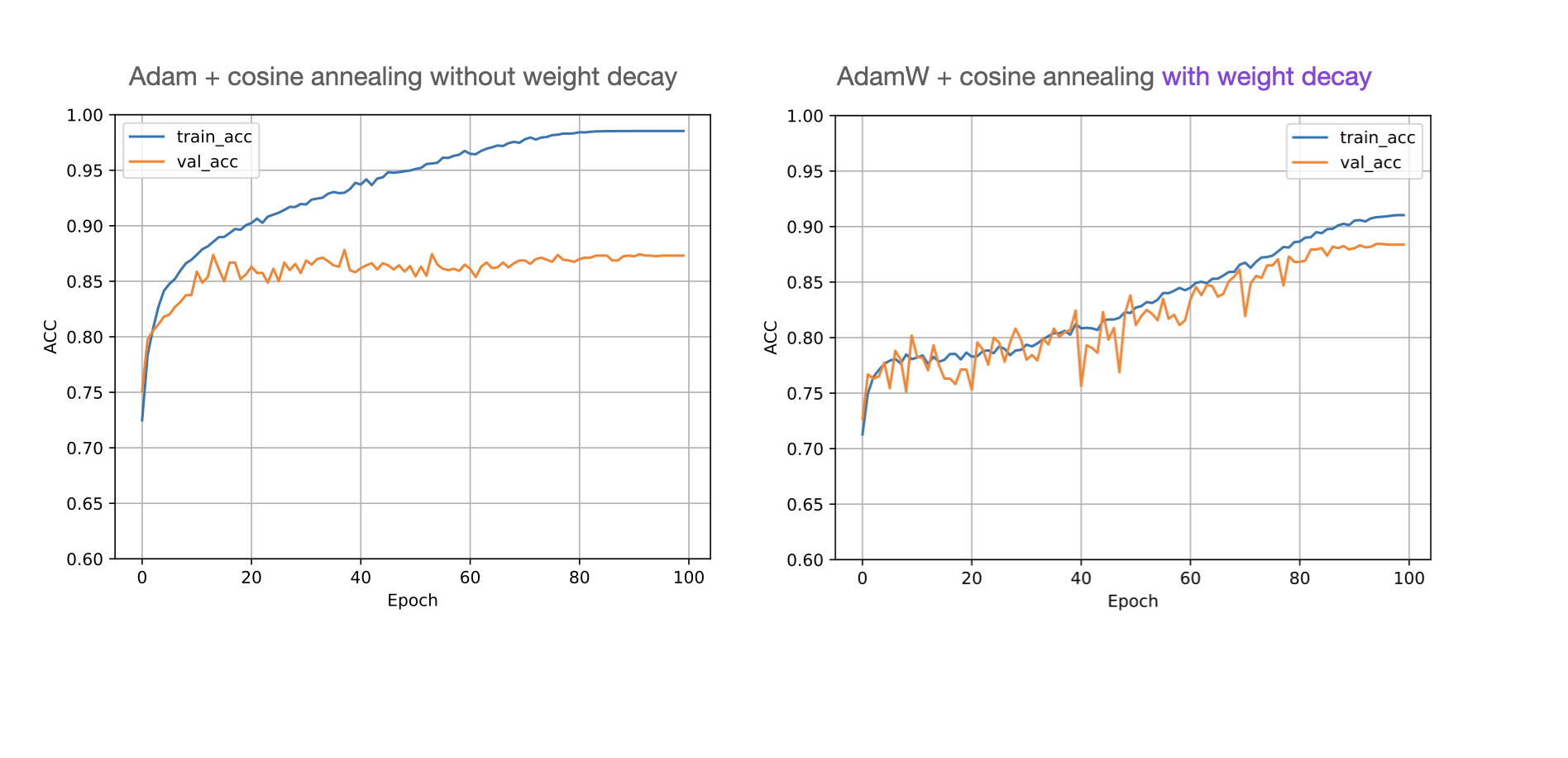

Your task is now to take the template code and replace the Adam optimizer with AdamW to reduce overfitting. You can largely leave the other hyperparameters unchanged and focus on tuning the weight_decay parameter of AdamW.
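The core change is a one-line optimizer swap. Below is a minimal sketch, assuming a plain PyTorch setup; in the Lightning-based template, the corresponding line most likely sits in the LightningModule's configure_optimizers method. The small MLP, the random batch, and the weight_decay value of 0.1 are hypothetical placeholders, not values from the template.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(123)

# Hypothetical stand-in for the template's model (an MLP on flattened 28x28 inputs)
model = torch.nn.Sequential(
    torch.nn.Linear(784, 128),
    torch.nn.ReLU(),
    torch.nn.Linear(128, 10),
)

# Before: optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
# After: AdamW with decoupled weight decay; 0.1 is only a starting guess to tune
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3, weight_decay=0.1)

# One illustrative training step on random data
features = torch.randn(32, 784)
targets = torch.randint(0, 10, (32,))
loss = F.cross_entropy(model(features), targets)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

Note that torch.optim.AdamW already uses weight_decay=0.01 by default, so a practical approach is to try a few larger and smaller values (for example, on a logarithmic grid) and compare the validation accuracy.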

The figure above illustrates how Adam compares to AdamW with a tuned weight decay parameter. With a well-tuned weight decay, the test set accuracy should increase from 86% to 89%.

Starter code: https://github.com/Lightning-AI/dl-fundamentals/tree/main/unit06-dl-tips/exercises/2_adam-with-weight-decay
