Deep Learning Fundamentals

Unit 5 Exercises

Exercise 1 – Changing the Classifier to a Regression Model

Remember the regression model we trained in Unit 4.5? To get some hands-on practice with PyTorch and the LightningModule class, we are going to convert the MNIST classifier from this unit (Unit 5) into a regression model.

For this, we are going to use the Ames Housing Dataset from OpenML. But no worries, you don’t have to code the Dataset and DataModule classes yourself! I already took care of that and am providing a working AmesHousingDataModule. Your task is to change the PyTorchMLP into a regression model and to adjust the LightningModel so that it tracks the mean squared error instead of the classification accuracy.

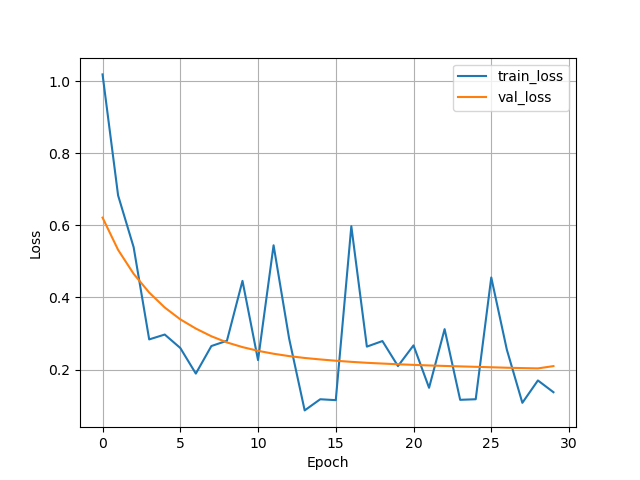

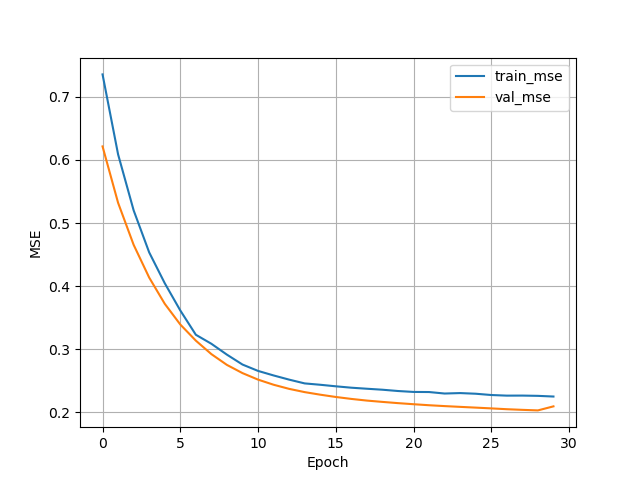

If you are successful, the mean squared errors for the training, validation, and test set should be around 0.2 to 0.3.
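For reference, the mean squared error averages the squared differences between the true target values and the model's predictions over the n examples of a dataset split:

$$
\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y^{(i)} - \hat{y}^{(i)} \right)^2
$$

Here, y^(i) is the true target of the i-th example and ŷ^(i) is the corresponding prediction. Lower is better, and an MSE of 0 would mean every prediction is exact.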

Here is a link to the starter code: https://github.com/Lightning-AI/dl-fundamentals/tree/main/unit05-lightning/exercises/1_lightning-regression

And below are some tips and comments about changes you need to make.

As always, please don’t hesitate to reach out for help via the forums if you get stuck!

In training.py

- Use TensorBoard, or import the `CSVLogger` and use it in the `Trainer` (see Unit 6)

- Replace the `MNISTDataModule()` with the `AmesHousingDataModule()` (already provided in the `shared_utilities.py` file)

- Change the number of features in the `PyTorchMLP(...)` class to 3 and remove the number of classes (the `num_classes` argument)

- Change “acc” to “mse”

- Add the logging code at the bottom if you use the CSV logger. In that case, it would be:

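```python
import matplotlib.pyplot as plt
import pandas as pd

# Load the metrics written by the CSVLogger
metrics = pd.read_csv(f"{trainer.logger.log_dir}/metrics.csv")

# Rows logged within the same epoch (e.g., training and validation metrics)
# are averaged into one row per epoch; mean() skips the NaN entries
aggreg_metrics = []
agg_col = "epoch"
for i, dfg in metrics.groupby(agg_col):
    agg = dict(dfg.mean())
    agg[agg_col] = i
    aggreg_metrics.append(agg)

df_metrics = pd.DataFrame(aggreg_metrics)
df_metrics[["train_loss", "val_loss"]].plot(
    grid=True, legend=True, xlabel="Epoch", ylabel="Loss"
)
df_metrics[["train_mse", "val_mse"]].plot(
    grid=True, legend=True, xlabel="Epoch", ylabel="MSE"
)

plt.show()
```

In shared_utilities.py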

Modify the PyTorchMLP class as follows:

- Remove the `num_classes` argument from the `PyTorchMLP`

- Change the network to 1 output node and flatten the logits (e.g., using the `torch.flatten` function)

- Optionally, use only 1 instead of 2 hidden layers to reduce overfitting
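A minimal sketch of the modified class follows. It assumes the starter code's `PyTorchMLP` stores its layers in a `torch.nn.Sequential` attribute named `all_layers`, as in the Unit 5 classifier. The hidden-layer width of 50 is an arbitrary choice for illustration. Flattening matters if the data module yields targets of shape `(batch_size,)`, which is also an assumption here. Without flattening, the model returns outputs of shape `(batch_size, 1)`, and `torch.nn.functional.mse_loss` would broadcast the two into a `(batch_size, batch_size)` matrix, emitting a warning and computing the wrong loss.

```python
import torch


class PyTorchMLP(torch.nn.Module):
    # num_classes is gone; the network predicts one continuous value
    def __init__(self, num_features):
        super().__init__()
        self.all_layers = torch.nn.Sequential(
            # single hidden layer (optional change to reduce overfitting)
            torch.nn.Linear(num_features, 50),
            torch.nn.ReLU(),
            # output layer with 1 node
            torch.nn.Linear(50, 1),
        )

    def forward(self, x):
        logits = self.all_layers(x)
        # flatten (batch_size, 1) -> (batch_size,) to match the target shape
        logits = torch.flatten(logits)
        return logits


model = PyTorchMLP(num_features=3)
features = torch.randn(8, 3)  # a random batch of 8 examples with 3 features
print(model(features).shape)  # torch.Size([8])
```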

Modify the LightningModel class as follows:

- Use `MeanSquaredError` instead of `Accuracy` via torchmetrics

- Change the loss function accordingly (i.e., replace the cross-entropy loss with a mean squared error loss)

- Make sure you are returning the predictions correctly in `_shared_step`; they are no longer class labels

- Change `acc` to `mse` everywhere

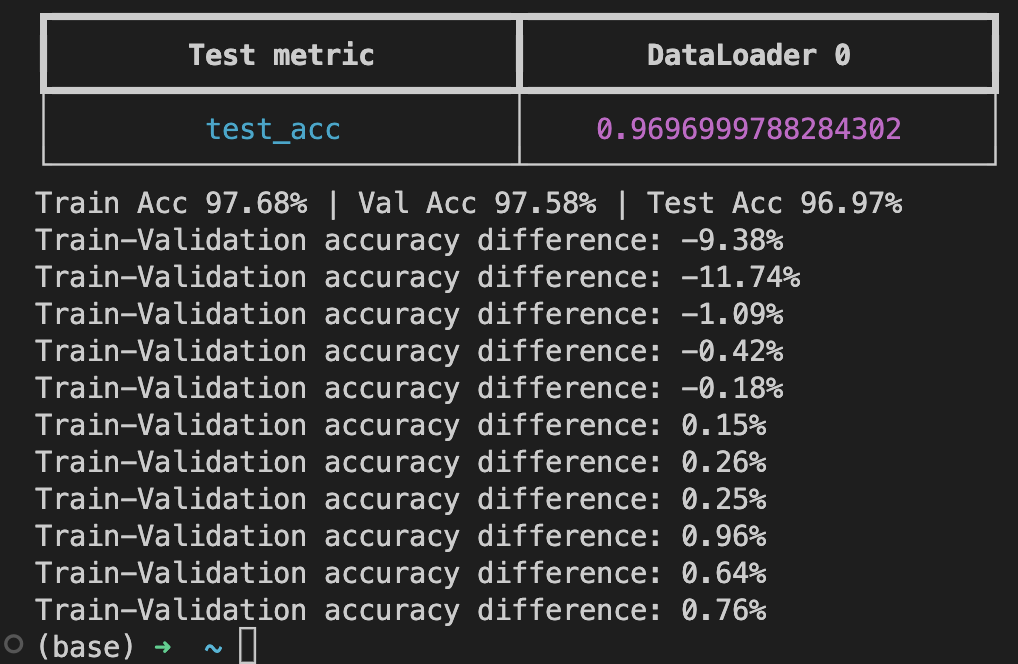

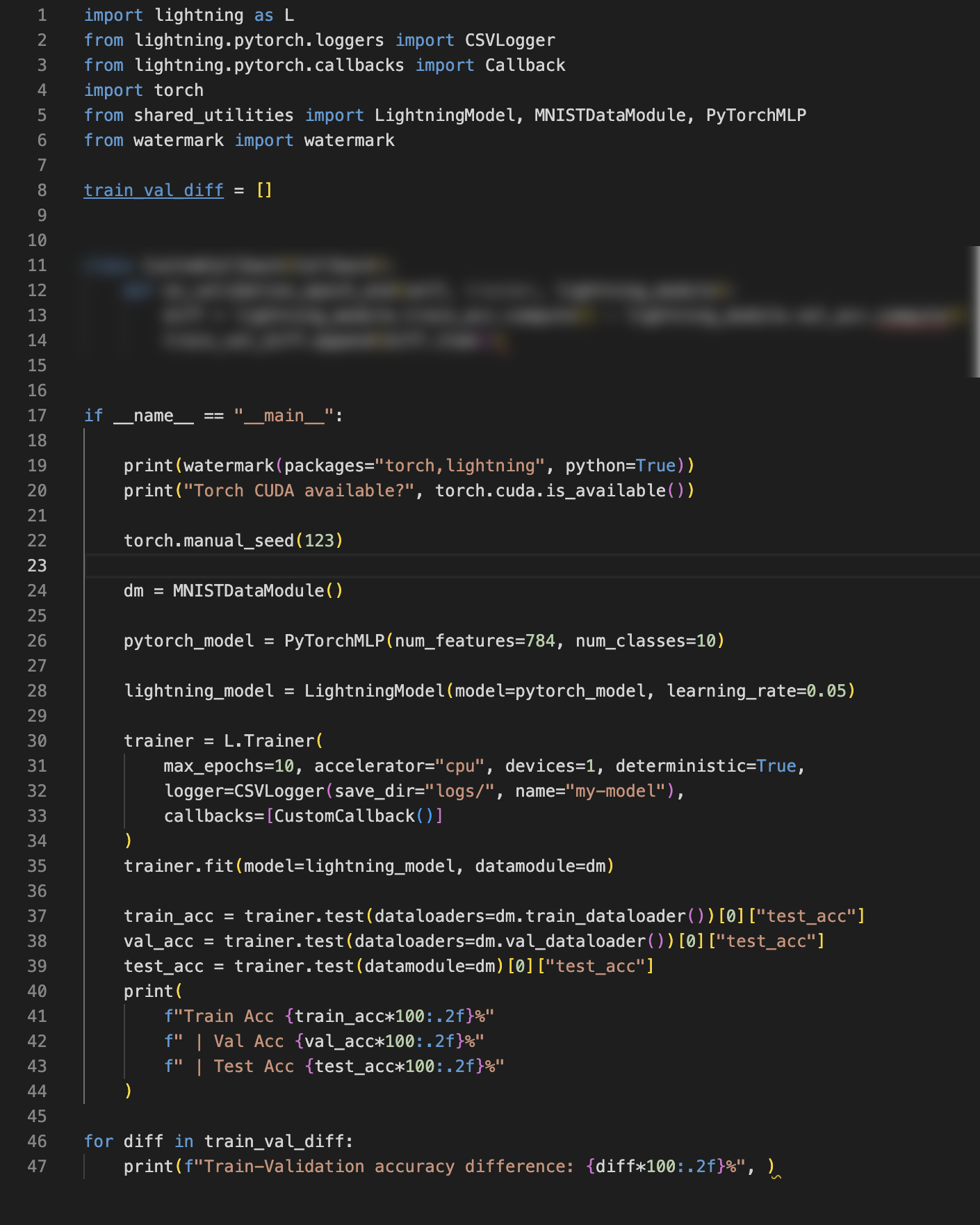

Exercise 2 – A Custom Plugin for Tracking Training and Validation Accuracy Difference

In this exercise, modify the existing MNIST classifier so that it tracks the difference between the training set accuracy and the validation set accuracy after each epoch.

Here, it’s easiest to implement the callback so that it computes the training set accuracy minus the validation set accuracy after each epoch ends. This difference is then appended to a list that you can print at the end of your script. The solution for this exercise involves only a few lines of code, as hinted at in the screenshot of the solution below.

(Note that you could also compute these differences from the log files. However, the goal of this exercise is to practice developing custom callbacks.)

Here is a link to the starter code: https://github.com/Lightning-AI/dl-fundamentals/tree/main/unit05-lightning/exercises/2_custom-callback

As always, please don’t hesitate to reach out for help via the forums if you get stuck!
