Adapter

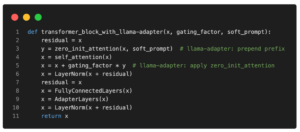

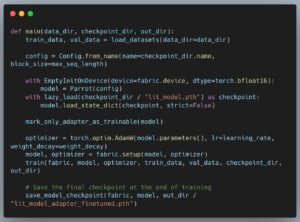

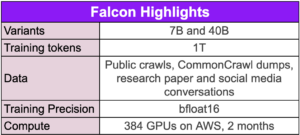

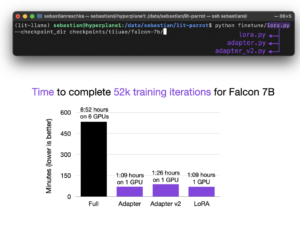

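An adapter is a lightweight fine-tuning method that adds a small number of trainable parameters to a pre-trained model, typically while keeping the original weights frozen. Because only the added parameters are updated, fine-tuning is cheap in compute and storage, and the knowledge already encoded in the pre-trained weights is preserved. The same base model can therefore be adapted to many different tasks at low cost.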
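To make the idea concrete, here is a minimal sketch of one common adapter design, a residual bottleneck layer placed after a frozen layer. Everything in it is an illustrative assumption rather than a reference implementation: the `BottleneckAdapter` class, the sizes `d_model` and `bottleneck`, and the random `W_frozen` matrix standing in for pre-trained weights are all hypothetical. It uses only the Python standard library and shows two properties: the adapter adds far fewer parameters than the layer it wraps, and with a zero-initialized up-projection it leaves the frozen layer's output unchanged until training begins.

```python
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def make_matrix(rows, cols, rng, scale):
    """Build a rows x cols matrix of small Gaussian values."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class BottleneckAdapter:
    """Hypothetical residual adapter: h + W_up @ relu(W_down @ h)."""

    def __init__(self, d_model, bottleneck, rng):
        # Down-projection into a narrow bottleneck (trainable).
        self.W_down = make_matrix(bottleneck, d_model, rng, 0.02)
        # Up-projection starts at zero, so the adapter is initially
        # an identity function on top of the frozen layer (trainable).
        self.W_up = [[0.0] * bottleneck for _ in range(d_model)]

    def __call__(self, h):
        z = [max(0.0, v) for v in matvec(self.W_down, h)]  # ReLU
        delta = matvec(self.W_up, z)
        return [hi + di for hi, di in zip(h, delta)]       # residual add

    def num_params(self):
        # W_down and W_up each hold bottleneck * d_model values.
        return 2 * len(self.W_down) * len(self.W_down[0])


rng = random.Random(0)
d_model, bottleneck = 64, 4

# Stand-in for a pre-trained layer; in adapter tuning it is never updated.
W_frozen = make_matrix(d_model, d_model, rng, 0.1)
adapter = BottleneckAdapter(d_model, bottleneck, rng)

x = [rng.gauss(0.0, 1.0) for _ in range(d_model)]
h = matvec(W_frozen, x)   # output of the frozen layer
y = adapter(h)            # output after the adapter

frozen_params = d_model * d_model
adapter_params = adapter.num_params()
print(f"frozen: {frozen_params}, adapter: {adapter_params}")
print("adapter is identity at init:", y == h)
```

In a real setup, only `W_down` and `W_up` would receive gradient updates, and a separate small adapter could be stored per task while the base model is shared.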