A New ML Model, a New Startup Opportunity

Give Echo, our free transcription app powered by OpenAI’s Whisper, a try!

Things are moving fast in the machine learning space. Just a short while ago, OpenAI released Whisper, a general-purpose speech recognition model. Not only did they open-source the model code, they also provided the model weights, making it fully usable in just a few lines of Python. When I saw how simple the Whisper code snippet was, my immediate thought was to build an app with the model at its core. Below, I’ll show you how I used Lightning to deploy OpenAI’s Whisper.

There are several audio/video captioning services available, but most of them are proprietary and relatively expensive to use, charging upwards of $5/minute of video, and more for languages other than English. Additionally, these services cannot be used in certain industries like healthcare and finance because of data security policies. This creates the perfect opportunity for a new app to disrupt the industry: one that can run on low-cost commodity GPUs, and even inside a private AWS account. So I pretended to be a startup company and set out to build an app!

My background is not in machine learning. I would call myself a full-stack developer who loves building end-to-end features using React. The Lightning Framework looks and feels a lot like React, just written in Python, so it was very intuitive to learn and use as a backend for my transcription app. (You can look through Echo’s code here.)

I decided to call my app Echo, after the Greek nymph who was cursed to only repeat words that were spoken to her. Each piece of content that was transcribed would be turned into an “Echo”.

. . .

Creating an App from a Model

If you have worked at a startup before, you know that time is the most valuable resource. The faster you can take your great idea and turn it into a working prototype, the faster you can show it off to potential investors or customers.

With that in mind, our first goal was to validate that the Whisper model works and can be embedded into an app. The Whisper model runs much faster on a machine with a GPU. The Lightning Framework provides the perfect abstraction for running models and other compute-intensive workloads, called a “Work” (LightningWork in Python). All we needed to do was create a subclass of LightningWork and copy the Whisper sample code into its run() method.

That’s all the code we need to use the Whisper model in our app! Using this Work component in the app then takes only a few more lines of code.

After running the app locally using lightning run app app.py and verifying that it works, we can run it in the cloud and have the SpeechRecognizer Work run on a GPU by just adding --cloud: lightning run app --cloud app.py.

Within a few minutes, we have already created the building blocks of a speech recognition app that can be shown to potential investors or customers of our theoretical startup.

. . .

Rapid Feature Development Phase

Now that we’ve validated the core app idea, we can start building out features and take the app beyond just a demo into a real product. The Lightning Framework enabled a fast development cycle in the following ways.

Lightning Commands

The Lightning Framework provides a convenient way to generate a command-line interface (CLI) for your app, which acts as an alternative to a conventional React UI. We used this extensively to prototype and test new features of the app before we were ready to commit to building the frontend. CLI commands are generated by overriding the configure_commands() method:

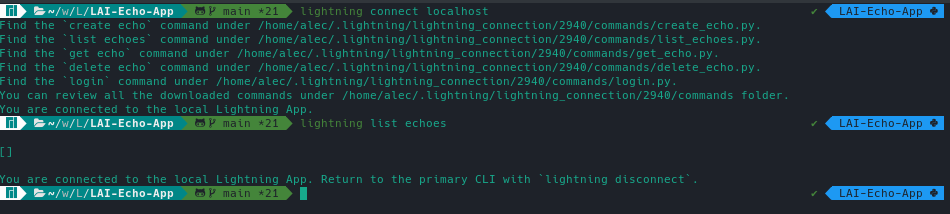

We then connect to our app via the Lightning CLI and invoke the commands we just defined:


Generating a REST API

Once we were ready to start writing some React, we needed a way to communicate with the Python backend. REST APIs are commonly used for this purpose, so the Lightning Framework provides a way to generate one for your app (using FastAPI), complete with an OpenAPI definition for automatic JavaScript/TypeScript client generation. We were able to reuse all the logic from our configure_commands() by overriding the configure_api() method. We then used the OpenAPI definition to generate our TypeScript client instead of writing it by hand, which takes time and can introduce hard-to-find bugs.

With our generated API client, it was straightforward to write some data fetching hooks with react-query, which left us free to build out several cool features of the app, which you can check out here!

. . .

Adding Enterprise Features

Since we were building an app targeting enterprise use cases, it needed some features that these customers have come to expect. The Lightning Framework makes these easy and quick to implement. Below are just a few highlights of the enterprise features in Echo.

Handling Scale

To process multiple Echoes at a time, we needed more than one SpeechRecognizer Work running. However, GPU Works cost money, and we only want to run them if the load on our app is high enough. I made a LoadBalancer component which scales the number of Works up and down and distributes the load evenly across them. Normally, load balancing and autoscaling are boring chores that developers have to do using ugly infrastructure-specific templates (no one likes writing YAML). But with the Lightning Framework, it was surprisingly fun to implement a load balancing algorithm in just a few lines of pure Python!
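To make the idea concrete, here is a minimal sketch of one way such a component could work. It is not Echo’s actual LoadBalancer: the class names (Worker, LeastBusyBalancer), the jobs_per_worker threshold, and the "send each job to the least busy worker" policy are all assumptions made for illustration, and the workers are plain Python objects standing in for SpeechRecognizer Works.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Worker:
    """Hypothetical stand-in for one SpeechRecognizer Work."""
    name: str
    pending: list = field(default_factory=list)


class LeastBusyBalancer:
    """Illustrative balancer: least-busy routing plus simple autoscaling."""

    def __init__(self, min_workers=1, max_workers=4, jobs_per_worker=2):
        self.min_workers = min_workers
        self.max_workers = max_workers
        self.jobs_per_worker = jobs_per_worker  # assumed capacity per worker
        self.workers = [Worker(f"worker-{i}") for i in range(min_workers)]
        self._next_id = min_workers

    def total_pending(self):
        return sum(len(w.pending) for w in self.workers)

    def desired_workers(self, extra_jobs=0):
        # Enough workers for the current load, clamped to [min, max].
        load = self.total_pending() + extra_jobs
        needed = math.ceil(load / self.jobs_per_worker)
        return max(self.min_workers, min(self.max_workers, needed))

    def submit(self, job):
        # Scale up first if the new job would exceed current capacity.
        while len(self.workers) < self.desired_workers(extra_jobs=1):
            self.workers.append(Worker(f"worker-{self._next_id}"))
            self._next_id += 1
        # Route the job to the worker with the fewest pending jobs.
        target = min(self.workers, key=lambda w: len(w.pending))
        target.pending.append(job)
        return target.name

    def complete(self, worker_name, job):
        worker = next(w for w in self.workers if w.name == worker_name)
        worker.pending.remove(job)
        self._scale_down()

    def _scale_down(self):
        # Stop idle workers while we have more than the load requires.
        desired = self.desired_workers()
        idle = [w for w in self.workers if not w.pending]
        while len(self.workers) > desired and idle:
            self.workers.remove(idle.pop())
```

In a real Lightning app, adding or removing a worker would mean starting or stopping a GPU Work rather than editing a list, but the scaling decision itself can stay this small.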

Configuration using Environment Variables and Secrets

Being able to change the behavior of your app without changing the code is known as configuration. Here are just a few things that can be configured in the Echo app:

- Minimum number of SpeechRecognizer Works (for autoscaling)

- Which media sources are enabled (YouTube, device microphone, file upload)

  - In our hosted app, you will notice that file upload is disabled. This is done using an environment variable! If you run the app yourself, you can enable this feature.

- Maximum YouTube video duration allowed

- Maximum number of Echoes per user

The full list of each configuration option, its associated environment variable name, and the default value can be found here. Lightning Apps support both environment variables and secrets.
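As a rough illustration of the pattern, the options listed above could be read from environment variables like this. Every variable name (the DEMO_ prefix), every default value, and the EchoConfig class are invented for this sketch; the real names and defaults are the ones documented in the Echo repository.

```python
import os
from dataclasses import dataclass

# NOTE: all variable names and defaults in this file are hypothetical.
_TRUE_VALUES = {"1", "true", "yes", "on"}


def env_bool(env, name, default):
    """Read a boolean flag such as "true" or "false"."""
    raw = env.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in _TRUE_VALUES


def env_int(env, name, default):
    """Read an integer setting, falling back to the default when unset."""
    raw = env.get(name)
    return default if raw is None else int(raw)


@dataclass(frozen=True)
class EchoConfig:
    min_recognizer_works: int
    enabled_sources: tuple
    max_youtube_seconds: int
    max_echoes_per_user: int


def load_config(env=None):
    """Build the config from os.environ, or from a dict passed in for testing."""
    env = os.environ if env is None else env
    sources = tuple(
        source
        for source, flag in (
            ("youtube", "DEMO_ENABLE_YOUTUBE"),
            ("microphone", "DEMO_ENABLE_MICROPHONE"),
            ("file_upload", "DEMO_ENABLE_FILE_UPLOAD"),
        )
        if env_bool(env, flag, default=True)
    )
    return EchoConfig(
        min_recognizer_works=env_int(env, "DEMO_MIN_RECOGNIZER_WORKS", 1),
        enabled_sources=sources,
        max_youtube_seconds=env_int(env, "DEMO_MAX_YOUTUBE_SECONDS", 900),
        max_echoes_per_user=env_int(env, "DEMO_MAX_ECHOES_PER_USER", 100),
    )
```

With this shape, disabling file upload in a hosted deployment is just a matter of setting the (hypothetical) DEMO_ENABLE_FILE_UPLOAD variable to false, with no code change.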

Exception Monitoring with Sentry

Uncaught Python exceptions are annoying when running locally, but can be disastrous for a production app running in the cloud with many users. Tools like Sentry help us monitor exceptions in both the backend and frontend so that we can fix them quickly. This is optional but highly recommended for production apps.

Easy Deployment using GitHub Workflow

We were able to create a one-click deploy experience to lightning.ai using a simple GitHub Workflow that just calls lightning run app --cloud app.py. Use it as inspiration for your own app’s CI/CD!

. . .

Next Steps

There are still so many ideas for features we could add to Echo, but we wanted to launch a minimal version to users before we decided to add anything else. The source code is available on GitHub, and we hope that people will be inspired to fork the app and customize it with their own code. Here are some possible feature ideas if you need inspiration:

- Real-time transcription via streaming

- Email/Slack/SMS notification when your transcription completes

- Detecting different speakers in the audio and displaying them in a different color

I hope that I have managed to show you how fast a single developer can take the next hyped ML model and turn it into a real product in just a few weeks using the Lightning Framework. I’m excited to see what you build!