The Lightning Story

Today, we’re introducing PyTorch Lightning 2.0—as well as Fabric, a new library—to continue unlocking unprecedented scale, collaboration, and iteration for researchers and developers.

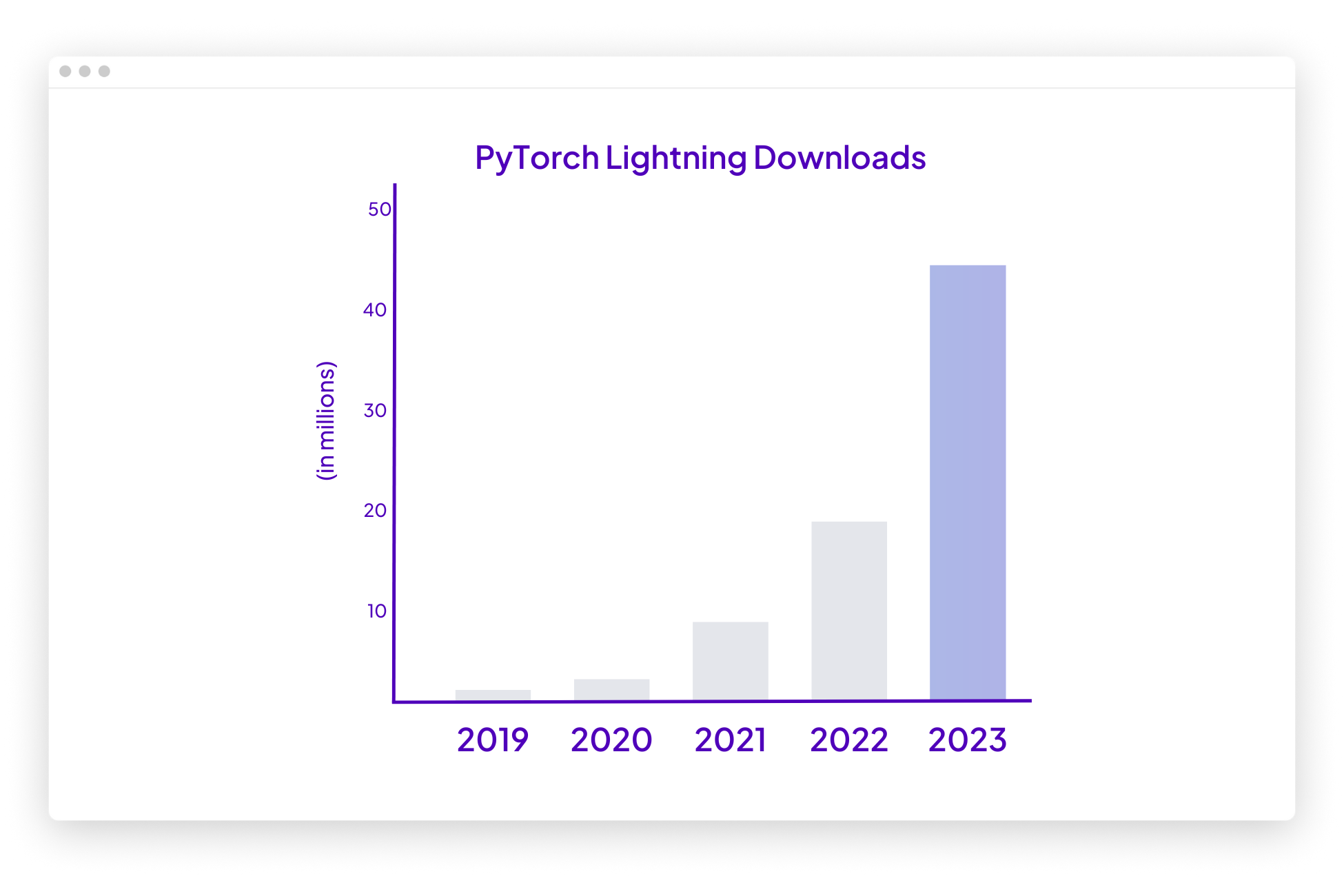

PyTorch Lightning launched 4 years ago and has far exceeded our initial expectations, with impact across research, startups, and enterprise. Researchers and developers quickly came to see PyTorch Lightning not just as a PyTorch wrapper, but as a way to enable iteration, collaboration, and scale. Its 4 million monthly downloads (inching ever closer to 50 million in total) and the vibrant community built around it are a testament to its value as an integral tool in the artificial intelligence and machine learning space.

PyTorch Lightning's impact

PyTorch Lightning has been adopted by thousands of organizations and currently powers research at leading academic institutions, as well as enterprise stacks and cutting-edge foundation models like Stable Diffusion and OpenFold. The LightningModule has become a standard for writing models in a self-contained way, enabling unprecedented levels of collaboration both within and across teams.

With great power…

To continue being the best tool for researchers, PyTorch Lightning has had to adapt quickly to the fast-changing field of AI. Enabling features to work together flawlessly while keeping the internals simple is a design challenge. Making PyTorch Lightning fast and easy to use carried with it a certain amount of code complexity. At the same time, some of the features and integrations we introduced over the years are no longer as relevant in 2023.

This is why we decided it was time to declare the PyTorch Lightning 1.x series mature with the 1.9.x releases. PyTorch Lightning 2.0 comes with support for PyTorch 2.0 and has a stable API that incorporates all the insights acquired during the 1.x journey. The simplified Trainer codebase is also more readable, with fewer abstractions and a tighter set of officially supported integrations.

Sometimes, full control is what you need

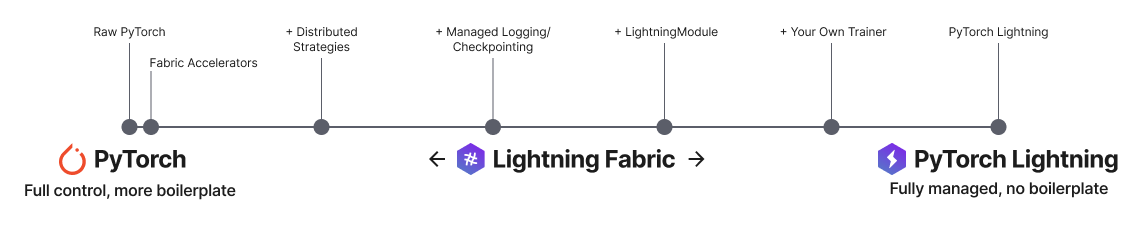

Rather than increasing the complexity of the PyTorch Lightning Trainer to provide additional extensibility points, we decided to unbundle the Trainer and expose its basic building blocks in the form of a new library: Lightning Fabric.

Lightning Fabric creates a continuum between raw PyTorch and the fully managed PyTorch Lightning experience. It allows you to supercharge your PyTorch code with accelerators, distributed strategies, and mixed precision, while retaining full control over your training loop.

Lightning Fabric

By changing fewer than 10 lines of code, you can take any codebase implementing a PyTorch model and have Fabric manage accelerators and distributed strategies, allowing you to scale up locally, with DeepSpeed or FSDP, or on a SLURM cluster. You can also leverage the LightningModule and Callbacks to maximize collaboration and code reuse, while keeping your training loops under your control.

How to hop on

Migrating to Lightning 2.0 is easy with our detailed migration guide. In the next few days, we’ll be introducing a migration script that will scan your code and suggest required changes.

PyTorch Lightning 1.9.x will still be maintained, but no new features will land there. If you rely on features that were removed in 2.0, you can keep using them from the 1.9.x line, or build them yourself using the building blocks in Lightning Fabric.

Game on!

Enjoy the revamped documentation and get all the goodness with `pip install lightning`.

See you at the Official 2.0 Launch Party on the Lightning AI Discord server on March 16!

Cheers!

Luca Antiga

CTO, Lightning AI