To celebrate the release of TorchMetrics v1.0.0, in this blog post we discuss the history of TorchMetrics and how its interface has evolved over time.

It all started in the very early days of PyTorch Lightning, on April 1st, 2020 to be precise. On this date, the first proposal for a metric interface was submitted to PyTorch Lightning. It followed an extensive discussion between William Falcon (the founder of PyTorch Lightning), Jiri Borovec (the first full-time developer of PyTorch Lightning), Tullie Murell and Davide Testuggine (both working at FAIR at the time), and Justus Schock (who was interested in contributing such a package and later became the first Tech Lead for metrics in PyTorch Lightning). This discussion was preceded by a more general discussion in the PyTorch issues, which showed the general need for a unified metrics package.

The initial draft was then quickly refined by a few other PRs and finally landed on April 3rd, 2020. This PR introduced the following main interface:

```python
from abc import ABC

import torch


class Metric(torch.nn.Module, ABC):
    """
    Abstract Base Class for metric implementation.

    Should be used to implement metrics that
    1. Return multiple Outputs
    2. Handle their own DDP sync
    """

    def __init__(self, name: str):
        ...

    def forward(self, *args, **kwargs) -> torch.Tensor:
        """
        Implements the actual metric computation.

        Returns:
            metric value
        """
        raise NotImplementedError

    ...
```

It also included many utilities to make the dtype and device available from the Module itself, many of which can still be found today in the DeviceDtypeModuleMixin that currently lives in lightning.fabric.

The core design elements of the Metric class at this time were:

- follow the nn.Module API as closely as possible, since people were already familiar with it
- enable distributed communication for metrics
- make it easy to integrate metrics from scikit-learn

The last point was especially important in the beginning. Although numpy.ndarray ↔ torch.CudaTensor conversions are inefficient (each one introduces a GPU synchronization point and a GPU↔CPU memory transfer), scikit-learn metrics were considered the gold standard for metric computation and correctness. Implementing most metrics with a scikit-learn backend also allowed us to quickly increase the number of metrics without worrying too much about their correctness.
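To make that trade-off concrete, here is a minimal sketch of wrapping a NumPy-based metric so that it accepts and returns torch tensors. The names tensor_metric_from_numpy and numpy_accuracy are hypothetical, and numpy_accuracy is only a stand-in for a scikit-learn function such as sklearn.metrics.accuracy_score; this is not the original NumpyMetric implementation. For CUDA inputs, the .cpu() calls are where the synchronization and GPU↔CPU copy happen.

```python
import numpy as np
import torch


def numpy_accuracy(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    # Hypothetical stand-in for a scikit-learn metric (same argument order as sklearn)
    return float(np.mean(y_true == y_pred))


def tensor_metric_from_numpy(fn):
    """Hypothetical wrapper: run a NumPy-based metric on torch tensors."""

    def wrapped(preds: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
        device = preds.device
        # For CUDA tensors, .cpu() forces a GPU->CPU copy and a synchronization point
        result = fn(target.detach().cpu().numpy(), preds.detach().cpu().numpy())
        # Copy the scalar result back to the device the inputs came from
        return torch.as_tensor(result, device=device)

    return wrapped


accuracy = tensor_metric_from_numpy(numpy_accuracy)
preds = torch.tensor([0, 1, 1, 0])
target = torch.tensor([0, 1, 0, 0])
print(accuracy(preds, target))
```

Every call pays for two device transfers, which is negligible on the CPU but adds up quickly when the model and data live on a GPU.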

On May 19th, a PR that further differentiated between NumpyMetric and TensorMetric landed. It also introduced one of the most commonly used functions in PyTorch Lightning's codebase to this day: apply_to_collection (which was later moved to our utilities package so that we could use it in our projects outside of the lightning package as well). This PR marks the beginning of proper support for distributed aggregation in PyTorch Lightning.
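The idea behind apply_to_collection is to apply a function to every element of a given type inside an arbitrarily nested collection while keeping the surrounding structure, for example to move or sync every tensor in a nested metric output. The following is a simplified, pure-Python sketch of that idea only; the real function supports more container types and options, and this version handles just dicts, lists and tuples.

```python
from typing import Any, Callable


def apply_to_collection(data: Any, dtype: type, function: Callable) -> Any:
    """Simplified sketch: apply `function` to every element of type `dtype`,
    recursing into dicts, lists and tuples and preserving their structure."""
    if isinstance(data, dtype):
        return function(data)
    if isinstance(data, dict):
        return {k: apply_to_collection(v, dtype, function) for k, v in data.items()}
    if isinstance(data, list):
        return [apply_to_collection(v, dtype, function) for v in data]
    if isinstance(data, tuple):
        return tuple(apply_to_collection(v, dtype, function) for v in data)
    # Anything that is neither `dtype` nor a supported container is returned unchanged
    return data


batch = {"loss": 0.5, "metrics": [1.0, 2.0], "name": "val"}
doubled = apply_to_collection(batch, float, lambda x: x * 2)
print(doubled)  # {'loss': 1.0, 'metrics': [2.0, 4.0], 'name': 'val'}
```

In a metrics context, dtype would typically be torch.Tensor and function a device transfer or a gather across processes.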

On June 10th, 2020, the first sklearn metrics were finally added directly to the package by this PR. At first there were only a few of the most commonly used ones (all of them classification metrics at this point), such as Accuracy, AveragePrecision, AUC, ConfusionMatrix, F1, FBeta, Precision, Recall, PrecisionRecallCurve, ROC and AUROC. By June 13th, 2020, many of these metrics had already been replaced by native PyTorch implementations in https://github.com/Lightning-AI/lightning/pull/1488/files.

June 15th, 2020 is another important date for TorchMetrics: it marks Nicki Skafte's first contribution to the PyTorch Lightning metrics package, a bugfix.

All of these changes were then released in PyTorch Lightning 0.8.0.

After the release of 0.8.0, Nicki ramped up his contributions, ranging from adding missing scikit-learn metrics, to completely new PyTorch-native metrics such as embedding similarity, to our first API refactor, a 26x speed-up of our tests, and the introduction of MetricCollection. It is safe to say that Nicki was a core developer of the metrics subpackage by this point!

With the release of PyTorch Lightning 0.10.0, another important milestone was passed: a complete API overhaul by Teddy Koker and Ananya Harsh Jha. From then on, metrics implemented the now well-known update/compute API:

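```python
import torch
from torchmetrics import Metric  # in PyTorch Lightning 0.10.0: pytorch_lightning.metrics


class MyMetric(Metric):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        # register the metric's internal states here
        ...

    def update(self, preds: torch.Tensor, target: torch.Tensor) -> None:
        # accumulate statistics from one batch into the internal states
        ...

    def compute(self) -> torch.Tensor:
        # compute the final metric value from the accumulated states
        ...
```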

Here, a Metric holds an internal state that is updated by every call to update. In many cases, this internal representation is far more memory-efficient than storing all samples and waiting for a call to compute. This makes it possible to compute metrics over datasets that don't fit in memory, even though a correct result depends on the entire dataset. This is one of the downsides of scikit-learn: it doesn't implement batched metric calculation, which is crucial for deep learning. The internal state is also what gets synced between GPUs when metrics are calculated in a distributed fashion, which keeps the result mathematically correct.
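The following framework-free sketch illustrates why syncing the state, rather than the per-process results, matters. MeanMetricSketch is a hypothetical class, not the TorchMetrics API: it keeps only a running sum and a count, and its merge method stands in for a distributed "sum" reduction across two simulated processes.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class MeanMetricSketch:
    """Hypothetical sketch of the update/compute pattern (not the TorchMetrics API)."""

    total: float = 0.0  # running sum of all values seen so far
    count: int = 0  # number of values seen so far

    def update(self, batch: Sequence[float]) -> None:
        # The state stays two numbers, however many samples are processed
        self.total += sum(batch)
        self.count += len(batch)

    def compute(self) -> float:
        # Derive the final value from the accumulated state
        return self.total / self.count

    def merge(self, other: "MeanMetricSketch") -> None:
        # Stand-in for a distributed "sum" reduction of the states
        self.total += other.total
        self.count += other.count


# Two simulated processes see differently sized shards of the data
rank0, rank1 = MeanMetricSketch(), MeanMetricSketch()
rank0.update([1.0, 2.0])
rank0.update([3.0])
rank1.update([10.0])

# Averaging the per-process means would give (2.0 + 10.0) / 2 = 6.0, which is wrong
rank0.merge(rank1)
print(rank0.compute())  # 4.0
```

In TorchMetrics itself, states like these are registered with self.add_state and given a reduction such as dist_reduce_fx="sum", so the framework performs the equivalent of merge across processes.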

Although the interface is nearly 3 years old at this point, it has not changed significantly, which means TorchMetrics had a stable user interface before it was even called TorchMetrics. On February 22nd, 2021, Jiri Borovec moved the existing metrics code to its own package (torchmetrics), amazingly while preserving our entire commit history.

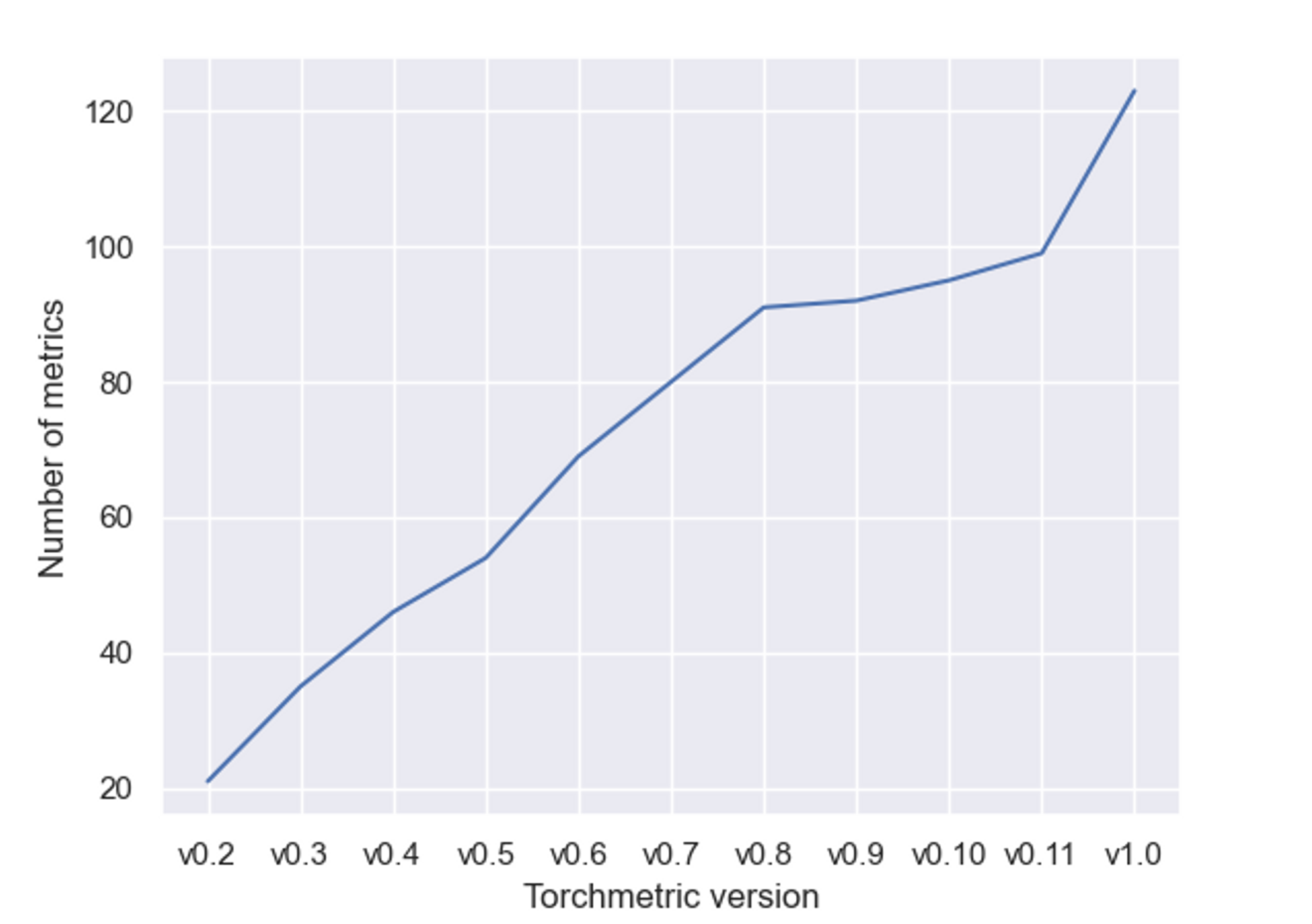

Since then, many more metrics and fixes have been contributed by many different contributors. The framework has grown from around 20 metrics to well over 100 in the newest release. Additionally, while the initial version only covered Regression and Classification metrics, TorchMetrics today covers many more domains: Audio, Detection, Image, Retrieval, Text, and more.

In February 2022 we then invited the most active contributors to write a paper with us. The result was the paper TorchMetrics - Measuring Reproducibility in PyTorch by Nicki Skafte, Jiri Borovec, Justus Schock, Ananya Harsh Jha, Teddy Koker, Luca Di Liello, Daniel Stancl, Changsheng Quan, Maxim Grechkin and William Falcon, which was published in the Journal of Open Source Software (JOSS) on February 11th, 2022.

After many more fixes and metrics (led by Nicki Skafte, the current Tech Lead of TorchMetrics), we are proud to finally announce the 1.0.0 release on July 5th, 2023, with over 100 metric implementations and a brand-new feature: plotting and visualizing metrics.

We are sure this only marks the beginning of a successful journey for TorchMetrics, and that there will be many more metrics to come!

Finally, if you would like to give open source contribution a try, we have the #want-to-contribute and #torchmetrics channels on the Lightning AI Discord, where you can ask questions and get guidance.