Lightning is handing out a blueprint for building apps on Stable Diffusion, and researchers are helping AI get better at cooperating with humans in strategic gameplay. Microsoft open-sources its ‘farm of the future’ toolkit, the Metaverse gains “social presence” with a new high-end headset, and AWS accelerates compute power with its latest release. Let’s dive in!

Featured Story

Lightning released Muse: an entirely open-source text-to-image generator built on Stable Diffusion, and a blueprint for building intelligent cloud applications based on diffusion models. Give it a try!

Research Highlights

🗺️ Researchers from Comcast and the University of Waterloo proposed DAAM, an attribution-mapping method for Stable Diffusion based on upscaling and aggregating cross-attention activations in the latent denoising subnetwork. The research centers on a question largely unexplored by the ML community: which parts of a generated image does each input word influence most? According to the authors, DAAM is the first method to derive and evaluate an unsupervised, open-vocabulary semantic segmentation approach for generated images, and it offers insight into how the words in a prompt relate to regions of the resulting image.
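
For readers who want the gist in code, here is a minimal pure-Python sketch of the aggregation idea. It is not the DAAM implementation: one word's cross-attention maps, captured at different resolutions, are upscaled to a common size, summed, and thresholded into a mask. The helper names (`upscale_nearest`, `aggregate_heat_map`, `segment`) are hypothetical. We use nearest-neighbour upscaling for simplicity (the paper's interpolation scheme may differ), and normalising by the peak value before thresholding is our assumption.

```python
from typing import List

Grid = List[List[float]]


def upscale_nearest(grid: Grid, size: int) -> Grid:
    """Nearest-neighbour upscaling of a square n x n grid to size x size (size must be a multiple of n)."""
    n = len(grid)
    if size % n != 0:
        raise ValueError("size must be a multiple of the grid side")
    factor = size // n
    return [[grid[i // factor][j // factor] for j in range(size)] for i in range(size)]


def aggregate_heat_map(attn_maps: List[Grid], size: int) -> Grid:
    """Sum one word's cross-attention maps (collected across layers, heads and timesteps) at a common resolution."""
    total = [[0.0] * size for _ in range(size)]
    for grid in attn_maps:
        up = upscale_nearest(grid, size)
        for i in range(size):
            for j in range(size):
                total[i][j] += up[i][j]
    return total


def segment(heat: Grid, tau: float) -> List[List[int]]:
    """Binary mask of pixels whose peak-normalised heat is at least tau (the normalisation is an assumption)."""
    peak = max(max(row) for row in heat)
    if peak <= 0:
        return [[0] * len(row) for row in heat]
    return [[1 if v / peak >= tau else 0 for v in row] for row in heat]


# Toy example: a coarse 2x2 map and a finer 4x4 map for the same word.
coarse = [[0.0, 1.0],
          [0.0, 0.0]]
fine = [[0.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 1.0],
        [0.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.0]]
heat = aggregate_heat_map([coarse, fine], size=4)
mask = segment(heat, tau=0.75)
```

Regions that light up at several resolutions accumulate the most heat, which is why only the top-right cells where both maps agree survive the threshold in this toy case.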

🧠 Researchers from the University of Amsterdam introduced LieGG, a method to retrieve the generators of symmetry groups that a neural network has learned from data. The research, submitted to NeurIPS 2022, starts from the position that when symmetries are not built into a model beforehand, robust networks should learn them directly from the data in order to fit the task function. To study this, the researchers propose a method that extracts the learned symmetries in the form of the corresponding Lie group generators and evaluates how invariant the network is to them. Using LieGG, they find that deeper networks are better symmetry learners than wider ones, that networks progressively refine symmetries from shallow to deep layers, and that the number of parameters matters.
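
The condition LieGG builds on fits in a few lines. If a function f is invariant to a one-parameter Lie group with generator h, then grad f(x)^T h x = 0 for every input x, and that condition is linear in h: each sample contributes one row vec(grad f(x) x^T). As we understand the paper, LieGG stacks such rows using the network's gradients and recovers generators from the (approximate) null space of the resulting matrix. The sketch below is only an illustration under those assumptions: it uses a toy f instead of a trained network, hypothetical helper names, finite-difference gradients, and it merely checks candidate generators rather than solving for the null space.

```python
from typing import Callable, List

Vec = List[float]
Mat = List[List[float]]


def numerical_grad(f: Callable[[Vec], float], x: Vec, eps: float = 1e-6) -> Vec:
    """Central finite-difference estimate of the gradient of f at x."""
    grad = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += eps
        xm[i] -= eps
        grad.append((f(xp) - f(xm)) / (2 * eps))
    return grad


def constraint_row(f: Callable[[Vec], float], x: Vec) -> Vec:
    """Row-major vec(grad f(x) x^T); its dot product with vec(h) equals grad f(x)^T h x."""
    g = numerical_grad(f, x)
    return [g[i] * x[j] for i in range(len(x)) for j in range(len(x))]


def max_residual(rows: List[Vec], h: Mat) -> float:
    """Largest |grad f(x)^T h x| over the samples; near zero means f looks invariant under h."""
    vec_h = [v for row in h for v in row]
    return max(abs(sum(r * v for r, v in zip(row, vec_h))) for row in rows)


# Toy "network": the squared norm, invariant to 2-D rotations but not to scaling.
def f(x: Vec) -> float:
    return x[0] ** 2 + x[1] ** 2


samples = [[1.0, 2.0], [-0.5, 0.3], [3.0, -1.0]]
rows = [constraint_row(f, x) for x in samples]

rotation = [[0.0, -1.0], [1.0, 0.0]]  # generator of 2-D rotations
scaling = [[1.0, 0.0], [0.0, 1.0]]    # generator of uniform scaling
print(max_residual(rows, rotation), max_residual(rows, scaling))
```

The rotation generator leaves a residual close to zero, while the scaling generator does not, matching the fact that the squared norm is rotation-invariant but not scale-invariant.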

🥒 Researchers from Meta and MIT introduced DiL-piKL, an algorithm that addresses a shortcoming of AI in strategy games: cooperating with humans, not just beating them. To cooperate, an AI must understand human conventions, so DiL-piKL combines self-play reinforcement learning (RL) with human data. By merging human imitation, planning, and RL, the researchers trained an agent for no-press Diplomacy that placed first in a tournament with human players.
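
At the heart of piKL-style planning is a KL-regularised objective: choose a policy that maximises expected value while staying close to an "anchor" policy learned by imitating human play. The maximiser of E_pi[Q] − λ·KL(pi ‖ anchor) has the closed form pi(a) ∝ anchor(a)·exp(Q(a)/λ). The sketch below computes only that single step, with made-up actions and values; as we understand it, the full DiL-piKL algorithm additionally samples λ from a distribution and runs iterative regret minimisation across players, none of which is shown. The function name `pikl_policy` and the example actions are hypothetical.

```python
import math
from typing import Dict


def pikl_policy(anchor: Dict[str, float], q_values: Dict[str, float], lam: float) -> Dict[str, float]:
    """Return pi(a) proportional to anchor(a) * exp(Q(a) / lam).

    This distribution maximises E_pi[Q] - lam * KL(pi || anchor).
    """
    if lam <= 0:
        raise ValueError("lam must be positive")
    # Subtract the max Q before exponentiating to avoid overflow when lam is small.
    q_max = max(q_values[a] for a in anchor)
    weights = {a: p * math.exp((q_values[a] - q_max) / lam) for a, p in anchor.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


# Hypothetical example: a human-like anchor that mostly holds, and values that favour attacking.
anchor = {"hold": 0.7, "attack": 0.3}
q_values = {"hold": 0.0, "attack": 1.0}

near_human = pikl_policy(anchor, q_values, lam=100.0)  # strong regularisation: stays close to the anchor
greedy = pikl_policy(anchor, q_values, lam=0.05)       # weak regularisation: concentrates on "attack"
print(near_human, greedy)
```

The single parameter λ trades off human-likeness against raw value, which is the knob that lets an agent stay predictable to human partners while still playing well.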

ML Engineering Highlights

🎮 Mark Zuckerberg and the executives at Meta’s Reality Labs introduced several exciting advancements in VR at this week’s Meta Connect 2022. Most notably, the team unveiled Meta Quest Pro, a high-end VR headset with inward-facing sensors for eye tracking and for capturing natural facial expressions: a significant investment toward creating more “social presence” and realizing the promise of the Metaverse.

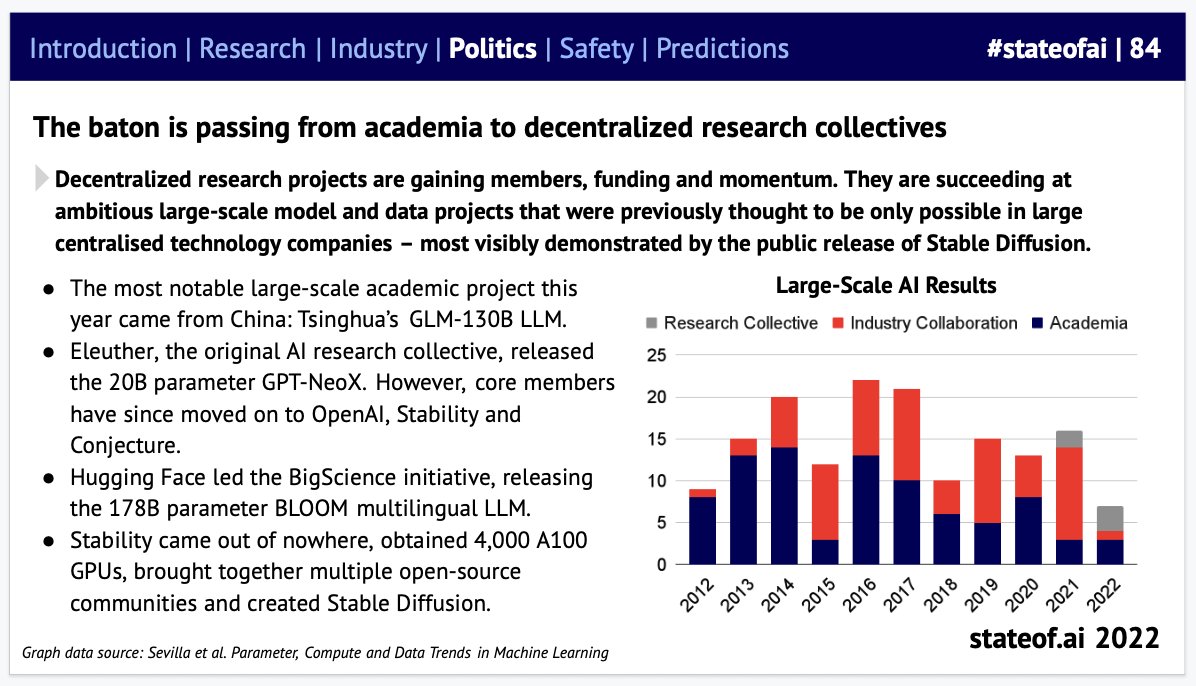

🗞️ The 5th annual State of AI Report, produced by AI investors Nathan Benaich and Ian Hogarth, has been released. Key themes for 2022 include the rise of independent research labs, AI safety, and China’s dominance in ML research.

💽 AWS announced the general availability of Amazon Elastic Compute Cloud (Amazon EC2) Trn1 instances, powered by the company’s Trainium chips. Trainium chips were first introduced in December 2020 and are designed specifically for “high-performance ML training applications in the cloud.” AWS claims that Trn1 instances can scale up to 30,000 Trainium accelerators, the equivalent of a supercomputer with 6.3 exaflops of compute.

Open Source Highlights

☁️ Google Cloud introduced the OpenXLA open source machine learning compiler ecosystem at its Next ’22 conference. By allowing developers to select the frameworks and hardware they want for their ML projects, OpenXLA attempts to address the problem of framework and hardware incompatibilities, which can hinder the development of machine learning algorithms. OpenXLA is a community-led and open source ecosystem of ML compiler and infrastructure projects co-developed by Google and other AI/ML developers including AMD, Arm, Meta, NVIDIA, AWS, Intel, and Apple.

🚜 Microsoft open-sourced FarmVibes.AI, a collection of artificial intelligence models that farm operators can use to perform tasks such as planting crops more efficiently. As part of a broader Microsoft program known as Project FarmVibes, FarmVibes.AI includes four AI algorithms designed to help farm operators collect data about their crops and use it to optimize day-to-day work.

Tutorial of the Week

Interested in learning how to build intelligent cloud applications on top of large models like Stable Diffusion? We just released Muse, our open-source text-to-image generator, and a tutorial for building your own.

Community Spotlight

Want your work featured? Contact us on Slack or by email.

🎃 Lightning is participating in Hacktoberfest! Check out our call for contributors. We welcome contributions from anyone interested in building distributed, scalable Python apps, or in building an integration with an MLOps tool as either a Lightning Component or a Lightning App.

🚜 The Wadhwani Institute for Artificial Intelligence open-sourced a codebase for performing object detection using Lightning and Hydra. The project helps cotton farmers make better pest management decisions by providing pesticide advice based on photos of pests caught in specialised traps. To provide the correct advice, the specific pests in a photo must first be identified and then counted; the code in this repository focuses on that task.

🤫 Rumor has it that Lightning will be at NeurIPS this year! 👀 If you’re attending NeurIPS and are interested in exploring the transition from academia to industry (and whether or not that’s even a real thing), stay tuned for details.

Don’t Miss the Submission Deadline

- AISTATS 2023: The 26th International Conference on Artificial Intelligence and Statistics. Spring 2023. (Location TBD). Paper Submission Deadline: Fri Oct 14 2022 04:59:59 GMT-0700

- AAMAS 2023: The 22nd International Conference on Autonomous Agents and Multiagent Systems. May 29 – June 2, 2023. (London, UK) Paper Submission Deadline: Sat Oct 29 2022 04:59:59 GMT-0700

- CVPR 2023: The IEEE/CVF Conference on Computer Vision and Pattern Recognition. Jun 18-22, 2023. (Vancouver, Canada). Paper Submission Deadline: Fri Nov 11 2022 23:59:59 GMT-0800

Upcoming Conferences

- ICIP 2022: International Conference on Image Processing. Oct 16-19, 2022 (Bordeaux, France)

- IROS 2022: International Conference on Intelligent Robots and Systems. Oct 23-27, 2022 (Kyoto, Japan)

- NeurIPS 2022: Thirty-sixth Conference on Neural Information Processing Systems. Nov 28 – Dec 9, 2022 (New Orleans, Louisiana)

- PyTorch Conference: Brings together leading academics, researchers, and developers from the machine learning community to learn about the latest PyTorch software releases. Dec 2, 2022 (New Orleans, Louisiana)