Support for Apple Silicon Processors in PyTorch, with Lightning

TL;DR: This tutorial shows you how to train models faster on Apple’s M1 or M2 chips.

In May 2022, PyTorch announced experimental support for Apple Silicon processors through the Metal Performance Shaders (MPS) backend, which shipped in PyTorch 1.12. If you own an Apple computer with an M1 or M2 chip and have the latest version of PyTorch installed, you can now train models on its GPU instead of the CPU, which is typically faster.

You can use the MPS device in PyTorch like so:
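A minimal sketch, assuming PyTorch 1.12 or newer is installed: it picks the MPS device when it is available and otherwise falls back to the CPU, then places a tensor and a small model on that device. The `Linear` layer and the tensor shapes are illustrative only.

```python
import torch

# Use the MPS device when this PyTorch build and machine support it;
# otherwise fall back to the CPU so the script still runs elsewhere.
if torch.backends.mps.is_available():
    device = torch.device("mps")
else:
    device = torch.device("cpu")

# Create a tensor directly on the chosen device and run an op on it.
x = torch.ones(5, device=device)
y = x * 2

# Move a model to the same device; its forward pass then runs there too.
model = torch.nn.Linear(5, 1).to(device)
pred = model(y)

print(device, pred.shape)
```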

Even better news: in the latest 1.7 release of PyTorch Lightning, we made it super easy to switch to the MPS backend without any code changes!

Support for this accelerator is currently marked as experimental in PyTorch. Because many operators are still missing, you may run into a few rough edges. PyTorch and Lightning will continue to improve support for Apple Silicon, so stay tuned for future releases!

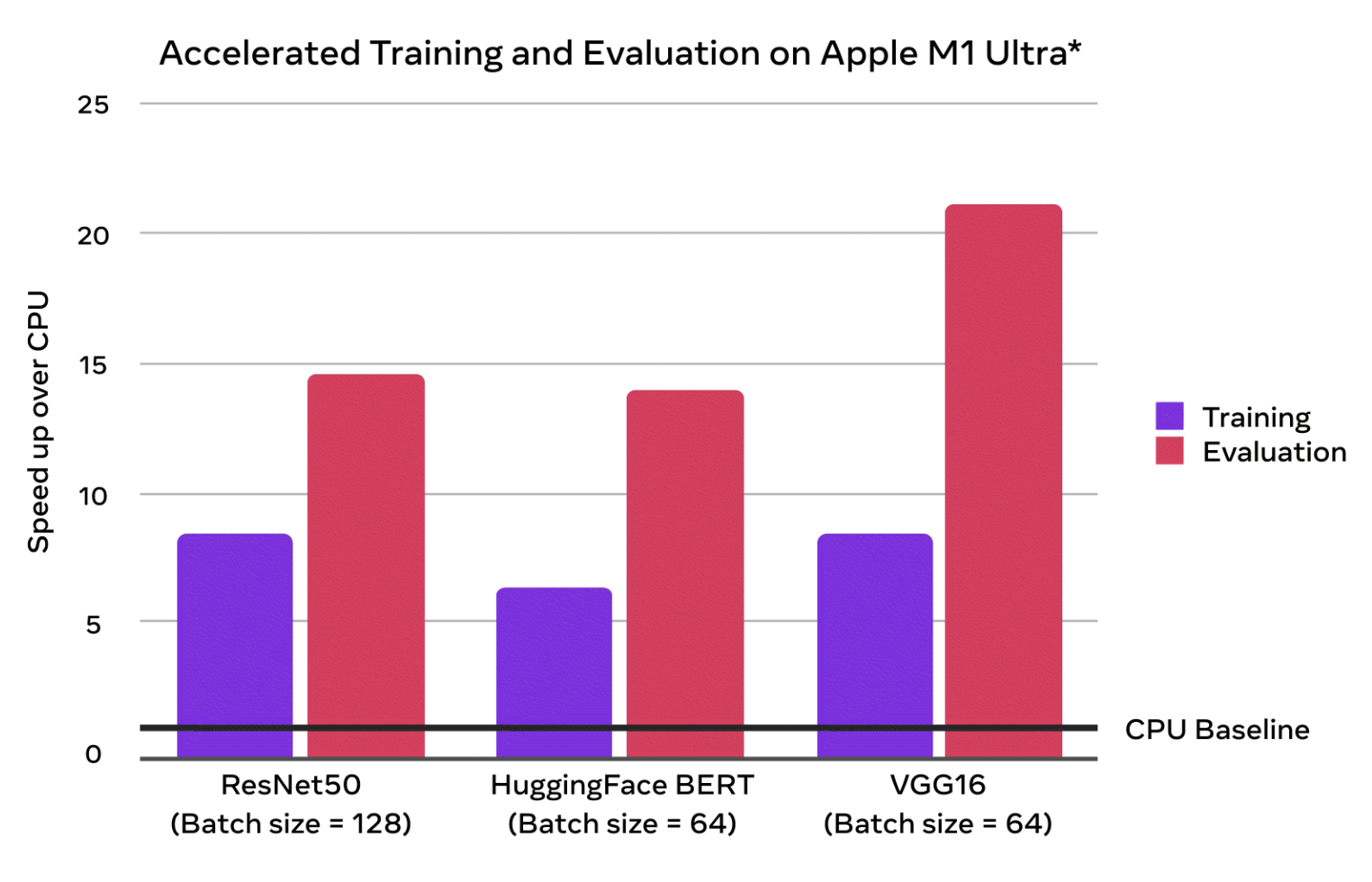

The PyTorch team itself has run extensive benchmarks using the MPS backend and demonstrated significant speedups over the CPU version.

PyTorch shows the speedup MPS brings compared to running on CPU. Source: pytorch.org

Since the initial release in May, the community has published more performance benchmarks with various devices and models, for example:

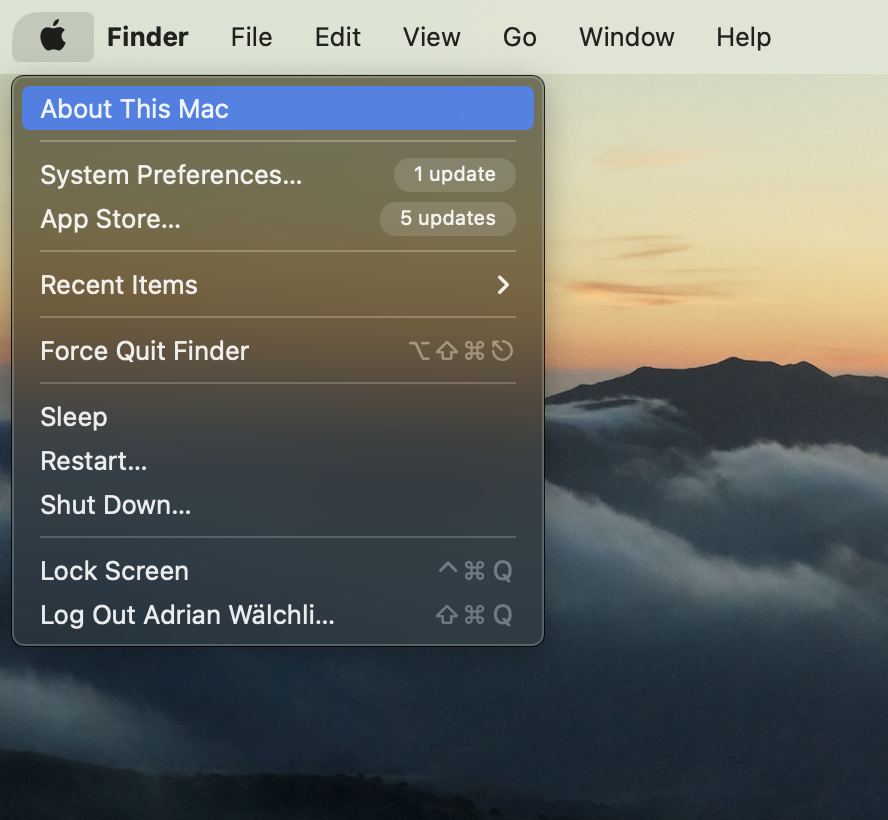

Does My Mac Support It?

It is easy to find out if your Mac has an M1 or M2 chip inside. In the top left corner of your screen, click the Apple symbol and go to “About This Mac”.

In the popup window, you see a summary of your Mac including the chip name. If it says M1 or M2, you can run PyTorch and Lightning code using the MPS backend!

Important before you install Lightning and/or PyTorch: If you are using Anaconda/Miniconda for your virtual environments, you should select the Apple M1 installation, not “Intel”. Otherwise, you won’t be able to use the MPS backend in PyTorch (and Lightning). Here is a simple check to make sure your Python isn’t getting tricked:
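The check can be run as a short Python script, using the interpreter from the environment you intend to train in. It relies only on the standard library's `platform` module.

```python
import platform

# Report the processor name as seen by this Python interpreter.
# An interpreter running under Intel emulation reports an Intel
# processor even on an Apple Silicon machine.
processor = platform.processor()
print(processor)
```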

If this prints “arm”, you’re good! If it prints “i386”, Python thinks you are on an Intel processor, and that’s no good! In this case, you should reinstall conda with ARM support.

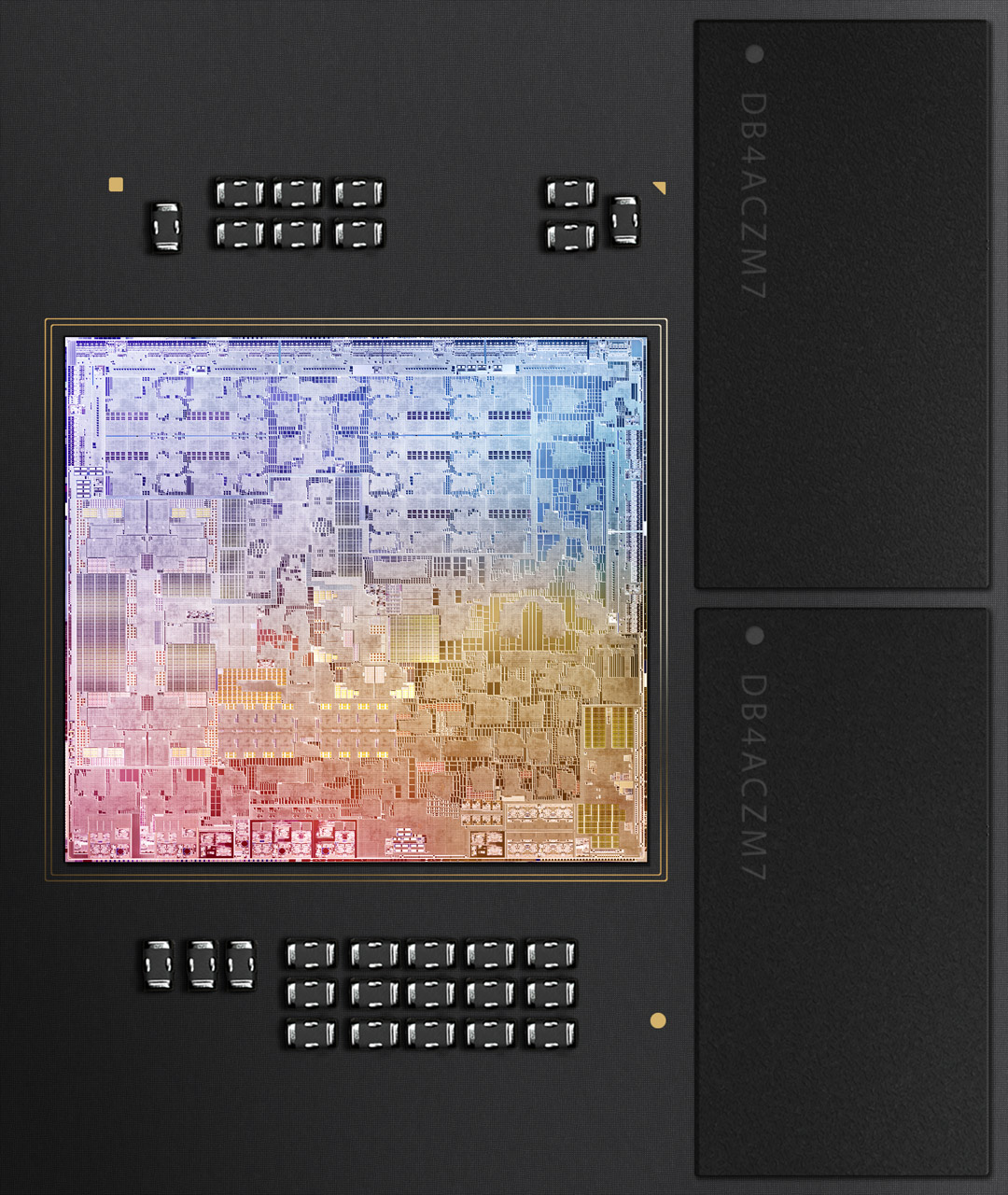

How Does Apple Silicon Work?

Apple Silicon refers to Apple’s new system on a chip (SoC) processors launched in late 2020. An SoC is a design that puts all the important components of a computer onto a single chip. This includes the main CPU cores, the GPU (for graphics and AI), a shared memory that all components can access directly, I/O controllers, storage controllers, and so on. Because all these components are on a single chip and very close together, the circuits can be tightly integrated and optimized for better performance.

Apple M2 Chip inside the MacBook Pro 13. Source: Apple

Among the components on this chip is Apple’s GPU, which applications program through the Metal API. Its main purpose is rendering graphics to the screen. However, the GPU cores can also be used by applications for general-purpose computation, and they are especially useful when computations can be parallelized to a high degree, as in a tensor library like PyTorch! This is what the MPS backend does: it maps the supported torch operations (matrix multiplication, convolution, etc.) in your computational graph to special kernels implemented in Apple’s Metal Shading Language.

PyTorch has already integrated the kernels for many common operations, but not all of them yet. It will take some time until this effort is completed, so stay tuned for future updates.

If you are running into issues with unsupported ops, you can try to upgrade the PyTorch package to the nightly version by selecting “Preview (Nightly)” on the PyTorch website, which should come with more improvements to MPS support.
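Another option, sketched below, is PyTorch's CPU fallback for operators that are not yet implemented on MPS. It is enabled through the `PYTORCH_ENABLE_MPS_FALLBACK` environment variable, which PyTorch's own error message for unsupported MPS ops suggests as a temporary workaround. Setting it from Python before the first `import torch` is an assumption about the simplest way to apply it; exporting it in your shell before launching Python should work as well. Ops that fall back run on the CPU, so expect them to be slower.

```python
import os

# Set the variable before the first `import torch` so it is already
# in place when PyTorch loads.
os.environ["PYTORCH_ENABLE_MPS_FALLBACK"] = "1"

import torch

device = torch.device("mps" if torch.backends.mps.is_available() else "cpu")

# Ops with an MPS kernel run on the GPU; with the fallback enabled,
# ops without one are executed on the CPU instead of raising an error.
x = torch.randn(4, 4, device=device)
print(torch.linalg.norm(x).item())
```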

Conclusion

We hope that users who rock a Mac M1 or M2 model get a kick out of this release! With the latest advancements in PyTorch and Lightning, you can develop models even faster right on your laptop, without the boilerplate. And when it is time to crank things up a notch, remember that Lightning AI has got you covered: It is easier than ever to bring your models to the cloud to train and deploy at scale, on beefy hardware that will crush your M1 Max.