Mean-Average-Precision (mAP)

Module Interface

- class torchmetrics.detection.mean_ap.MeanAveragePrecision(box_format='xyxy', iou_type='bbox', iou_thresholds=None, rec_thresholds=None, max_detection_thresholds=None, class_metrics=False, **kwargs)

Compute the Mean-Average-Precision (mAP) and Mean-Average-Recall (mAR) for object detection predictions.

$$\text{mAP} = \frac{1}{n} \sum_{i=1}^{n} AP_i$$

where $AP_i$ is the average precision for class $i$ and $n$ is the number of classes. The average precision is defined as the area under the precision-recall curve. If the argument `class_metrics` is set to `True`, the metric will also return the mAP/mAR per class.

As input to `forward` and `update`, the metric accepts the following input:

- `preds` (`List`): A list of dictionaries, one per image. Each dictionary should contain the following key-values:
  - `boxes`: (`FloatTensor`) of shape `(num_boxes, 4)` containing `num_boxes` detection boxes in the format specified in the constructor. By default, this method expects `(xmin, ymin, xmax, ymax)` in absolute image coordinates.
  - `scores`: (`FloatTensor`) of shape `(num_boxes)` containing detection scores for the boxes.
  - `labels`: (`IntTensor`) of shape `(num_boxes)` containing 0-indexed detection classes for the boxes.
  - `masks`: (`bool`) of shape `(num_boxes, image_height, image_width)` containing boolean masks. Only required when `iou_type="segm"`.

- `target` (`List`): A list of dictionaries, one per image. Each dictionary should contain the following key-values:
  - `boxes`: (`FloatTensor`) of shape `(num_boxes, 4)` containing `num_boxes` ground truth boxes in the format specified in the constructor. By default, this method expects `(xmin, ymin, xmax, ymax)` in absolute image coordinates.
  - `labels`: (`IntTensor`) of shape `(num_boxes)` containing 0-indexed ground truth classes for the boxes.
  - `masks`: (`bool`) of shape `(num_boxes, image_height, image_width)` containing boolean masks. Only required when `iou_type="segm"`.
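The box layouts mentioned above can be illustrated with a small conversion sketch. It assumes that `xywh` means `(xmin, ymin, width, height)` and `cxcywh` means `(center_x, center_y, width, height)`, which is the convention used by torchvision's box utilities; the helper names are hypothetical and not part of torchmetrics.

```python
from typing import List


def xywh_to_xyxy(box: List[float]) -> List[float]:
    """Convert (xmin, ymin, width, height) to (xmin, ymin, xmax, ymax)."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


def cxcywh_to_xyxy(box: List[float]) -> List[float]:
    """Convert (center_x, center_y, width, height) to (xmin, ymin, xmax, ymax)."""
    cx, cy, w, h = box
    return [cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2]


# The same ground truth box expressed in the two alternative layouts
# (assumed conventions, see the note above).
box_from_xywh = xywh_to_xyxy([214.0, 41.0, 348.0, 244.0])
box_from_cxcywh = cxcywh_to_xyxy([388.0, 163.0, 348.0, 244.0])
```

Both calls describe the box `[214.0, 41.0, 562.0, 285.0]` in the default `xyxy` layout. In practice the conversion is not needed by hand, since the `box_format` constructor argument tells the metric which layout the inputs use.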

As output of `forward` and `compute`, the metric returns the following output:

- `map_dict`: A dictionary containing the following key-values:
  - `map`: (`Tensor`)
  - `map_small`: (`Tensor`)
  - `map_medium`: (`Tensor`)
  - `map_large`: (`Tensor`)
  - `mar_1`: (`Tensor`)
  - `mar_10`: (`Tensor`)
  - `mar_100`: (`Tensor`)
  - `mar_small`: (`Tensor`)
  - `mar_medium`: (`Tensor`)
  - `mar_large`: (`Tensor`)
  - `map_50`: (`Tensor`) (-1 if 0.5 not in the list of IoU thresholds)
  - `map_75`: (`Tensor`) (-1 if 0.75 not in the list of IoU thresholds)
  - `map_per_class`: (`Tensor`) (-1 if class metrics are disabled)
  - `mar_100_per_class`: (`Tensor`) (-1 if class metrics are disabled)
  - `classes`: (`Tensor`)
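As a rough illustration of the definition of mAP as the mean of per-class average precisions, and of the `-1` sentinel used in the output dictionary, the following sketch averages a list of per-class values while skipping unavailable entries. The function name and the input values are hypothetical, and plain floats stand in for tensors; pycocotools performs the averaging at a finer granularity, so this is a simplification rather than the library's procedure.

```python
from typing import Sequence


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    """Average per-class AP values, ignoring entries marked with the -1 sentinel."""
    valid = [ap for ap in per_class_ap if ap >= 0.0]
    # Follow the documented convention: -1 signals "not available".
    return sum(valid) / len(valid) if valid else -1.0


# Hypothetical per-class AP values for three classes; the last one is unavailable.
per_class_ap = [0.6, 0.8, -1.0]
map_value = mean_average_precision(per_class_ap)
```

Here `map_value` is the mean of the two available entries. When reading a real result dictionary, the same check against `-1` is a simple way to tell a missing value from a genuinely low score.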

For an example on how to use this metric check the torchmetrics mAP example.

Note

The `map` score is calculated with @[ IoU=self.iou_thresholds | area=all | max_dets=max_detection_thresholds ]. Caution: If the initialization parameters are changed, dictionary keys for mAR can change as well. The default properties are also accessible via fields and will raise an `AttributeError` if not available.

Note

This metric follows the mAP implementation of pycocotools, a standard implementation of the mAP metric for object detection.

Note

This metric requires torchvision version 0.8.0 or newer (with the corresponding torch version 1.7.0 or newer). It also requires pycocotools when `iou_type` is `segm`. Please install with `pip install torchvision` or `pip install torchmetrics[detection]`.

- Parameters

  - `box_format` (`str`) – Input format of given boxes. Supported formats are [`xyxy`, `xywh`, `cxcywh`].
  - `iou_type` (`str`) – Type of input (either masks or bounding boxes) used for computing IoU. Supported IoU types are `["bbox", "segm"]`. If using `"segm"`, masks should be provided (see `update()`).
  - `iou_thresholds` (`Optional[List[float]]`) – IoU thresholds for evaluation. If set to `None`, it corresponds to the stepped range `[0.5,...,0.95]` with step `0.05`. Otherwise, provide a list of floats.
  - `rec_thresholds` (`Optional[List[float]]`) – Recall thresholds for evaluation. If set to `None`, it corresponds to the stepped range `[0,...,1]` with step `0.01`. Otherwise, provide a list of floats.
  - `max_detection_thresholds` (`Optional[List[int]]`) – Thresholds on max detections per image. If set to `None`, the thresholds `[1, 10, 100]` are used. Otherwise, provide a list of ints.
  - `class_metrics` (`bool`) – Option to enable per-class metrics for mAP and mAR_100. Has a performance impact.
  - `kwargs` (`Any`) – Additional keyword arguments, see Advanced metric settings for more info.
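To make the documented defaults concrete, the stepped ranges described above can be written out explicitly. This is a sketch of equivalent lists built in plain Python; how the library constructs them internally is not shown in this page, so the construction below is an assumption.

```python
# Explicit equivalents of the documented defaults (used when the arguments are None).
iou_thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]  # 0.5, 0.55, ..., 0.95
rec_thresholds = [round(0.01 * i, 2) for i in range(101)]       # 0.0, 0.01, ..., 1.0
max_detection_thresholds = [1, 10, 100]

# These lists could then be passed to the constructor, for example:
# MeanAveragePrecision(iou_thresholds=iou_thresholds,
#                      rec_thresholds=rec_thresholds,
#                      max_detection_thresholds=max_detection_thresholds)
```

Passing a shorter list, such as `iou_thresholds=[0.5]`, restricts the evaluation to those thresholds; keys such as `map_75` are then reported as `-1`, as described in the output section.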

- Raises

  - `ModuleNotFoundError` – If `torchvision` is not installed or the installed version is lower than 0.8.0
  - `ModuleNotFoundError` – If `iou_type` is equal to `segm` and `pycocotools` is not installed
  - `ValueError` – If `class_metrics` is not a boolean
  - `ValueError` – If `preds` is not of type `List[Dict[str, Tensor]]`
  - `ValueError` – If `target` is not of type `List[Dict[str, Tensor]]`
  - `ValueError` – If `preds` and `target` are not of the same length
  - `ValueError` – If any of `preds.boxes`, `preds.scores` and `preds.labels` are not of the same length
  - `ValueError` – If any of `target.boxes` and `target.labels` are not of the same length
  - `ValueError` – If any box is not type float and of length 4
  - `ValueError` – If any class is not type int and of length 1
  - `ValueError` – If any score is not type float and of length 1

Example

    >>> from torch import tensor
    >>> from torchmetrics.detection import MeanAveragePrecision
    >>> preds = [
    ...   dict(
    ...     boxes=tensor([[258.0, 41.0, 606.0, 285.0]]),
    ...     scores=tensor([0.536]),
    ...     labels=tensor([0]),
    ...   )
    ... ]
    >>> target = [
    ...   dict(
    ...     boxes=tensor([[214.0, 41.0, 562.0, 285.0]]),
    ...     labels=tensor([0]),
    ...   )
    ... ]
    >>> metric = MeanAveragePrecision()
    >>> metric.update(preds, target)
    >>> from pprint import pprint
    >>> pprint(metric.compute())
    {'classes': tensor(0, dtype=torch.int32),
     'map': tensor(0.6000),
     'map_50': tensor(1.),
     'map_75': tensor(1.),
     'map_large': tensor(0.6000),
     'map_medium': tensor(-1.),
     'map_per_class': tensor(-1.),
     'map_small': tensor(-1.),
     'mar_1': tensor(0.6000),
     'mar_10': tensor(0.6000),
     'mar_100': tensor(0.6000),
     'mar_100_per_class': tensor(-1.),
     'mar_large': tensor(0.6000),
     'mar_medium': tensor(-1.),
     'mar_small': tensor(-1.)}
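The value `map = 0.6` in the example above can be checked by hand. The sketch below computes the IoU of the single predicted box and the single ground truth box, then counts how many of the default IoU thresholds it satisfies. It is a simplified illustration in plain Python, not the pycocotools procedure: it relies on the assumption that, with exactly one detection and one ground truth box, the average precision at a given threshold is 1 if the boxes match and 0 otherwise. The helper name is hypothetical.

```python
from typing import Sequence


def box_iou_xyxy(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


pred_box = [258.0, 41.0, 606.0, 285.0]
target_box = [214.0, 41.0, 562.0, 285.0]
iou = box_iou_xyxy(pred_box, target_box)  # 74176 / 95648, roughly 0.776

# Default IoU thresholds: 0.5, 0.55, ..., 0.95
thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]
matched = [t for t in thresholds if iou >= t]

# Fraction of thresholds at which the single detection counts as a match.
fraction_matched = len(matched) / len(thresholds)
```

The detection matches at the six thresholds from 0.5 to 0.75 and fails at 0.8 and above, so `fraction_matched` is 0.6. This is consistent with `map_50` and `map_75` both being 1 while `map` is 0.6.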

Initializes internal Module state, shared by both nn.Module and ScriptModule.

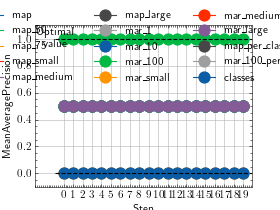

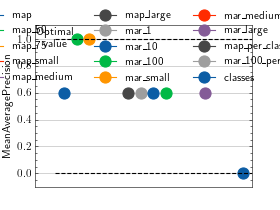

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters

  - `val` (`Union[Dict[str, Tensor], Sequence[Dict[str, Tensor]], None]`) – Either a single result from calling `metric.forward` or `metric.compute`, or a list of these results. If no value is provided, `metric.compute` is called automatically and its result is plotted.
  - `ax` (`Optional[Axes]`) – A matplotlib axis object. If provided, the plot is added to that axis.

- Return type

- Returns

Figure object and Axes object

- Raises

ModuleNotFoundError – If matplotlib is not installed

    >>> from torch import tensor
    >>> from torchmetrics.detection.mean_ap import MeanAveragePrecision
    >>> preds = [dict(
    ...     boxes=tensor([[258.0, 41.0, 606.0, 285.0]]),
    ...     scores=tensor([0.536]),
    ...     labels=tensor([0]),
    ... )]
    >>> target = [dict(
    ...     boxes=tensor([[214.0, 41.0, 562.0, 285.0]]),
    ...     labels=tensor([0]),
    ... )]
    >>> metric = MeanAveragePrecision()
    >>> metric.update(preds, target)
    >>> fig_, ax_ = metric.plot()


    >>> # Example plotting multiple values
    >>> import torch
    >>> from torchmetrics.detection.mean_ap import MeanAveragePrecision
    >>> preds = lambda: [dict(
    ...     boxes=torch.tensor([[258.0, 41.0, 606.0, 285.0]]) + torch.randint(10, (1,4)),
    ...     scores=torch.tensor([0.536]) + 0.1*torch.rand(1),
    ...     labels=torch.tensor([0]),
    ... )]
    >>> target = [dict(
    ...     boxes=torch.tensor([[214.0, 41.0, 562.0, 285.0]]),
    ...     labels=torch.tensor([0]),
    ... )]
    >>> metric = MeanAveragePrecision()
    >>> vals = []
    >>> for _ in range(20):
    ...     vals.append(metric(preds(), target))
    >>> fig_, ax_ = metric.plot(vals)
