CLIP Score

Module Interface

- class torchmetrics.multimodal.clip_score.CLIPScore(model_name_or_path='openai/clip-vit-large-patch14', **kwargs)

Calculates CLIP Score which is a text-to-image similarity metric.

CLIP Score is a reference-free metric that can be used to evaluate the correlation between a generated caption for an image and the actual content of the image. It has been found to be highly correlated with human judgement. The metric is defined as:

\[\text{CLIPScore}(I, C) = \max(100 \cdot \cos(E_I, E_C), 0)\]

which is the cosine similarity between the visual CLIP embedding \(E_I\) for an image \(I\) and the textual CLIP embedding \(E_C\) for a caption \(C\), scaled by 100 and clamped at zero. The score is bounded between 0 and 100, and the closer to 100 the better.
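The definition above can be sketched in a few lines of plain Python. This is only an illustration of the formula, not the library's implementation: the function name `clip_score_from_embeddings` is hypothetical, the embeddings are assumed to be already extracted and passed as plain lists of floats, and zero-norm vectors are not handled.

```python
import math
from typing import Sequence


def clip_score_from_embeddings(e_image: Sequence[float], e_caption: Sequence[float]) -> float:
    """Return max(100 * cos(E_I, E_C), 0) for two embedding vectors of equal length."""
    if len(e_image) != len(e_caption):
        raise ValueError("Embeddings must have the same dimensionality")
    dot = sum(a * b for a, b in zip(e_image, e_caption))
    norm_image = math.sqrt(sum(a * a for a in e_image))
    norm_caption = math.sqrt(sum(b * b for b in e_caption))
    cosine = dot / (norm_image * norm_caption)
    # Negative similarities are clamped to zero, so the score lies in [0, 100].
    return max(100.0 * cosine, 0.0)


# Toy vectors standing in for real CLIP embeddings (assumption for illustration).
aligned = clip_score_from_embeddings([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])  # parallel vectors
orthogonal = clip_score_from_embeddings([1.0, 0.0], [0.0, 1.0])
opposed = clip_score_from_embeddings([1.0, 0.0], [-1.0, 0.0])
```

Parallel embeddings give a score close to 100, while orthogonal and opposed embeddings both give 0 because of the clamp.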

Note

Metric is not scriptable

As input to forward and update, the metric accepts the following input:

- images (Tensor or list of tensors): tensor with images fed to the feature extractor. If a single tensor, it should have shape (N, C, H, W). If a list of tensors, each tensor should have shape (C, H, W). C is the number of channels, H and W are the height and width of the image.

- text (str or list of str): text to compare with the images, one for each image.

As output of forward and compute, the metric returns the following output:

- clip_score (Tensor): float scalar tensor with the mean CLIP score over samples
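To make "mean over samples" concrete, here is a minimal sketch of how per-sample scores could be averaged when they arrive in several batches. The class `RunningMeanScore` and its attributes are hypothetical and only illustrate the arithmetic; how the metric stores its state internally is an assumption, not something stated on this page.

```python
from typing import List


class RunningMeanScore:
    """Hypothetical helper: accumulates per-sample scores across batches."""

    def __init__(self) -> None:
        self.score_sum = 0.0  # sum of all per-sample scores seen so far
        self.n_samples = 0    # number of samples seen so far

    def update(self, batch_scores: List[float]) -> None:
        self.score_sum += sum(batch_scores)
        self.n_samples += len(batch_scores)

    def compute(self) -> float:
        if self.n_samples == 0:
            raise ValueError("compute() called before any update()")
        # Mean over samples, not a mean of per-batch means.
        return self.score_sum / self.n_samples


acc = RunningMeanScore()
acc.update([20.0, 30.0])  # first batch, two samples
acc.update([40.0])        # second batch, one sample
mean_score = acc.compute()
```

With batches of unequal size the two averages differ: the mean over the three samples is 30.0, whereas averaging the batch means (25.0 and 40.0) would give 32.5.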

- Parameters:

  - model_name_or_path (Literal['openai/clip-vit-base-patch16', 'openai/clip-vit-base-patch32', 'openai/clip-vit-large-patch14-336', 'openai/clip-vit-large-patch14']) – String indicating the version of the CLIP model to use. Available models are:

    - "openai/clip-vit-base-patch16"

    - "openai/clip-vit-base-patch32"

    - "openai/clip-vit-large-patch14-336"

    - "openai/clip-vit-large-patch14"

  - kwargs (Any) – Additional keyword arguments, see Advanced metric settings for more info.

- Raises:

ModuleNotFoundError – If transformers package is not installed or version is lower than 4.10.0

Example

    >>> import torch
    >>> from torchmetrics.multimodal.clip_score import CLIPScore
    >>> metric = CLIPScore(model_name_or_path="openai/clip-vit-base-patch16")
    >>> score = metric(torch.randint(255, (3, 224, 224), generator=torch.manual_seed(42)), "a photo of a cat")
    >>> score.detach()
    tensor(24.4255)

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

  - val (Union[Tensor, Sequence[Tensor], None]) – Either a single result from calling metric.forward or metric.compute, or a list of these results. If no value is provided, metric.compute is called automatically and its result is plotted.

  - ax (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

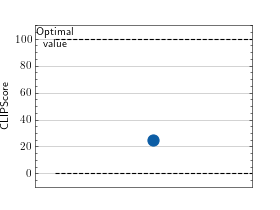

    >>> # Example plotting a single value
    >>> import torch
    >>> from torchmetrics.multimodal.clip_score import CLIPScore
    >>> metric = CLIPScore(model_name_or_path="openai/clip-vit-base-patch16")
    >>> metric.update(torch.randint(255, (3, 224, 224)), "a photo of a cat")
    >>> fig_, ax_ = metric.plot()

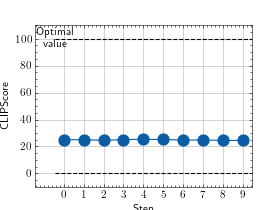

    >>> # Example plotting multiple values
    >>> import torch
    >>> from torchmetrics.multimodal.clip_score import CLIPScore
    >>> metric = CLIPScore(model_name_or_path="openai/clip-vit-base-patch16")
    >>> values = []
    >>> for _ in range(10):
    ...     values.append(metric(torch.randint(255, (3, 224, 224)), "a photo of a cat"))
    >>> fig_, ax_ = metric.plot(values)

- update(images, text)

Update CLIP score on a batch of images and text.

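- Parameters:

  - images (Union[Tensor, List[Tensor]]) – Either a single [N, C, H, W] tensor or a list of [C, H, W] tensors

  - text (Union[str, List[str]]) – Either a single caption or a list of captions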

- Raises:

ValueError – If not all images have format [C, H, W]

ValueError – If the number of images and captions do not match

- Return type:

None
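The two ValueError conditions listed for update can be sketched as a standalone check. This is an illustrative sketch, not the library's code: the helper `check_clip_inputs` is hypothetical, it works on shape tuples (for example `tuple(t.shape)` for each image tensor) so that it runs without any dependencies, and the error messages are invented for the example.

```python
from typing import List, Sequence, Tuple, Union

Shape = Tuple[int, ...]


def check_clip_inputs(image_shapes: Sequence[Shape], text: Union[str, List[str]]) -> List[str]:
    """Validate per-image shapes against captions and return the captions as a list."""
    # A single caption is treated as a list with one element.
    captions = [text] if isinstance(text, str) else list(text)
    # Every image must be three-dimensional: [C, H, W].
    if not all(len(shape) == 3 for shape in image_shapes):
        raise ValueError("Expected all images to have format [C, H, W]")
    # There must be exactly one caption per image.
    if len(image_shapes) != len(captions):
        raise ValueError(
            f"Expected the number of images and captions to match, "
            f"got {len(image_shapes)} and {len(captions)}"
        )
    return captions


captions = check_clip_inputs([(3, 224, 224), (3, 224, 224)], ["a photo of a cat", "a photo of a dog"])
```

Passing a shape such as `(224, 224)`, or two images with a single caption, raises ValueError in this sketch.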

Functional Interface

- torchmetrics.functional.multimodal.clip_score.clip_score(images, text, model_name_or_path='openai/clip-vit-large-patch14')

Calculate CLIP Score which is a text-to-image similarity metric.

CLIP Score is a reference-free metric that can be used to evaluate the correlation between a generated caption for an image and the actual content of the image. It has been found to be highly correlated with human judgement. The metric is defined as:

\[\text{CLIPScore}(I, C) = \max(100 \cdot \cos(E_I, E_C), 0)\]

which is the cosine similarity between the visual CLIP embedding \(E_I\) for an image \(I\) and the textual CLIP embedding \(E_C\) for a caption \(C\), scaled by 100 and clamped at zero. The score is bounded between 0 and 100, and the closer to 100 the better.

Note

Metric is not scriptable

- Parameters:

  - images (Union[Tensor, List[Tensor]]) – Either a single [N, C, H, W] tensor or a list of [C, H, W] tensors

  - text (Union[str, List[str]]) – Either a single caption or a list of captions

  - model_name_or_path (Literal['openai/clip-vit-base-patch16', 'openai/clip-vit-base-patch32', 'openai/clip-vit-large-patch14-336', 'openai/clip-vit-large-patch14']) – String indicating the version of the CLIP model to use. Available models are "openai/clip-vit-base-patch16", "openai/clip-vit-base-patch32", "openai/clip-vit-large-patch14-336" and "openai/clip-vit-large-patch14".

- Raises:

ModuleNotFoundError – If transformers package is not installed or version is lower than 4.10.0

ValueError – If not all images have format [C, H, W]

ValueError – If the number of images and captions do not match

- Return type:

Tensor

Example

    >>> import torch
    >>> _ = torch.manual_seed(42)
    >>> from torchmetrics.functional.multimodal import clip_score
    >>> score = clip_score(torch.randint(255, (3, 224, 224)), "a photo of a cat", "openai/clip-vit-base-patch16")
    >>> score.detach()
    tensor(24.4255)